API Gateway vs Load Balancer vs Reverse Proxy 🌟

#85: Break Into Edge Architecture (4 Minutes)


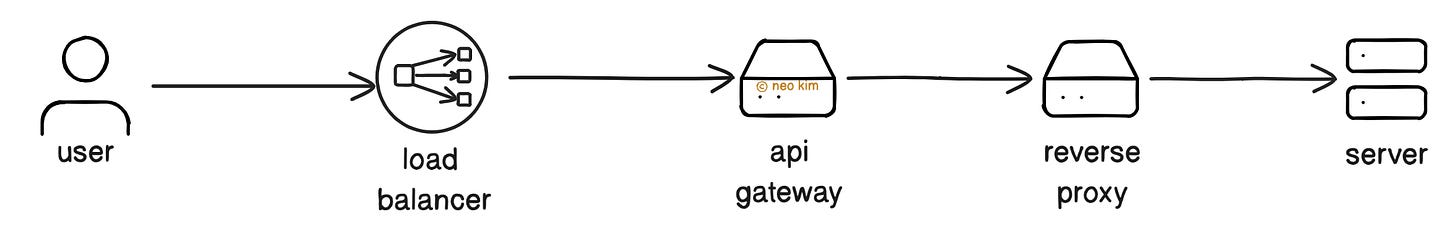

This post outlines the differences between a load balancer, API gateway, and reverse proxy. You will find references at the bottom of this page if you want to go deeper.


Once upon a time, a single server was enough to run an entire site.

The clients connected directly to it over the internet.

But as the internet became more popular, traffic to some sites exploded.

And it became extremely hard to scale those sites reliably.

So they added more servers and put a traffic management layer in front of them.

This includes components such as the load balancer, API gateway, and reverse proxy.

Yet you need to understand how they differ to use them well and keep the site reliable.

Onward.


Load Balancer

A load balancer distributes incoming traffic across a pool of servers so that no single server gets overloaded.

Think of the load balancer as a restaurant manager who makes sure each waiter handles a fair number of tables without getting overwhelmed.

Here’s how it works:

The client sends a request to the load balancer

The load balancer finds servers that are ready to accept the request

It then forwards the request to one of those servers, chosen by a routing algorithm

Because traffic only goes to servers that are ready, the site stays available even when one server fails.
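The three steps above can be sketched in a few lines of Python. This is a minimal sketch under assumptions: the `Backend` and `LoadBalancer` names are hypothetical, a boolean `healthy` flag stands in for a real periodic health check, and plain functions stand in for network calls to the servers.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Backend:
    # Hypothetical server: a name, the result of its last health check,
    # and a function that stands in for the real network call.
    name: str
    healthy: bool
    handle: Callable[[str], str]


class LoadBalancer:
    def __init__(self, backends: list[Backend]) -> None:
        self.backends = backends
        self.counter = 0  # used to rotate through the ready servers

    def ready_backends(self) -> list[Backend]:
        # Step 2: keep only servers that passed their last health check.
        return [b for b in self.backends if b.healthy]

    def forward(self, request: str) -> str:
        # Step 1: the client's request arrives here.
        ready = self.ready_backends()
        if not ready:
            raise RuntimeError("no healthy backends")
        # Step 3: pick one ready server (simple rotation) and forward the request.
        backend = ready[self.counter % len(ready)]
        self.counter += 1
        return backend.handle(request)


lb = LoadBalancer([
    Backend("a", True, lambda req: f"a handled {req}"),
    Backend("b", False, lambda req: f"b handled {req}"),  # failed its health check
    Backend("c", True, lambda req: f"c handled {req}"),
])
print(lb.forward("GET /"))  # a handled GET /
print(lb.forward("GET /"))  # c handled GET /
```

Server `b` never receives traffic while it's marked unhealthy, which is what keeps one failed server from taking the site down.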

Yet each service has a different workload and usage pattern. So it’s necessary to use different algorithms to route traffic.

Here are some of them:

Round-robin: sends requests to each server in turn, in a fixed order.

Least-connections: sends each request to the server with the fewest active connections.

IP-hashing: hashes the client’s IP address so the same client always reaches the same server (sticky sessions).
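Here’s one way to sketch the three algorithms, assuming a hypothetical pool of three servers and a hand-filled connection count (a real load balancer updates those counts as connections open and close). The IP hash uses SHA-256 rather than Python’s built-in `hash()`, because `hash()` on strings is randomized per process and wouldn’t stay sticky across restarts.

```python
import hashlib
import itertools

servers = ["server-a", "server-b", "server-c"]  # hypothetical pool

# Round-robin: hand out servers in a fixed, repeating order.
rotation = itertools.cycle(servers)


def pick_round_robin() -> str:
    return next(rotation)


# Least-connections: hypothetical live counts of open connections per server.
active_connections = {"server-a": 4, "server-b": 1, "server-c": 2}


def pick_least_connections() -> str:
    return min(active_connections, key=active_connections.get)


# IP-hashing: a stable hash of the client IP always maps to the same server.
def pick_ip_hash(client_ip: str) -> str:
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


print([pick_round_robin() for _ in range(4)])  # ['server-a', 'server-b', 'server-c', 'server-a']
print(pick_least_connections())                # server-b
print(pick_ip_hash("203.0.113.7") == pick_ip_hash("203.0.113.7"))  # True
```

One design caveat: plain modulo hashing remaps many clients whenever a server is added or removed. Consistent hashing is a common way to reduce that reshuffling.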

Popular load balancers include HAProxy, AWS Elastic Load Balancing (ELB), and Nginx.

Besides, load balancers work at different layers. Some operate at layer 4 (transport level), which means they route traffic using only IP addresses and port numbers. Others operate at layer 7 (application level), which means they can inspect details such as URLs or HTTP headers to route traffic.
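To make the layer 4 vs. layer 7 difference concrete, here’s a hypothetical sketch. The IP addresses, ports, and pool names are made up. The layer 4 function only sees the destination address and port, while the layer 7 function can branch on the URL path and headers (for HTTPS, that requires the balancer to decrypt the traffic first).

```python
from dataclasses import dataclass


@dataclass
class Connection:
    # What a layer 4 balancer can see: addresses and ports, not the HTTP content.
    dst_ip: str
    dst_port: int


@dataclass
class HttpRequest:
    # What a layer 7 balancer can see after parsing (and, for HTTPS, decrypting) the request.
    path: str
    headers: dict[str, str]


# Hypothetical layer 4 rules keyed by (destination IP, destination port).
L4_RULES = {
    ("10.0.0.10", 443): "web-pool",
    ("10.0.0.10", 5432): "db-pool",
}


def route_l4(conn: Connection) -> str:
    return L4_RULES.get((conn.dst_ip, conn.dst_port), "default-pool")


def route_l7(request: HttpRequest) -> str:
    # Layer 7 can branch on the URL path or on any HTTP header.
    if request.path.startswith("/api/"):
        return "api-pool"
    if request.headers.get("Accept", "").startswith("image/"):
        return "image-pool"
    return "web-pool"


print(route_l4(Connection("10.0.0.10", 5432)))   # db-pool
print(route_l7(HttpRequest("/api/orders", {})))  # api-pool
```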

A load balancer prevents server overload and improves response times. But it adds operational cost and uses extra resources. It could also become a single point of failure if configured incorrectly. So it’s important to design it for high availability and monitor it.

Let’s keep going!

API Gateway

The API Gateway acts as a single entry point to the site.

Imagine an API Gateway as the kitchen window of a busy restaurant. It’s where waiters pass orders to the kitchen. The window controls how fast orders get through so the kitchen isn’t overwhelmed, similar to rate limiting.
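The post doesn’t say which throttling algorithm a gateway uses. A token bucket is one common choice, so here’s a minimal sketch assuming one bucket per client and made-up limits (`rate=5`, `capacity=10`). Each request spends a token, and tokens refill at a fixed rate, which allows short bursts but caps the average rate.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests and `rate` requests per second on average."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so a short burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill tokens for the time that passed, but never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the gateway would reject this request, e.g. with HTTP 429


# One bucket per client (hypothetical client IDs, e.g. API keys).
buckets: dict[str, TokenBucket] = {}


def check_rate_limit(client_id: str) -> bool:
    if client_id not in buckets:
        buckets[client_id] = TokenBucket(rate=5, capacity=10)
    return buckets[client_id].allow()
```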

Here’s how it works:

The client sends a request to the API Gateway

The API Gateway throttles requests to avoid server overload and transforms data if necessary

It then routes the request to the correct microservices based on its URL path, HTTP headers, or query parameters

The API Gateway combines the responses from different microservices and responds to the client
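The routing, transformation, and aggregation steps above could look roughly like this. It’s a sketch under assumptions: the two services, their fields, and the `/users` and `/orders` prefixes are hypothetical, plain functions stand in for network calls, and throttling is left out to keep it short.

```python
from typing import Callable

Service = Callable[[str], dict]


# Hypothetical microservices; in a real system these would be network calls.
def user_service(path: str) -> dict:
    return {"id": 42, "full_name": "Ada Lovelace"}


def order_service(path: str) -> dict:
    return {"orders": [101, 102]}


# Routing table: URL path prefix -> microservice.
ROUTES: dict[str, Service] = {"/users": user_service, "/orders": order_service}


def route(path: str) -> dict:
    # Match whole path segments, so "/users/42" matches "/users" but "/usersX" does not.
    for prefix, service in ROUTES.items():
        if path == prefix or path.startswith((prefix + "/", prefix + "?")):
            return service(path)
    return {"status": 404, "error": "no route"}


def get_profile(user_id: int) -> dict:
    # Aggregation: one client request fans out to two services.
    user = route(f"/users/{user_id}")
    orders = route(f"/orders?user_id={user_id}")
    # Transformation: reshape internal fields into the format the client expects.
    return {"name": user["full_name"], "order_count": len(orders["orders"])}


print(get_profile(42))  # {'name': 'Ada Lovelace', 'order_count': 2}
```

The client makes one call and gets one response, while the gateway hides how many services sit behind it.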

Popular options for running an API Gateway include Kong, AWS API Gateway, and Google Cloud Apigee.

An API Gateway simplifies client interactions. Yet it slightly increases latency because of the extra network hop. It might also become a performance bottleneck if set up incorrectly. So it’s important to configure and scale it properly.

Ready for the next part?

Reverse Proxy

A reverse proxy sits in front of the backend servers and protects them by hiding them from clients. It terminates TLS, decrypting incoming traffic so the servers don’t have to, and caches responses to reduce server load.

Think of the reverse proxy as a kitchen host who accepts orders on behalf of the chefs. The host can group orders and reject those for dishes that aren’t available (filtering traffic).

Here’s how it works:

The client sends a request to the reverse proxy

It forwards the request to the server

The server responds to the reverse proxy

The reverse proxy then caches the response and returns it to the client
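A caching reverse proxy can be sketched as a wrapper around the origin server. Assumptions: `CachingReverseProxy` and the `origin` function are hypothetical, only `GET` responses are cached, and every entry lives for a fixed `ttl` in seconds. Real proxies such as Nginx also honor HTTP caching headers like `Cache-Control`, and TLS termination is left out here.

```python
import time
from typing import Callable


class CachingReverseProxy:
    """Sits in front of one origin server and caches GET responses for `ttl` seconds."""

    def __init__(self, origin: Callable[[str, str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.origin = origin
        self.ttl = ttl
        self.clock = clock
        self.cache: dict[str, tuple[float, str]] = {}  # path -> (expires_at, body)

    def handle(self, method: str, path: str) -> str:
        now = self.clock()
        if method == "GET":
            hit = self.cache.get(path)
            if hit and hit[0] > now:
                return hit[1]  # served from cache; the origin is not contacted
        # Forward to the origin; the client only ever talks to the proxy.
        body = self.origin(method, path)
        if method == "GET":
            self.cache[path] = (now + self.ttl, body)
        return body


def origin(method: str, path: str) -> str:
    # Hypothetical backend that would normally live on a private network.
    return f"rendered {path}"


proxy = CachingReverseProxy(origin, ttl=30)
proxy.handle("GET", "/home")  # cache miss: forwarded to the origin
proxy.handle("GET", "/home")  # cache hit: answered by the proxy
```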

Popular options for running a reverse proxy include Nginx, Apache HTTP Server, and Traefik.

A reverse proxy hides the backend server complexity and speeds up responses with caching. Yet it increases operational complexity and might become a single point of failure without redundancy. So it’s important to design it with failover and set up proper monitoring.

TL;DR

Here’s how a typical edge architecture looks:

Load balancer: distributes traffic evenly across servers

API gateway: routes, throttles, and aggregates calls to microservices

Reverse proxy: handles security and caching

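Together, they manage traffic reliably and securely in large-scale systems. Yet this isn’t the only way to combine them, and one tool can play several roles (Nginx, for example, works as both a load balancer and a reverse proxy). So choose them based on your scale and needs.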
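To see how the pieces could fit together, here’s a toy sketch that chains them, with each layer wrapping the next. The order (reverse proxy, then API gateway, then one load balancer per service) is one possible arrangement assumed for illustration. Service and instance names are hypothetical, and the cache never expires to keep it short.

```python
import itertools
from typing import Callable

Handler = Callable[[str], str]


def app_server(name: str) -> Handler:
    # Hypothetical application server instance.
    return lambda path: f"{name} served {path}"


def load_balancer(pool: list[Handler]) -> Handler:
    # Round-robin across identical instances of one service.
    rotation = itertools.cycle(pool)
    return lambda path: next(rotation)(path)


def api_gateway(services: dict[str, Handler]) -> Handler:
    # Route by the first path segment to that service's load balancer.
    def handle(path: str) -> str:
        segment = path.strip("/").split("/")[0]
        service = services.get(segment)
        return service(path) if service else "404 unknown service"
    return handle


def reverse_proxy(upstream: Handler) -> Handler:
    # Outermost layer: cache responses (TLS termination omitted).
    cache: dict[str, str] = {}
    def handle(path: str) -> str:
        if path not in cache:
            cache[path] = upstream(path)
        return cache[path]
    return handle


edge = reverse_proxy(
    api_gateway({
        "users": load_balancer([app_server("users-1"), app_server("users-2")]),
        "orders": load_balancer([app_server("orders-1")]),
    })
)
```

For example, `edge("/users/1")` returns `"users-1 served /users/1"`, the next `edge("/users/2")` goes to `users-2`, and repeating `edge("/users/1")` is answered from the proxy’s cache without reaching any server.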



References

Block diagrams created with Eraser
