How Dropbox Scaled to 100 Thousand Users in a Year After Launch

#43: Break Into Early Architecture of Dropbox (6 minutes)

This post outlines the architecture of Dropbox from its early days. If you want to learn more, scroll to the bottom and find the references.

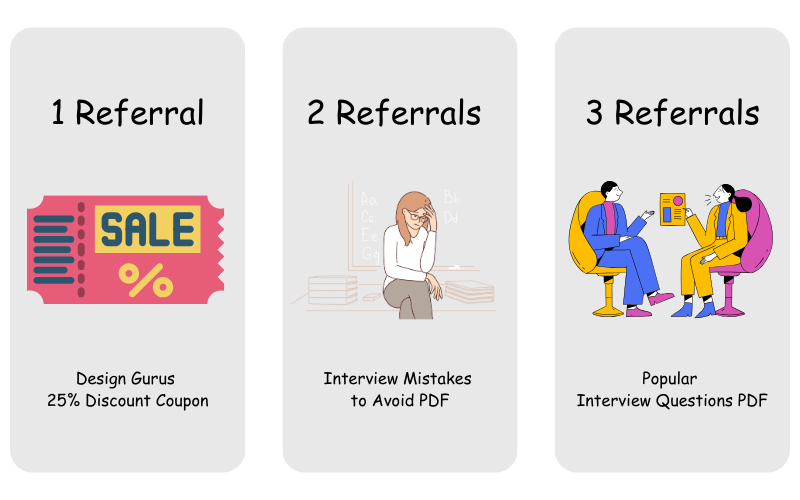

Disclaimer: This post is based on my research and might differ from real-world implementation.

March 2009.

Sunil bought a new iPhone.

But he found it frustrating to copy individual files from desktop to iPhone each time.

This was when he heard about a file-sharing service called Dropbox from a friend.

So he installed it immediately.

And he was dazzled by how simply it synchronized files across devices.

Dropbox is a cloud storage service with ACID requirements:

Atomicity: A large file uploaded to Dropbox must be available as a whole

Consistency: A file must be updated for all clients at the same time, and a deleted file in a shared folder shouldn’t be visible to any client

Isolation: Offline operations on files should be allowed

Durability: Data stored in Dropbox shouldn’t get damaged

They scaled Dropbox to 100 thousand users with 9 engineers and were able to handle millions of file synchronizations each day. Yet synchronizing files across many devices efficiently is hard.

Dropbox Architecture

Here’s how Dropbox scaled their architecture:

1. Uploading and Downloading Files

The Dropbox application running on desktop and mobile is called the client.

They use the client to watch for file changes and handle the upload-download logic.

They get about the same number of reads and writes to the server. That means file downloads and uploads happen at roughly the same rate.

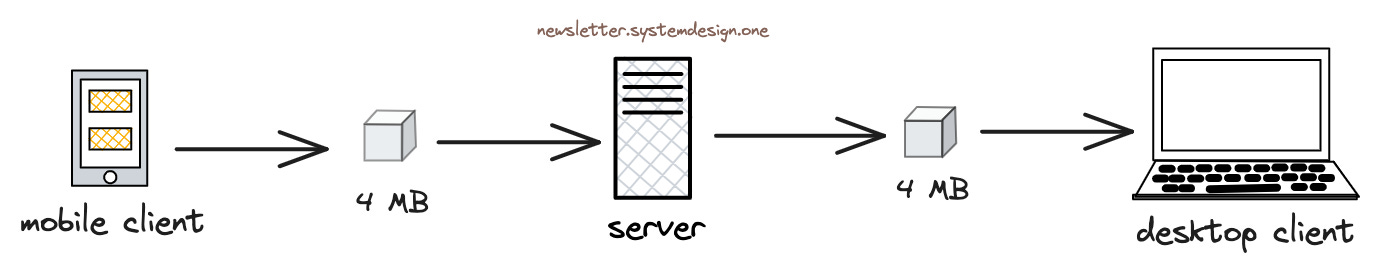

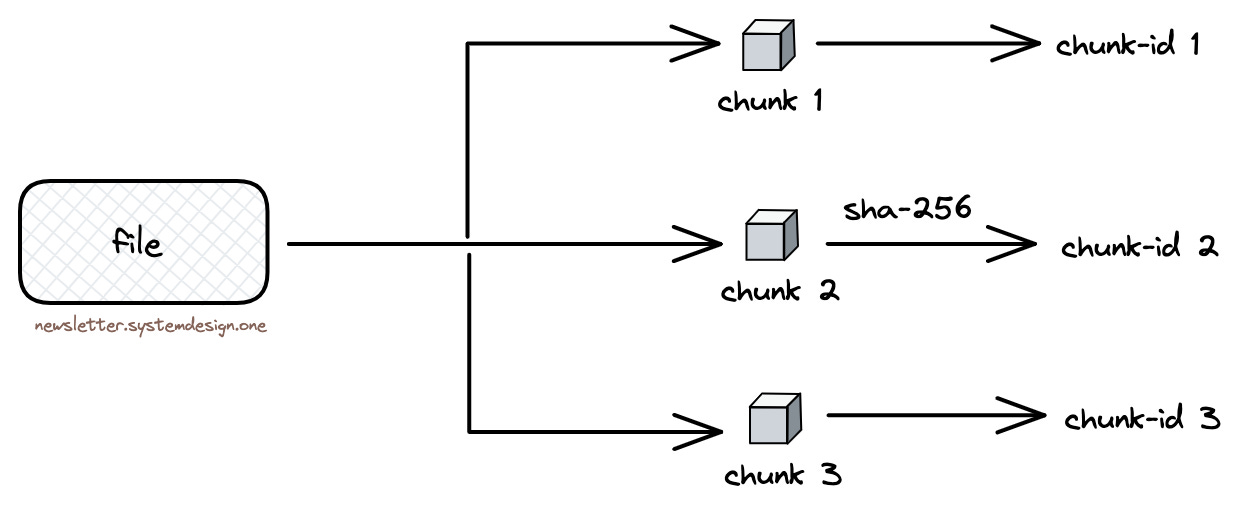

Yet network bandwidth is expensive and its usage grows with the number of clients. So they don’t transfer the entire file on every file change. Instead the client breaks files down into 4 MB chunks and uploads them to the server. Think of each chunk as a data block. Only the chunks that got modified are transferred, which reduces network bandwidth usage and makes it easier to resume an upload or download after a network interruption.
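
Here is a minimal sketch of the chunking idea. The function names are hypothetical, and comparing per-chunk SHA-256 digests is my assumption for how a client might spot a modified chunk, not Dropbox's actual client code.

```python
import hashlib

CHUNK_SIZE = 4 * 1024 * 1024  # 4 MB, the chunk size mentioned in this post


def split_into_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[bytes]:
    """Split a file's bytes into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def changed_chunk_indexes(old: bytes, new: bytes, chunk_size: int = CHUNK_SIZE) -> list[int]:
    """Return indexes of chunks in `new` that are new or differ from `old` at the same position."""
    old_hashes = [hashlib.sha256(c).digest() for c in split_into_chunks(old, chunk_size)]
    new_hashes = [hashlib.sha256(c).digest() for c in split_into_chunks(new, chunk_size)]
    return [
        i for i, digest in enumerate(new_hashes)
        if i >= len(old_hashes) or old_hashes[i] != digest
    ]
```

With fixed-size chunks, an edit that keeps the file length the same touches only the chunks it overlaps. An insertion near the start shifts every later chunk, which is one reason real sync clients can get more elaborate than this.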

They also use rsync to upload data when the diff is small, for simplicity. Rsync is an efficient file synchronization and transfer tool.

Besides, they give each chunk a unique ID by hashing it with SHA-256 to prevent duplicate data storage. They check whether two chunks have the same hash, and if so, both get mapped to the same data object in S3. This means if 2 people upload the same file, it’s stored only once on the backend, which reduces storage costs. SHA-256 is a secure hash algorithm that produces a 256-bit digest.
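
The deduplication step could look roughly like this. `BlockStore` is a hypothetical in-memory stand-in for the object store; it only illustrates keying data by its SHA-256 hash.

```python
import hashlib


class BlockStore:
    """In-memory stand-in for an object store keyed by chunk hash (hypothetical)."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, chunk: bytes) -> str:
        """Store a chunk and return its ID; identical chunks share one object."""
        chunk_id = hashlib.sha256(chunk).hexdigest()
        # Keep the bytes only if this hash has not been stored before.
        self._objects.setdefault(chunk_id, chunk)
        return chunk_id

    def get(self, chunk_id: str) -> bytes:
        return self._objects[chunk_id]

    def object_count(self) -> int:
        return len(self._objects)
```

Two uploads of the same bytes return the same ID and leave a single stored object, so the second upload costs no extra storage.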

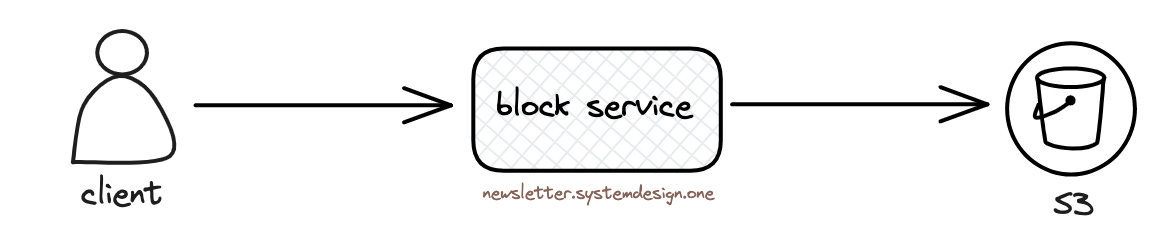

They store file data in Amazon S3 and replicate it for durability, and they run the block service to handle file uploads and downloads from the client. Amazon Simple Storage Service (S3) is an object storage service.

2. Synchronizing Files Between Devices

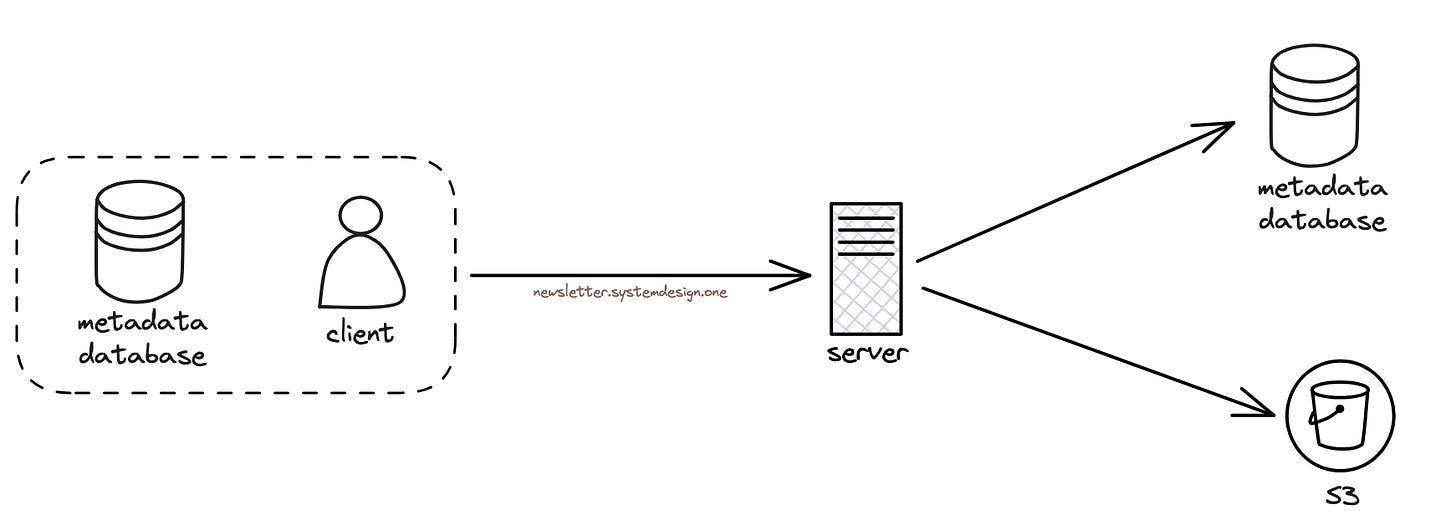

They run a metadata database on the client side. It stores information about files, file chunks, devices, and file edits.

They use the client metadata database to avoid extra network calls and make files available offline. It also helps to reconstruct a downloaded file from its chunks.
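
As a rough illustration of the reconstruction step, assume the client metadata records an ordered list of chunk IDs per file and that each ID is the chunk's SHA-256 hex digest. Both the function name and the dictionary layout are my assumptions.

```python
import hashlib


def reconstruct_file(chunk_ids: list[str], local_chunks: dict[str, bytes]) -> bytes:
    """Rebuild a file by joining its chunks in the order the metadata lists them."""
    parts = []
    for chunk_id in chunk_ids:
        chunk = local_chunks[chunk_id]  # raises KeyError if the chunk is not downloaded yet
        # Verify the chunk against its ID so a corrupted download is caught early.
        if hashlib.sha256(chunk).hexdigest() != chunk_id:
            raise ValueError(f"chunk {chunk_id} failed its integrity check")
        parts.append(chunk)
    return b"".join(parts)
```

Because the ordered ID list lives in the local metadata, the client can rebuild the file without asking the server which chunks belong to it.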

Besides, they run a version of the metadata database on the server using MySQL.

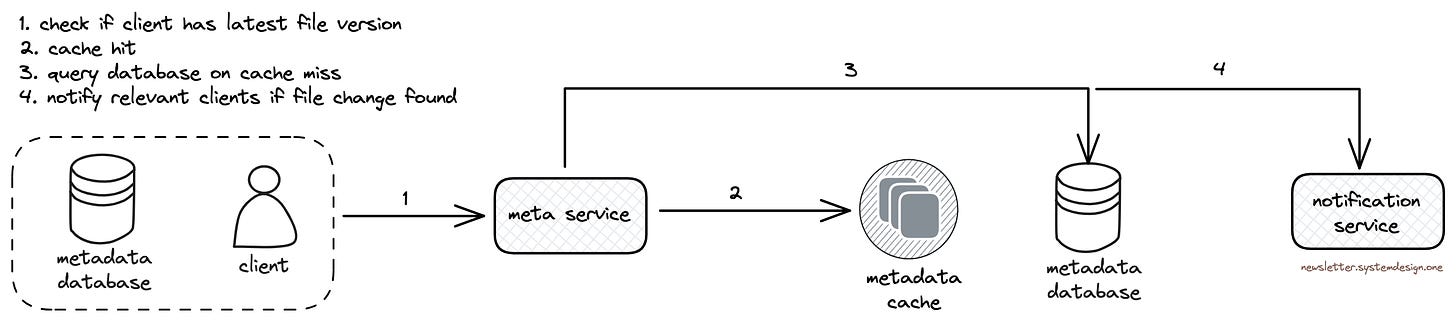

Yet frequent queries to the metadata database could get expensive. So they set up a caching layer with Memcached in front of the database for scalability. It reduces the database load by caching frequently queried data.
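
One common way to put a cache in front of a database is the cache-aside pattern. The sketch below uses plain dictionaries as stand-ins for Memcached and MySQL; the class and its invalidate-on-write policy are my assumptions, not a description of Dropbox's setup.

```python
from typing import Optional


class MetadataReader:
    """Cache-aside reads: check the cache first, fall back to the database on a miss."""

    def __init__(self, database: dict[str, dict]) -> None:
        self.database = database            # stand-in for the MySQL metadata database
        self.cache: dict[str, dict] = {}    # stand-in for Memcached
        self.database_reads = 0             # counts how often the database is queried

    def get(self, file_id: str) -> Optional[dict]:
        if file_id in self.cache:
            return self.cache[file_id]
        self.database_reads += 1
        record = self.database.get(file_id)
        if record is not None:
            self.cache[file_id] = record    # populate the cache for later reads
        return record

    def update(self, file_id: str, record: dict) -> None:
        self.database[file_id] = record
        self.cache.pop(file_id, None)       # invalidate so the next read is fresh
```

Repeated reads of the same file ID reach the database only once until the record changes.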

Put simply, S3 stores the file data while MySQL stores file metadata. They separate upload-download functionality from file synchronizing functionality for better performance.

They run the meta service to synchronize the file metadata between client and server. The meta service queries the metadata database to find if the client that just came online has the latest version of a file. It then broadcasts file changes to the client via the notification service.

They store all file edits in a log on the metadata database, and both the client and server keep a version of the log.
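
To make the log idea concrete, here is a small sketch in which every edit gets an increasing sequence number and a client asks for everything after the last number it has seen. The `EditLog` class and the cursor scheme are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class EditLog:
    """Append-only log of file edits, ordered by sequence number."""

    entries: list[tuple[int, str, str]] = field(default_factory=list)

    def append(self, path: str, action: str) -> int:
        """Record an edit and return its sequence number (starting at 1)."""
        sequence = len(self.entries) + 1
        self.entries.append((sequence, path, action))
        return sequence

    def entries_after(self, cursor: int) -> list[tuple[int, str, str]]:
        """Return the edits a client is missing, given the last sequence it saw."""
        return [entry for entry in self.entries if entry[0] > cursor]
```

A client that was offline since sequence 1 would call `entries_after(1)` and replay the result to catch up.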

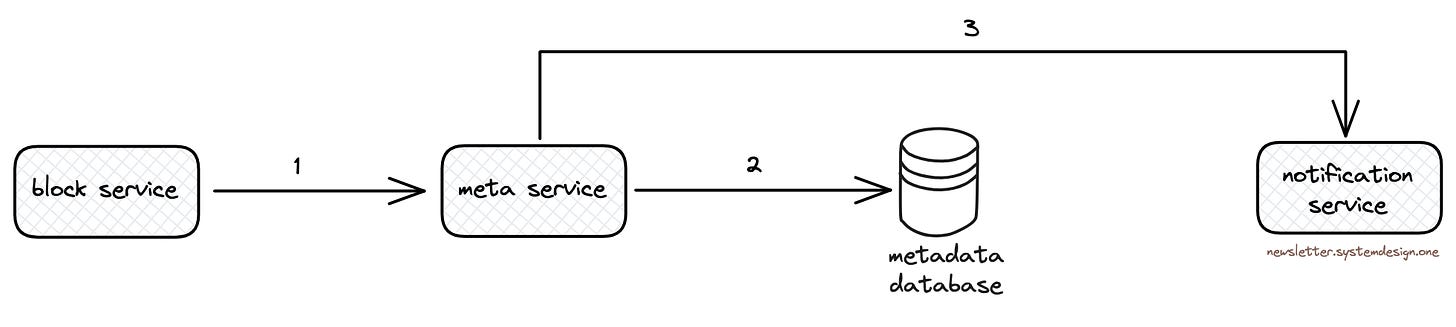

The metadata must be updated after a client uploads file changes through the block service. But the block service doesn't interact with the metadata database directly. Instead it calls the meta service via RPC, and the meta service encapsulates the database queries. Remote Procedure Call (RPC) lets a program execute a procedure on a remote server as if it were a local call.

A file change must be shown to every client watching that file. So they use the notification service to broadcast file changes to clients. They set up the notification service with a 2-level hierarchy for scalability. The client keeps an open connection with the server using Server-Sent Events (SSE), and the server pushes file changes to the client over it.
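
For a feel of what travels over an SSE connection, the sketch below formats one event in the standard `event:` / `data:` text format. The event name and payload fields are made up for illustration.

```python
import json


def format_sse_event(event: str, payload: dict) -> str:
    """Serialize one Server-Sent Event; a blank line marks the end of the event."""
    data = json.dumps(payload, sort_keys=True)  # single-line JSON fits one data field
    return f"event: {event}\ndata: {data}\n\n"
```

The server writes strings like this to the open HTTP response, and the client reads each event as it arrives.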

3. Scalability

They run many instances of the services for high availability and throughput.

They installed a load balancer in front of each service to distribute the load evenly. They also run a hot backup of the load balancer and switch to it if the primary load balancer fails.

They do exponential backoff while reconnecting clients to the server. This means the client waits longer before each retry after a failed attempt. Otherwise many clients would reconnect at once, causing spikes in server load and degraded performance.
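
A minimal sketch of exponential backoff follows. The base delay, the cap, and the random jitter are assumptions on my part; the post only says the delay grows after each failed attempt.

```python
import random
from typing import Optional


def backoff_delays(
    max_attempts: int,
    base_seconds: float = 1.0,
    cap_seconds: float = 60.0,
    jitter: bool = True,
    rng: Optional[random.Random] = None,
) -> list[float]:
    """Return the wait before each reconnect attempt, doubling up to a cap."""
    rng = rng or random.Random()
    delays = []
    for attempt in range(max_attempts):
        ceiling = min(cap_seconds, base_seconds * 2 ** attempt)
        # Jitter spreads clients out so they don't all retry at the same moment.
        delays.append(rng.uniform(0, ceiling) if jitter else ceiling)
    return delays
```

Without jitter, `backoff_delays(5, jitter=False)` gives `[1.0, 2.0, 4.0, 8.0, 16.0]`. With jitter, each wait is a random value up to that ceiling.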

They have since replaced Amazon S3 with an in-house storage system called Magic Pocket. It offers better performance and customization.

And Dropbox remains one of the main market players in the cloud storage industry.
