How Amazon Scaled E-commerce Shopping Cart Data Infrastructure

#41: Break Into Amazon Dynamo White Paper (8 minutes)

Get my system design playbook for FREE on newsletter signup:

This post outlines the Amazon Dynamo white paper. If you want to learn more, scroll to the bottom and find the references.

Share this post & I'll send you some rewards for the referrals.

The holiday season, 2004.

Amazon's e-commerce platform is running the Oracle enterprise database.

They set up clustering and leader-follower replication topology for scalability. Yet the database couldn’t scale well for critical services like the shopping cart. And caused several outages during peak shopping hours with 10+ million requests a day.

They could only scale reads by adding database followers. While they had to vertically scale the database leader to scale writes. That means write operations suffered from a single point of failure.

Yet each customer must be able to view and add items to the shopping cart all the time. Otherwise they will lose money. Also the shopping cart infrastructure should be scalable.

So they did post-mortem debugging. And found that 70% of their operations need only a simple key-value data model. That means a relational database would be inefficient for their use case.

So they modeled the shopping cart as a key-value service. The cart ID is the key while the items in the shopping cart are represented as a list of values.

Also they didn’t want to lose any item added to the shopping cart by customers. In other words, they want extremely high write availability and durability.

So a group of distributed systems experts designed a horizontally scalable distributed database. They created it to scale for both reads and writes.

Amazon Dynamo Architecture

They didn't create new technologies. Instead combined many distributed systems techniques to solve the problems. Here’s how they did it:

1. Partitioning Data

They partitioned the data to solve the write scalability problem. Because each partition could accept writes in parallel.

A simple approach is to partition and sort data. Yet it could create a hot shard problem as some shards might receive more traffic than others.

An alternative approach is regular hashing. The idea is to hash the data keys and do modulo-operation on the result against the number of servers. Thus finding the server to interact with. And it distributes data more uniformly.

But it won't scale while adding or removing servers. Because data associations will break and cause large data movement.

So they use consistent hashing to solve this problem. Think of consistent hashing as a distributed systems technique to reduce data movement while adding or removing servers.

Consistent hashing treats the output range of the hash function as a ring. That means the largest hash value wraps around to the smallest hash value. And each server gets a random position on the hash ring.

They find the position of a server by hashing the server ID. And doing a modulo-operation on that result against the number of available positions on the hash ring.

While they add a data item to the server by hashing its key. And doing a modulo-operation on that result against the number of available positions on the hash ring. After that, they traverse the ring in a clockwise direction to find the responsible server.

Put another way, a server is responsible for data that falls in the region between it and its predecessor server on the hash ring.

In the example above, the data key falls between 75 and 100 range. So the server at position 100 stores the data.

Besides they store the server positions in a sorted data structure. So a server could be found in logarithmic time complexity.

The data movement is low when a new server gets added to the ring. Because they move only the data in the range that falls between the new server and the preceding server. Thus making it easier to scale.

Life was better.

Until one day when they noticed an unbalanced load across the servers.

So they introduced virtual nodes. It’s a variant of consistent hashing.

Virtual nodes mean a server position in the hash ring doesn’t map only to a single physical server. Instead it could contain many physical servers. Thus reducing the risk of hot shards and making it easy to include heterogeneous servers.

2. Ensuring Data Durability

But one fine day a server fails and they lose some data.

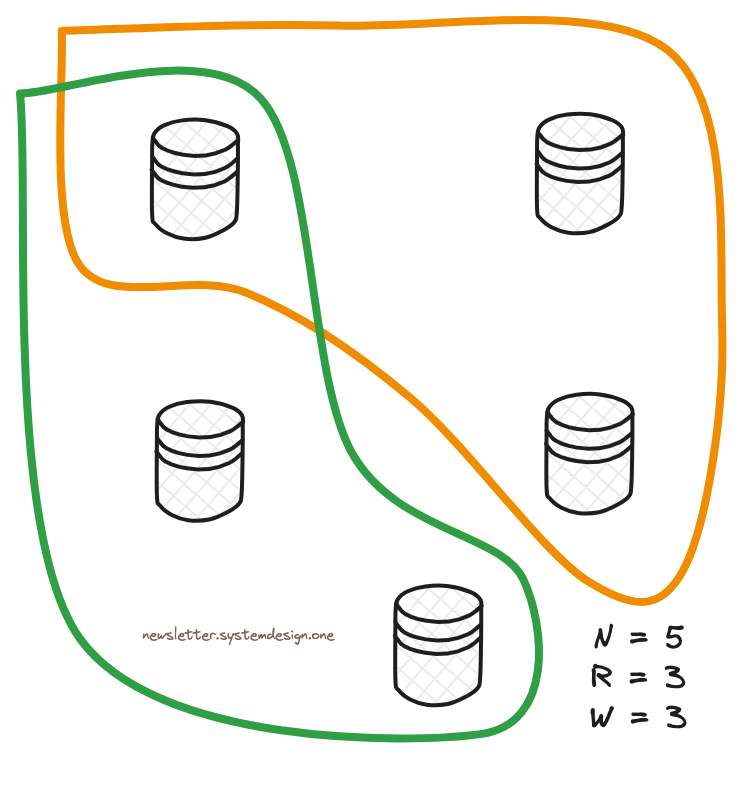

So they replicate data asynchronously across N number of servers for durability. That means they store a data item in the next server on the ring. And also in the next (N-1) servers. While N is the replication factor.

Yet they need data consistency.

So they use sloppy quorum.

At least a single server will have the latest data using sloppy quorum.

And they consider the system consistent if it satisfies the equation: R+W > N.

R represents the read quorum. That means the number of servers that must reply to consider a read operation successful.

While W represents the write quorum. That means the number of servers that must reply to consider a write operation successful.

So the set of servers in reads and writes will overlap considering the equation and the system will return the latest data.

Life was good again.

3. Resolving Data Conflicts

Yet one day concurrent writes with sloppy quorum caused conflict on a data item.

They could resolve the conflict by applying the last write.

A simple solution to find the last write is to use the system clock - Network Time Protocol (NTP). But clock skew is normal in distributed systems, thus it's unreliable. That means different clocks run at different rates.

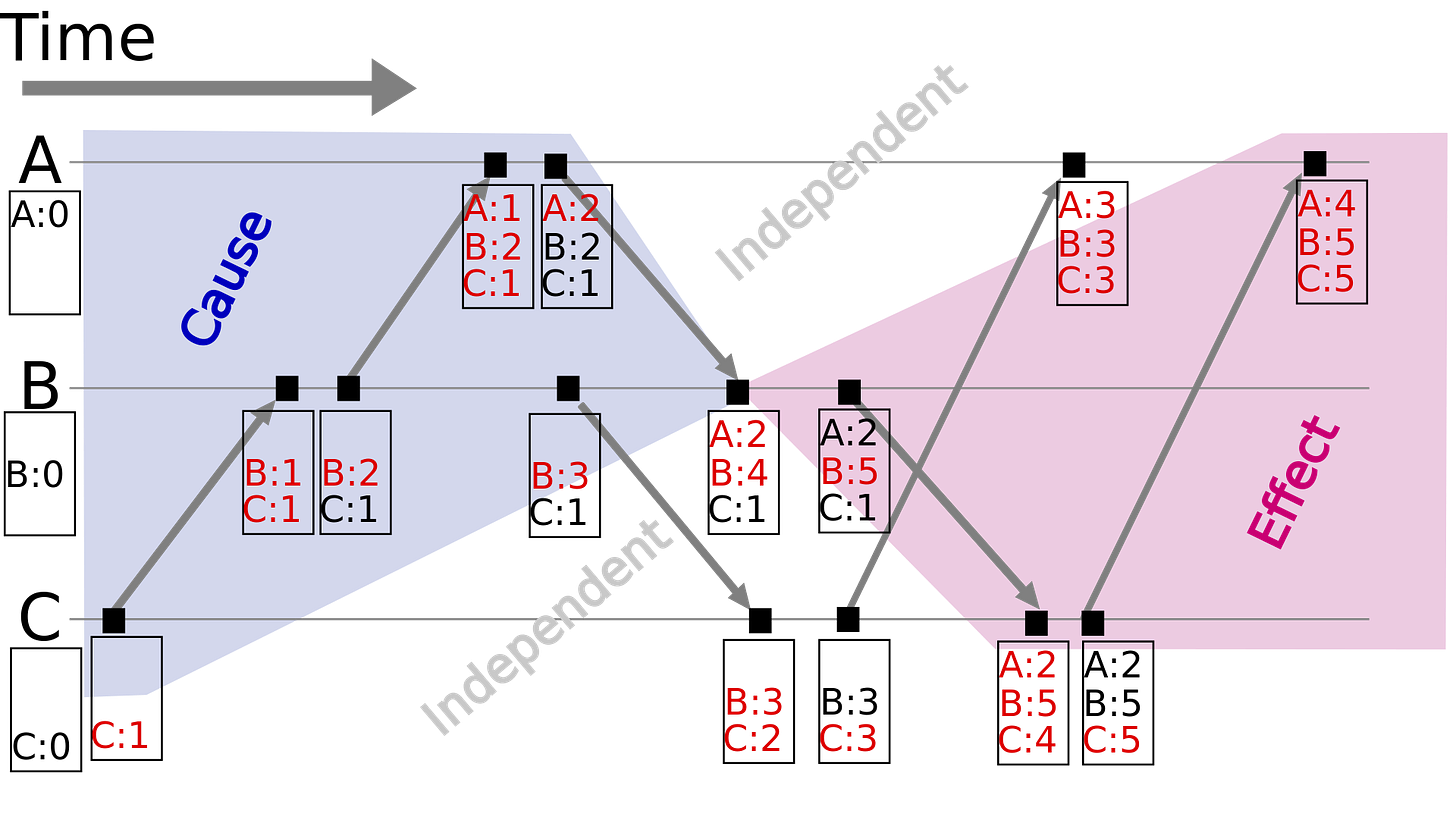

So they use vector clocks to find data version history and merge them during reads. In other words, they use vector clocks to find causality between versions.

The different data versions get automatically merged if there are no conflicts. Otherwise the client logic must resolve the conflicts.

The idea behind vector clocks is simple. Each server maintains a vector of integers and the integers start at zero. The server increments its integer whenever an event gets sent.

While the receiver finds the maximum between its integer and the received value. So event A occurred before event B if all integers in A are lesser than those of event B.

Also the whole vector gets sent along with an event. That means vector clocks are passed between the system and the client.

They have solved another problem.

4. Handling Temporary Failures

Imagine their write quorum is 3. That means 3 servers must reply to consider a write operation successful.

Yet one morning, one of those servers was temporarily down.

So they use hinted handoff. It stores the data temporarily in the next healthy server. That means instead of the next N servers, it picks the next N healthy servers.

While the temporary server sends the data once the failed server is back online. Thus offering high write availability.

And life was good.

5. Handling Permanent Failures

Until one day when the server storing temporary data from hinted handoff also crashed.

And it happened before sending temporary data to the server that was back online after a failure.

So they use Merkle trees to synchronize data between servers in the background.

Think of it as a data structure that allows finding data differences efficiently.

They find data differences by comparing the hash value of the root in the Merkle trees. After that, they check the children nodes if the parent nodes aren’t the same. Thus in the worst case, they need logarithmic time complexity to transfer data.

Things are looking better.

6. Detecting Server Membership

But one day they add more servers to scale the system.

And it was important to find the available servers in the system. Yet a centralized approach using heartbeats isn’t scalable.

So they use gossip protocol to track the system state. It finds the servers by pinging random servers periodically.

While each server transfers its entire membership list to a set of servers on each epoch. And a server is considered dead if it’s unavailable for a specific number of epochs.

Gossip protocol is a decentralized approach to service discovery and failure detection. Also the system would reach eventual consistency in logarithmic time with gossip protocol.

It wasn't a perfect system. But they chose the tradeoffs carefully to solve problems.

3 years later…

They published the results in a white paper and called it Amazon Dynamo.

It laid the foundation for many NoSQL databases.

And distributed databases like Apache Cassandra, Riak, and Amazon DynamoDB are based on Amazon Dynamo principles.

Consider subscribing to get simplified case studies delivered straight to your inbox:

Thank you for supporting this newsletter. Consider sharing this post with your friends and get rewards. Y’all are the best.

For the merkle tree, is tree designed such all data blocks are on the leafs?

If yes, then i assume any difference between 2 data blocks will cause all parent hashes to be different. So when searching from root downwards, we also to go all the way from root to the leafs. There won't be a case of the search stopping somewhere in the middle right?

"Thus in the worst case, they need logarithmic time complexity to transfer data."

So i don't understand why is it a worst case. I thought it is always the case that we have to search until leafs, which are where the data blocks exist, and anything above leaf level are meaningless hashes

Also could you clarify statements in section 4+5?

"While the temporary server sends the data once the failed server is back online. Thus offering high write availability."

Temporary server send data to where? The failed server after it's recovered?

" it happened before sending temporary data to the server that was back online after a failure."

Which server is sending to which server?

- Failed (before failing) to temporary?

- Temporary to Failed (after recovering)?