How Amazon S3 Achieves 99.999999999% Durability

#38: Break Into Incredible Amazon Engineering (7 minutes)

This post outlines how Amazon S3 provides a mind-boggling level of durability. If you want to learn more, scroll to the bottom and find the references.

November 2010 - Paris, France.

A young software engineer named Julia works at a pharmaceutical startup.

They create many log files each day.

And durability is important to their business model.

Yet they stored the data in a tiny in-house server room.

Life was good.

And seasons passed.

But one day.

The hard disk of their main data server crashed.

And ruined it all.

Julia noticed that the log files of a main customer couldn't be found.

So she was sad.

Luckily they had a backup server.

But she knew that they were looking for trouble with the current setup.

So she searched the internet for a cheap and durable storage solution.

And read about Amazon S3.

She was dazzled by its durability numbers.

Amazon Simple Storage Service (S3) is an object storage service.

It stores unstructured data without hierarchy.

Amazon S3 Durability

Amazon S3 is designed for 11 nines of durability - 99.999999999% - over a given year.

Put another way, a single data object out of 10 thousand objects might get lost in 10 million years.
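The arithmetic behind that statement can be checked in a few lines. This is a minimal sketch, assuming each object is lost independently and at a constant annual rate; exact fractions are used to avoid floating-point rounding.

```python
from fractions import Fraction

# Annual probability of losing one object at 11 nines of durability.
annual_loss_probability = Fraction(1, 10**11)
durability = 1 - annual_loss_probability  # 0.99999999999

objects_stored = 10_000  # the example count from the text

# Expected number of objects lost per year across the whole set
# (assumption: independent losses at a constant rate).
expected_losses_per_year = objects_stored * annual_loss_probability

# Average number of years between single-object losses.
years_per_loss = 1 / expected_losses_per_year

print(f"{int(years_per_loss):,} years")  # prints "10,000,000 years"
```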

Durability is about the prevention of data loss.

And here’s how Amazon S3 provides extreme durability:

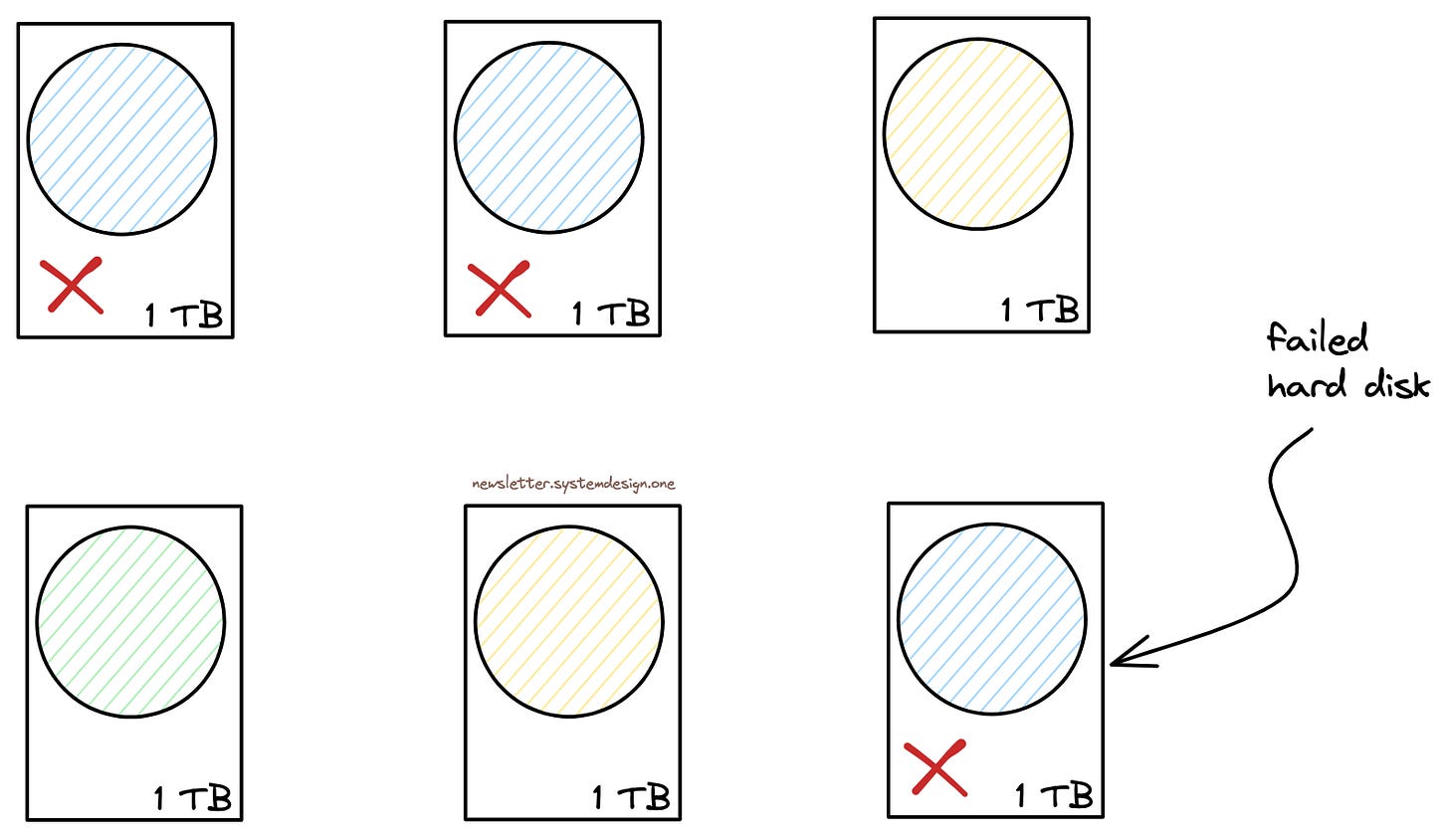

1. Data Redundancy

Mechanical hard disks provide large storage at small costs.

So they're still widely used in the cloud.

Yet they fail often.

And it could happen due to a physical shock or electrical failure.

A solution is to replicate the data across many hard disks.

So data could be recovered from another disk if a failure occurs.

Put another way, replication improves durability.

But there's still a risk of data loss if all the hard disks that store a specific data object fail.

So they use erasure coding to reduce the probability of data loss.

It's a redundancy technique that needs less extra storage than keeping full copies.

Erasure coding splits a data object into chunks called data shards.

And creates extra chunks called parity shards.

While the original data object can be recreated from a subset of the shards.

They store the erasure-coded shards across many hard disks.

Thus reducing the probability of data loss.

Put another way, flexible replication at many levels improves durability.

In the above figure, shards are shown in different colors.
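Here's a toy sketch of the idea, not S3's actual scheme. It assumes the simplest possible erasure code: `k` data shards plus a single XOR parity shard, which can rebuild any one lost shard. Production systems typically use stronger codes that survive several lost shards. The function names are made up for illustration.

```python
from functools import reduce
from typing import List, Optional

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal data shards and append one XOR parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, shards)
    return shards + [parity]

def recover(shards: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing shard (marked None) by XOR-ing the rest."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can only repair one lost shard")
    repaired = list(shards)
    if missing:
        present = [s for s in shards if s is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return repaired

def decode(shards: List[Optional[bytes]], original_len: int) -> bytes:
    """Recreate the object from the data shards, dropping parity and padding."""
    data_shards = recover(shards)[:-1]
    return b"".join(data_shards)[:original_len]

log_line = b"customer log line 42"
stored = encode(log_line, k=4)   # 4 data shards + 1 parity shard
stored[2] = None                 # simulate a failed hard disk
print(decode(stored, len(log_line)) == log_line)
```

The object survives the loss of any single shard, yet the extra storage is one shard instead of a full second copy.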

They run background processes to monitor the health of storage devices.

And quickly replace a failed device to re-replicate its data.

Besides they don't keep empty spare storage devices.

Instead they keep some free space on each storage device.

Thus many storage devices could participate in recovery.

And provide high recovery throughput through parallelization.
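A back-of-the-envelope sketch shows why parallel recovery matters. All numbers here are hypothetical (a 20 TB failed disk, 100 MB/s of spare throughput per device) and the function name is made up for illustration.

```python
def recovery_hours(failed_tb: float, per_device_mb_s: float, devices: int) -> float:
    """Hours needed to rebuild the failed data when `devices` disks share the work."""
    total_mb = failed_tb * 1_000_000          # decimal terabytes to megabytes
    seconds = total_mb / (per_device_mb_s * devices)
    return seconds / 3600

# Hypothetical numbers: one dedicated spare disk vs. 100 disks with free space.
print(f"1 device:    {recovery_hours(20, 100, 1):.1f} hours")
print(f"100 devices: {recovery_hours(20, 100, 100):.2f} hours")
```

Under these assumptions, recovery time shrinks in proportion to the number of devices that take part.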

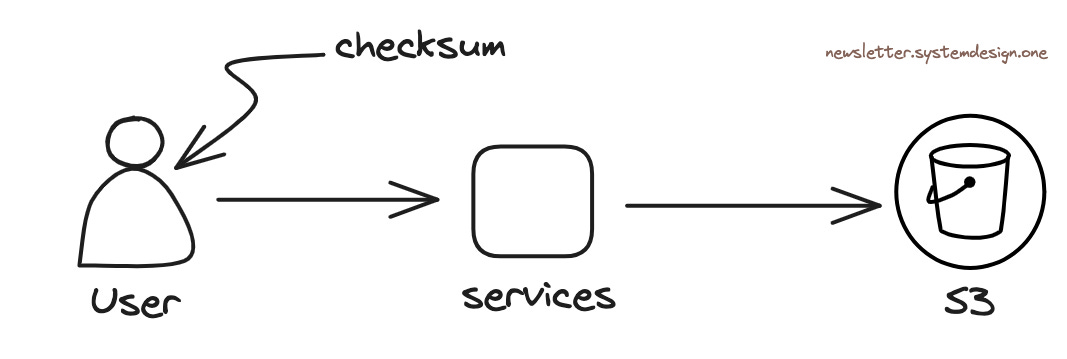

2. Data Integrity

They send data from a user through many services before storing it in S3.

Yet there's a risk of data corruption due to bit flips in network devices.

A bit flip is an unwanted change of a bit from 0 to 1, or vice versa.

And it could happen due to noise in the communication channel or a hardware failure.

Also TCP's 16-bit checksum is too weak to catch every bit flip.

And it doesn't cover data corrupted in memory while a service handles it.

So they add checksums to data using the AWS client SDK.

And it helps them to detect corrupted data on its arrival at S3.

Put another way, they do data integrity checks using checksums.

Imagine a checksum as a fingerprint of the data object.

So 2 different data objects are very unlikely to have the same checksum.

And they use checksum algorithms like CRC32C and SHA-1 for performance.
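Here's a small sketch of a checksum catching a bit flip. It uses Python's standard library, which ships plain CRC-32 (`zlib.crc32`) but not CRC32C, so CRC-32 stands in here. The payload and the `flip_bit` helper are made up for illustration.

```python
import hashlib
import zlib

def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with exactly one bit inverted."""
    corrupted = bytearray(data)
    corrupted[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(corrupted)

payload = b"2010-11-03 12:00:01 batch=17 status=ok"

# Fingerprints computed by the sender before the data leaves the client.
sent_crc = zlib.crc32(payload)
sent_sha1 = hashlib.sha1(payload).hexdigest()

# Simulate a single bit flip somewhere along the way.
received = flip_bit(payload, bit_index=5)

# The receiver recomputes the fingerprints and compares them.
crc_ok = zlib.crc32(received) == sent_crc
sha1_ok = hashlib.sha1(received).hexdigest() == sent_sha1
print("corruption detected:", not (crc_ok and sha1_ok))
```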

Besides they use the HTTP trailer to send the checksum.

Because it allows sending extra data at the end of chunked data.

Thus the sender avoids scanning the data twice: once to compute the checksum and once to send it.

And data integrity checks stay practical at scale.
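The single-pass idea can be sketched like this. It assumes plain HTTP/1.1 chunked framing and a CRC-32 checksum; the real S3 wire format differs in its details. The trailer name mirrors S3's checksum header naming, and the function name is made up for illustration.

```python
import base64
import io
import zlib
from typing import BinaryIO, Iterator

def chunked_body_with_trailer(stream: BinaryIO, chunk_size: int = 8) -> Iterator[bytes]:
    """Yield an HTTP/1.1 chunked body, then a checksum trailer, in one pass."""
    crc = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        # Update the running checksum while the chunk is being sent,
        # so the data is read only once.
        crc = zlib.crc32(chunk, crc)
        yield b"%x\r\n" % len(chunk) + chunk + b"\r\n"
    # The checksum is known only now, so it travels after the data.
    digest = base64.b64encode(crc.to_bytes(4, "big"))
    yield b"0\r\n"
    yield b"x-amz-checksum-crc32: " + digest + b"\r\n\r\n"

body = b"".join(chunked_body_with_trailer(io.BytesIO(b"hello durable world")))
print(body)
```

A checksum sent in a header would have to be computed before the first byte goes out, which means reading the whole object once just for that.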

They erasure code the data before storing it in S3.

And add extra checksums to erasure-coded shards.

Because it allows data integrity checks during reads.

While S3 calculates around 4 billion checksums per second.

Besides they do bracketing before returning a successful response to the user.

Bracketing is the process of reversing the entire set of transformations on the data.

It ensures that a data object can be recreated from individual shards.

Put another way, it validates stored data against uploaded data.
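Bracketing can be sketched as a write path that undoes its own work before reporting success. This is a simplified illustration: plain splitting stands in for erasure coding, SHA-256 stands in for whichever checksum is in use, and all function names are hypothetical.

```python
import hashlib
from typing import List, Tuple

Shard = Tuple[bytes, str]  # (shard bytes, hex checksum of that shard)

def to_shards(data: bytes, shard_size: int) -> List[Shard]:
    """Forward transformation: split the object and checksum each shard."""
    pieces = [data[i:i + shard_size] for i in range(0, len(data), shard_size)]
    return [(p, hashlib.sha256(p).hexdigest()) for p in pieces]

def from_shards(shards: List[Shard]) -> bytes:
    """Reverse transformation: verify each shard, then reassemble the object."""
    for piece, digest in shards:
        if hashlib.sha256(piece).hexdigest() != digest:
            raise ValueError("shard failed its checksum")
    return b"".join(piece for piece, _ in shards)

def put_object(data: bytes, upload_checksum: str, shard_size: int = 4) -> List[Shard]:
    """Store the object, but report success only if it can be recreated."""
    shards = to_shards(data, shard_size)
    rebuilt = from_shards(shards)  # reverse every transformation
    if hashlib.sha256(rebuilt).hexdigest() != upload_checksum:
        raise ValueError("bracketing failed: stored data does not match upload")
    return shards  # only now would a success response go back to the user

data = b"audit me please"
stored = put_object(data, hashlib.sha256(data).hexdigest())
print(len(stored), "shards stored and verified")
```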

3. Data Audit

A failed hard disk should be replaced fast.

Also data must be re-replicated for durability.

So they match the repair rate of hard disks with their failure rate.

Yet the failure rate isn’t always predictable. Because it could be influenced by weather or power outages.

So they run a separate service to detect hard disk failures.

And scale the repair service accordingly.

Also bit flips occur on hard disks.

And could corrupt a specific data sector.

Put another way, the storage device may be functional but a specific data sector won’t be readable.

So they periodically scan the storage device to check data integrity.

And use checksums stored with erasure-coded shards to audit the data.

Besides they re-replicate the data if a bad sector is found.
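A periodic audit could look roughly like the sketch below. It assumes each shard is stored next to its CRC-32 checksum, and a healthy copy stands in for rebuilding the shard from the other erasure-coded shards. The `scrub` and `repair` names and the in-memory "device" are made up for illustration.

```python
import zlib
from typing import Dict, List, Tuple

Device = Dict[str, Tuple[bytes, int]]  # shard ID -> (shard bytes, stored CRC-32)

def scrub(device: Device) -> List[str]:
    """Return IDs of shards whose bytes no longer match their stored checksum."""
    return [sid for sid, (data, crc) in device.items() if zlib.crc32(data) != crc]

def repair(device: Device, bad_ids: List[str], healthy_copy: Dict[str, bytes]) -> None:
    """Rewrite damaged shards from a healthy source and refresh their checksums."""
    for sid in bad_ids:
        data = healthy_copy[sid]
        device[sid] = (data, zlib.crc32(data))

source = {"shard-1": b"alpha", "shard-2": b"bravo"}
device = {sid: (data, zlib.crc32(data)) for sid, data in source.items()}

# Simulate a bad sector: the bytes change but the stored checksum does not.
device["shard-2"] = (b"bravx", device["shard-2"][1])

damaged = scrub(device)
print("damaged:", damaged)
repair(device, damaged, source)
print("after repair:", scrub(device))
```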

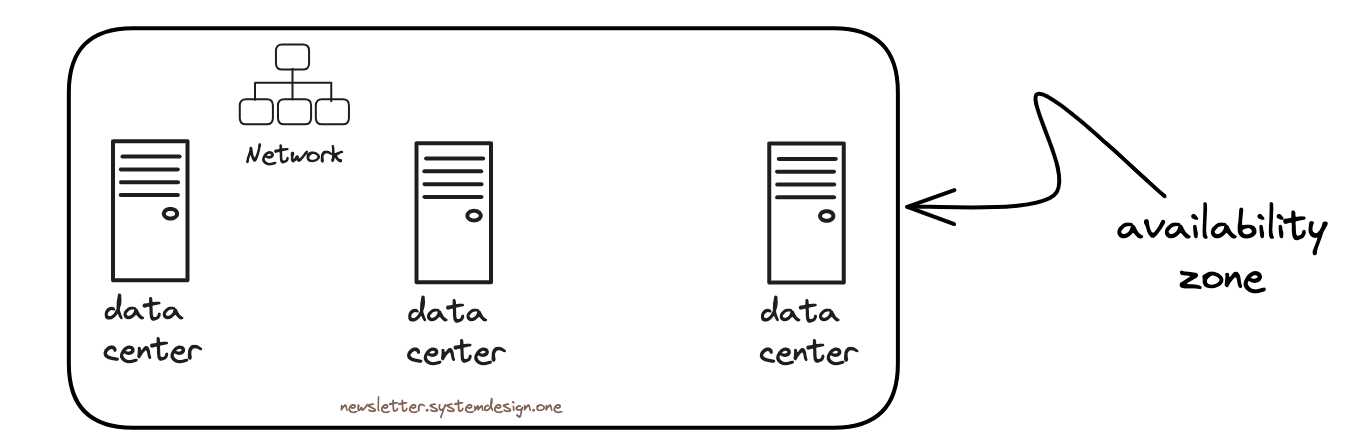

4. Data Isolation

They store metadata and file content separately in S3.

The metadata gets stored in a database.

While file content gets stored as chunks in massive arrays of hard disks.

Also they run physically separated data centers within an availability zone.

Put another way, an availability zone is a group of isolated data centers.

And each availability zone gets a separate networking infrastructure and power supply.

Thus spreading data across availability zones reduces the risk of simultaneous failures and improves durability.

Yet there’s a risk of data loss due to user mistakes.

For example, a user could accidentally delete or overwrite a data object.

So they build guardrails into the software to avoid such situations.

They provide versioning in S3 to track data object changes.

And every change to an object will get a version ID.

Also a delete on a versioned object adds a delete marker instead of removing the data.

Because it hides the object while keeping earlier versions recoverable.

Thus avoiding accidental overwriting or deletion of a data object.
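The behavior can be modeled with a toy in-memory bucket. This is only a sketch of the concept: the `VersionedBucket` class is hypothetical, and real S3 version IDs are opaque strings rather than `v1`, `v2`.

```python
import itertools
from typing import Dict, List, Optional, Tuple

DELETE_MARKER = object()  # sentinel that hides an object without erasing it

class VersionedBucket:
    def __init__(self) -> None:
        self._versions: Dict[str, List[Tuple[str, object]]] = {}
        self._ids = itertools.count(1)

    def _append(self, key: str, value: object) -> str:
        version_id = f"v{next(self._ids)}"
        self._versions.setdefault(key, []).append((version_id, value))
        return version_id

    def put(self, key: str, data: bytes) -> str:
        """Every write adds a new version; nothing is overwritten in place."""
        return self._append(key, data)

    def delete(self, key: str) -> str:
        """A delete only stacks a delete marker on top of the history."""
        return self._append(key, DELETE_MARKER)

    def get(self, key: str, version_id: Optional[str] = None) -> Optional[bytes]:
        history = self._versions.get(key, [])
        if version_id is None:
            # Latest view: hidden if the newest entry is a delete marker.
            if not history or history[-1][1] is DELETE_MARKER:
                return None
            return history[-1][1]
        for vid, value in history:
            if vid == version_id and value is not DELETE_MARKER:
                return value
        return None

bucket = VersionedBucket()
first = bucket.put("customer.log", b"original")
bucket.put("customer.log", b"overwritten by mistake")
bucket.delete("customer.log")
print(bucket.get("customer.log"))         # hidden by the delete marker
print(bucket.get("customer.log", first))  # the original is still there
```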

Besides they support object lock in S3 to avoid user mistakes.

It prevents permanent object deletion during a specified period.

Also they offer point-in-time backups for durability.

5. Engineering Culture

They deploy a change only after a durability review.

Because it forces them to think about possible cases and simplify design decisions.

Put another way, durability reviews help them understand the risks in software design.

They keep a written document for durability reviews.

Also a threat model with a list of things that could go wrong.

Thus creating an engineering culture that constantly thinks about durability.

Besides they use proven mathematical models to calculate durability.

They made durability a part of the architecture and their engineering culture.

Besides they are extremely focused on durability fundamentals.

And consider durability as an ongoing process.

Great walkthrough. I really like the visuals and the way you structure your sentences to have breaks between them.

Just reading between the lines, the way they do data redundancy seems costly. I know they say it's a cheap solution, but do you have any insights on how much it costs to reach such high durability this way?

"Besides they use the HTTP trailer to send checksum.

Because it allows sending extra data at the end of chunked data.

Thus avoiding the need to scan data twice and check data integrity at scale."

What do we mean by 'avoiding the need to scan data twice'? Why do we need to scan data twice to calculate a checksum? I don't quite get it. Can someone explain please?