How Meta Achieves 99.99999999% Cache Consistency 🎯

#52: Break Into Meta Engineering (4 minutes)


A fundamental way to scale a distributed system is to avoid coordination between components.

A cache avoids the coordination needed to access the database. So the correctness of cached data is important for scalability.


Once upon a time, Facebook ran a simple tech stack - PHP & MySQL.

But as more users joined, they faced scalability problems.

So they set up a distributed cache¹.

Although it temporarily solved their scalability issue, maintaining fresh cache data became difficult. Here’s a common race condition:

The client queries the cache for a value that is not in it (a cache miss)

So the cache queries the database for the value and reads x = 0

In the meantime, the value in the database changes: x = 1

The cache invalidation event for that write reaches the cache first: x = 1

Then the stale value from the cache fill reaches the cache: x = 0

Now database: x = 1, while cache: x = 0. So there’s cache inconsistency.
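The race above can be replayed in a few lines. This is only a toy sketch: the dictionaries and the `simulate_race` function are hypothetical stand-ins, and it assumes the invalidation event carries the new value, as the x = 1 in that step suggests.

```python
def simulate_race():
    """Replay the race step by step with plain dicts (hypothetical model)."""
    database = {"x": 0}
    cache = {}

    # Cache miss: the cache reads the database, but the fill is still in flight.
    in_flight_fill = database["x"]  # reads x = 0

    # A write changes the value in the database.
    database["x"] = 1

    # The invalidation event for that write reaches the cache first.
    cache["x"] = 1

    # The delayed cache fill arrives last and overwrites the fresh value.
    cache["x"] = in_flight_fill

    return database["x"], cache["x"]


db_value, cache_value = simulate_race()
print(db_value, cache_value)  # prints: 1 0
```

The last write to the cache wins, so the ordering of the two messages decides whether the cache ends up stale.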

Yet their growth rate was explosive. And Facebook became the third most visited site in the world.

Now they serve a quadrillion (10¹⁵) requests per day. So even a 1% cache miss rate is expensive - 1% of 10¹⁵ is 10¹³, or 10 trillion cache fills² a day.

This post outlines how Meta uses observability to improve cache consistency. It covers how they find when the cache needs invalidating, not how the invalidation itself works. You will find references at the bottom of this page if you want to go deeper.

This case study assumes a simple data model in which the database & cache are aware of each other.

Cache Consistency

Cache inconsistency feels like data loss from a user’s perspective.

So they created an observability solution.

And here’s how they did it:

1. Monitoring 📈

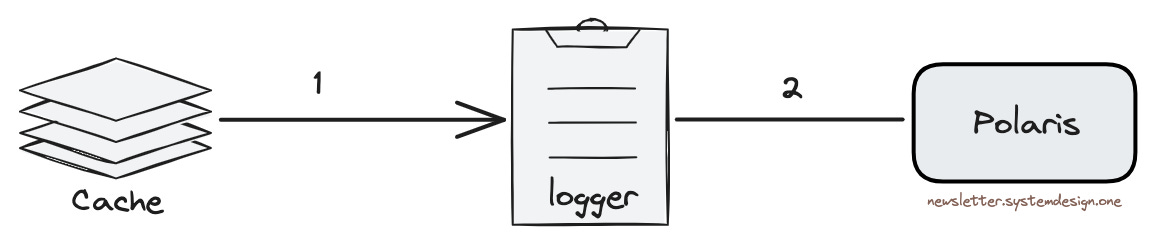

They created a separate service to monitor cache inconsistency & called it Polaris.

Here’s how Polaris works:

It acts like a cache server & receives cache invalidation events

Then it queries cache servers to find data inconsistency

It queues inconsistent cache servers & checks again later

It checks data correctness during writes, so finding cache inconsistency is faster

Simply put, it measures cache inconsistency

Besides, there’s a risk of a network partition between the distributed cache & Polaris. So they use a separate invalidation event stream between the client & Polaris.
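The Polaris steps above can be sketched roughly as follows. This is a minimal illustration, not Meta's implementation: `PolarisSketch`, its method names, and the dict-based cache servers are all hypothetical, and it assumes each invalidation event carries the value the caches should now hold.

```python
from collections import deque


class PolarisSketch:
    """Toy monitor: receives invalidations, then checks each cache server."""

    def __init__(self, cache_servers):
        # cache_servers maps a server name to a dict standing in for its store.
        self.cache_servers = cache_servers
        self.recheck_queue = deque()

    def on_invalidation(self, key, expected_value):
        """Query every cache server and queue the ones that disagree."""
        for name, store in self.cache_servers.items():
            # A missing key is not stale data; the next read will refill it.
            if key in store and store[key] != expected_value:
                self.recheck_queue.append((name, key, expected_value))

    def recheck(self):
        """Check queued servers again; return those still inconsistent."""
        still_inconsistent = []
        for _ in range(len(self.recheck_queue)):
            name, key, expected_value = self.recheck_queue.popleft()
            store = self.cache_servers[name]
            if key in store and store[key] != expected_value:
                still_inconsistent.append((name, key))
        return still_inconsistent


servers = {"cache-a": {"x": 1}, "cache-b": {"x": 0}, "cache-c": {}}
polaris = PolarisSketch(servers)
polaris.on_invalidation("x", 1)
print(list(polaris.recheck_queue))  # [('cache-b', 'x', 1)]
print(polaris.recheck())            # [('cache-b', 'x')]
```

Queueing a server instead of flagging it right away gives a slow but correct invalidation time to land before the entry is counted as inconsistent.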

A simple fix for cache inconsistency is to query the database.

But there’s a risk of database overload at high scale. So Polaris queries the database only at timescales of 1, 5, or 10 minutes. It lets them back off efficiently & improve accuracy.
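One way to picture the timescale-based backoff is a check that only allows a database query once an entry has stayed inconsistent past the next threshold. The source only gives the 1, 5, and 10 minute timescales; the scheduling logic and the names `TIMESCALES_SECONDS` and `next_due_timescale` below are assumptions made for illustration.

```python
# Timescales from the post: 1, 5, and 10 minutes, expressed in seconds.
TIMESCALES_SECONDS = (60, 300, 600)


def next_due_timescale(inconsistent_for_seconds, already_checked):
    """Return the smallest unchecked timescale the entry has crossed, or None.

    Hypothetical helper: the database is queried only when this returns a
    timescale, so short-lived inconsistencies never reach the database.
    """
    for timescale in TIMESCALES_SECONDS:
        if timescale not in already_checked and inconsistent_for_seconds >= timescale:
            return timescale
    return None


print(next_due_timescale(30, set()))   # None (not yet 1 minute old)
print(next_due_timescale(90, set()))   # 60
print(next_due_timescale(90, {60}))    # None (wait for the 5 minute mark)
print(next_due_timescale(700, {60}))   # 300
```

Most inconsistencies resolve before the first threshold, so under this sketch only the persistent ones cost a database read.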

2. Tracing 🔎

Debugging a distributed cache without logs is hard.

And they wanted to find out why each cache inconsistency occurs. Yet logging every data change isn’t scalable: logging is write-heavy, while the cache serves a read-heavy workload. So they created a tracing library & embedded it on each cache server.

Here’s how it works: