Concurrency Is Not Parallelism 🔥

#75: Break Into Concurrency vs Parallelism (3 Minutes)

This post outlines the differences between concurrency and parallelism. You will find references at the bottom of this page if you want to go deeper.

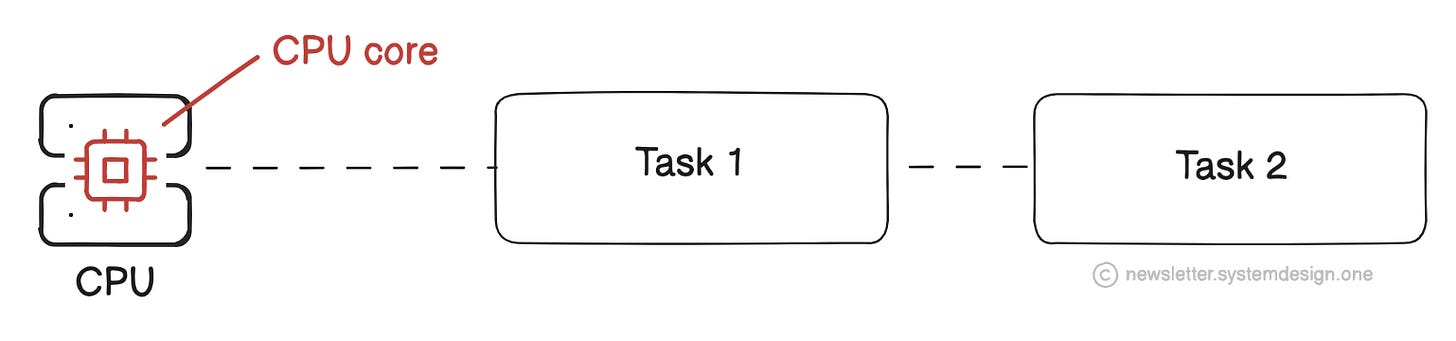

Once upon a time, computers had a single CPU and could run only one task at a time.

Still, it completed tasks faster than humans could.

Yet the system wasted CPU time waiting for input-output (I/O) responses, such as keyboard input.

And the system became slow and non-responsive as the number of tasks grew.

So engineers introduced a scheduler and broke each task down into smaller parts.

The scheduler gives each task a fixed slice of CPU time and then moves on to the next one. Put simply, the CPU runs those small parts in an interleaved order.
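To make the interleaving concrete, here is a minimal sketch of a round-robin scheduler. It assumes each task is a Python generator that yields after every small part; the names `task` and `round_robin` are made up for illustration, and a real operating system scheduler is far more involved.

```python
from collections import deque

def task(name, steps):
    """A hypothetical task split into `steps` small parts."""
    for i in range(1, steps + 1):
        yield f"{name}{i}"  # one small part of the task is done

def round_robin(tasks):
    """Run one part of each task in turn on a single pretend CPU."""
    queue = deque(tasks)
    order = []
    while queue:
        current = queue.popleft()
        try:
            order.append(next(current))  # run one time slice
            queue.append(current)        # send the task to the back of the line
        except StopIteration:
            pass                         # task finished, so drop it
    return order

print(round_robin([task("A", 3), task("B", 2), task("C", 1)]))
# ['A1', 'B1', 'C1', 'A2', 'B2', 'A3']
```

Only one part runs at any moment, yet all three tasks make progress over the same period.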

The technique of switching between tasks so that they all make progress over the same period is called concurrency.

But on a single CPU, concurrency only creates an illusion of parallel execution.

Performance worsens as the number of tasks grows, because each task has to wait longer for its turn.

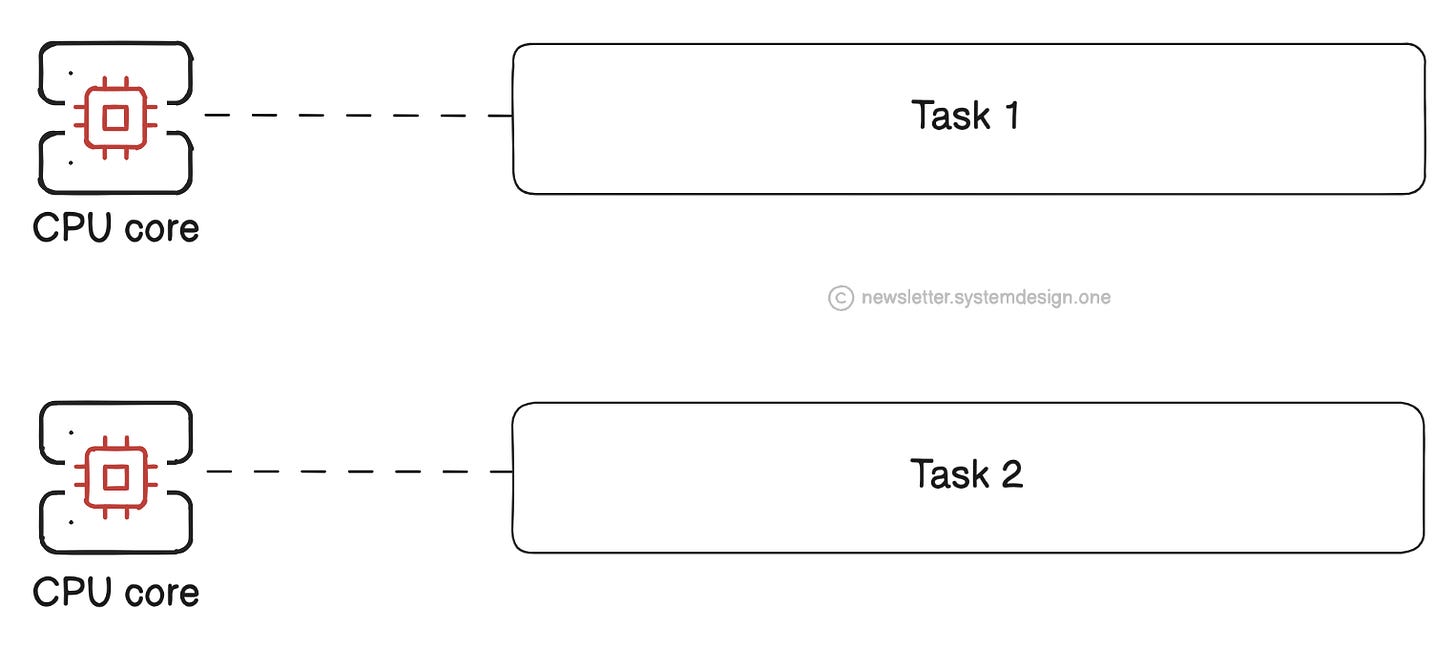

So engineers added extra CPU cores, and each core could run a task independently.

Think of the CPU core as a processing unit within the CPU; it handles instructions.

The technique of running many tasks at the same instant is called parallelism. It shortens the total run time when the work can be split across cores.
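As a rough sketch of parallelism, the example below splits CPU-bound work across worker processes using Python's standard `concurrent.futures.ProcessPoolExecutor`. The prime-counting workload, the helper names `count_primes` and `split_range`, and the choice of 4 workers are all assumptions made for illustration.

```python
from concurrent.futures import ProcessPoolExecutor
from math import isqrt

def count_primes(bounds):
    """CPU-bound work: count the primes in the half-open range [start, stop)."""
    start, stop = bounds
    count = 0
    for n in range(max(start, 2), stop):
        if all(n % d for d in range(2, isqrt(n) + 1)):
            count += 1
    return count

def split_range(stop, parts):
    """Split [0, stop) into at most `parts` contiguous chunks."""
    step = max(1, -(-stop // parts))  # ceiling division, at least 1
    return [(lo, min(lo + step, stop)) for lo in range(0, stop, step)]

if __name__ == "__main__":
    chunks = split_range(200_000, 4)
    # Each chunk can run on its own core at the same instant as the others.
    with ProcessPoolExecutor(max_workers=4) as pool:
        total = sum(pool.map(count_primes, chunks))
    print(total)
```

The speedup depends on how many cores are actually free; with a single core, the worker processes simply take turns.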

Onward.

Concurrency Is Not Parallelism

Concurrency is about the design, while parallelism is about the execution model.

Let’s dive in:

1. Concurrency vs Parallelism

Imagine concurrency as a chef making doner kebab for 3 customers. He switches between fries, meat, and vegetables.

And consider parallelism as 3 chefs making doner kebab for 3 customers; all done at the same time. [1]

CPU-intensive tasks run efficiently with parallelism.

Yet parallelism alone wastes CPU time on I/O-heavy tasks, since a core sits idle while its task waits for a response.

Also, running tasks on different CPUs doesn’t always speed them up, because the tasks still have to be coordinated, and that coordination adds overhead.

So combine concurrency with parallelism to build efficient, scalable, and responsive systems.
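The point about I/O-heavy tasks can be sketched with Python's `asyncio`. This is only an illustration: `fetch` is a hypothetical stand-in for an I/O call, and `asyncio.sleep` simulates the waiting time.

```python
import asyncio
import time

async def fetch(name, delay):
    """Stand-in for an I/O call; asyncio.sleep simulates the wait."""
    await asyncio.sleep(delay)  # the CPU is free for other tasks here
    return name

async def main():
    start = time.perf_counter()
    # The three waits overlap on one thread instead of running back to back.
    results = await asyncio.gather(
        fetch("fries", 0.2), fetch("meat", 0.2), fetch("vegetables", 0.2)
    )
    elapsed = time.perf_counter() - start
    return results, elapsed

results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")
```

Run one after another, the three waits would take about 0.6 seconds. Interleaved on a single thread, they finish in roughly the time of the longest one, with no extra cores needed.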

Besides, simultaneous changes to the same data might corrupt it.

Yet it’s difficult to determine when a thread will get scheduled or which instructions it’ll run.

So it’s necessary to use synchronization techniques, such as locking.

Think of a thread as the smallest processing unit scheduled by the operating system.

A thread must acquire the lock before it runs a protected set of operations. Any other thread that wants to run those operations has to wait until the current thread releases the lock.
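Here is a minimal sketch of locking, using `threading.Lock` from Python's standard library. The shared `counter`, the helper `add_many`, and the thread and iteration counts are assumptions chosen for illustration.

```python
import threading

counter = 0
lock = threading.Lock()

def add_many(times):
    """Increment the shared counter `times` times, one locked step at a time."""
    global counter
    for _ in range(times):
        with lock:        # acquire the lock; it is released on leaving the block
            counter += 1  # read-modify-write, protected by the lock

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000
```

Without the lock, two threads could read the same old value and one of the increments could be lost.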

Two popular synchronization primitives are the mutex and the semaphore. Both coordinate access to a shared resource.
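One way to see the difference between the two primitives: a mutex lets one thread in at a time, while a counting semaphore lets in up to a fixed number. The sketch below uses Python's `threading.Semaphore` and `threading.Lock`; the limit of 2, the 6 workers, and the names `slots` and `use_resource` are assumptions for illustration.

```python
import threading
import time

slots = threading.Semaphore(2)  # at most 2 threads may hold a slot at once
state_lock = threading.Lock()   # mutex guarding the two counters below
active = 0
max_active = 0

def use_resource():
    """Take a slot, pretend to use the shared resource, then give the slot back."""
    global active, max_active
    with slots:
        with state_lock:
            active += 1
            max_active = max(max_active, active)
        time.sleep(0.05)        # simulated work on the shared resource
        with state_lock:
            active -= 1

workers = [threading.Thread(target=use_resource) for _ in range(6)]
for w in workers:
    w.start()
for w in workers:
    w.join()

print(max_active)  # never more than 2
```

The mutex protects the bookkeeping, and the semaphore caps how many threads use the resource at the same time.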