How Facebook Was Able to Support a Billion Users via Software Load Balancer ⚡

#58: Break into Meta Engineering (5 minutes)

This post outlines how Facebook scaled its load balancer to a billion users. You will find references at the bottom of this page if you want to go deeper.

Note: This post is based on my research and may differ from real-world implementation.

Once upon a time, Facebook ran on a single server.

Life was good.

But as more users joined, they faced scalability issues.

Although adding more servers temporarily solved their scalability problems, routing traffic became difficult.

So they were sad & frustrated.

Until one morning when they had a smart idea to create a software load balancer.

Yet they wanted to keep it reliable and offer extreme scalability.

So they used simple ideas to build it.

Onward.

Facebook Load Balancer

Here’s how they scaled the load balancer from 0 to 1 billion users:

1. One Million Users

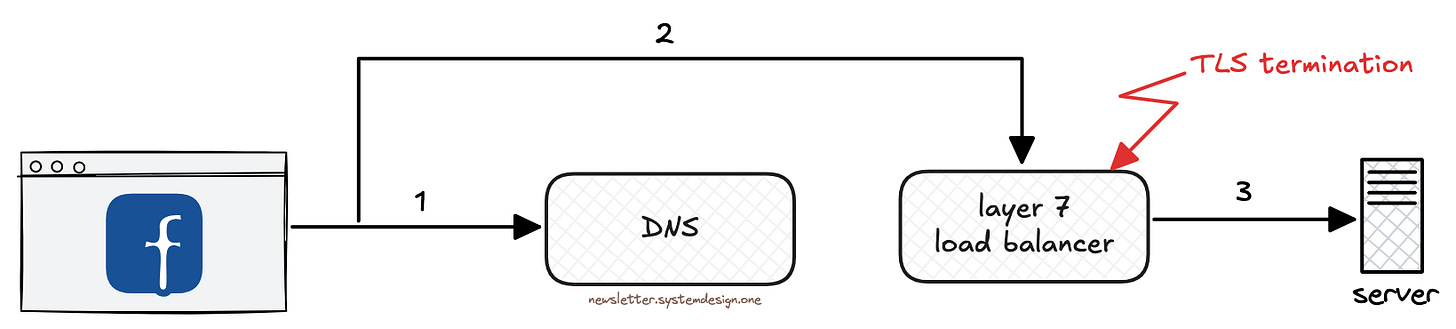

Clients send encrypted data using Transport Layer Security (TLS). Think of TLS as a security protocol that encrypts data in transit.

And the server must decrypt the data before processing it. This is called TLS termination.

Yet it needs a ton of computing power.

So they do TLS termination on the load balancer. And forward decrypted data to servers. Thus reducing server load.

They use a layer 7 load balancer to proxy requests. It routes traffic based on application data - URLs, HTTP headers, and so on.
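
Here's a minimal sketch of what routing on application data could look like. The pool names, the `X-Client` header, and the routing rules are all hypothetical and only there for illustration; the real rules aren't described in the sources for this post.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)


# Hypothetical routing rules: (URL path prefix, backend pool name).
ROUTES = [
    ("/api/", "api-servers"),
    ("/static/", "static-servers"),
]
DEFAULT_POOL = "web-servers"


def pick_pool(request: Request) -> str:
    """Choose a backend pool from application-level data (layer 7)."""
    # An HTTP header can take priority over path-based routing.
    if request.headers.get("X-Client") == "mobile":
        return "mobile-servers"
    # Otherwise the first matching URL prefix wins.
    for prefix, pool in ROUTES:
        if request.path.startswith(prefix):
            return pool
    return DEFAULT_POOL
```

For example, `pick_pool(Request("/api/feed"))` picks the `api-servers` pool, while a request with no matching prefix falls back to `web-servers`.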

2. Ten Million Users

Yet a single layer 7 load balancer might crash under high traffic.

So they added more layer 7 load balancers and put a layer 4 load balancer in front.

A layer 4 load balancer does Transmission Control Protocol (TCP) routing. Put simply, it doesn’t inspect application-level data. Instead, it forwards traffic based on IP address & port number.

Thus offering better performance.

Also they use consistent hashing for routing requests to layer 7 load balancers.

The IP addresses and port numbers get hashed to find the target load balancer.

If the original layer 7 load balancer fails, the request gets routed to the next layer 7 load balancer. And future requests get routed back to the original load balancer once it’s live again.
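
Here's a rough sketch of consistent hashing with failover, assuming a hash ring with virtual nodes. The class name, the MD5 hash, the replica count, and the `healthy` set are my assumptions, not details from Facebook's implementation.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Any stable hash works; MD5 is used here only for even spreading.
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes, replicas=100):
        # Each node gets many points on the ring to even out the load.
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def lookup(self, src_ip, src_port, healthy):
        """Return the first healthy node clockwise from the connection's hash."""
        if not self._ring:
            return None
        start = bisect.bisect(self._points, _hash(f"{src_ip}:{src_port}"))
        for offset in range(len(self._ring)):
            node = self._ring[(start + offset) % len(self._ring)][1]
            if node in healthy:
                return node
        return None  # no healthy layer 7 load balancer left
```

The same IP address and port always map to the same load balancer while it's healthy. If that load balancer drops out of `healthy`, the lookup walks on to the next one, and it maps back to the original as soon as it returns.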

They use Apache Zookeeper for service discovery. It lets the layer 4 load balancer find available layer 7 load balancers.

Besides, a new TCP connection would otherwise have to be created for every request.

Yet it’s not efficient to create one every time due to connection overhead.

So they maintain open TCP connections between layer 4 and layer 7 load balancers.

And keep a separate state table with the list of open connections. It lets them do failover quickly if the layer 4 load balancer fails.
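
Here's one way to picture the state table. This sketch assumes the table lives outside any single layer 4 load balancer and maps each connection to the layer 7 load balancer that was picked for it. The class names and the CRC32-based pick are hypothetical.

```python
import zlib


class StateTable:
    """Hypothetical shared table: connection tuple -> layer 7 load balancer."""

    def __init__(self):
        self._flows = {}

    def record(self, flow, backend):
        self._flows[flow] = backend

    def get(self, flow):
        return self._flows.get(flow)


class L4Balancer:
    def __init__(self, backends, table):
        self.backends = backends
        self.table = table

    def route(self, flow):
        # Reuse the recorded choice so existing connections stay put.
        backend = self.table.get(flow)
        if backend is None:
            key = "|".join(str(part) for part in flow).encode()
            backend = self.backends[zlib.crc32(key) % len(self.backends)]
            self.table.record(flow, backend)
        return backend
```

Because the table is separate, a standby layer 4 load balancer that reads the same table keeps sending an existing connection to the same layer 7 load balancer after taking over.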

3. Hundred Million Users

But a single layer 4 load balancer might crash under explosive traffic.

So they added more layer 4 load balancers and put a router in front.

Here’s how the router works:

It uses Border Gateway Protocol (BGP) to find the best routes to the layer 4 load balancers.

It uses the Equal-Cost Multi-Path (ECMP) routing technique to load balance traffic across those routes.

This avoids network congestion on a single route and makes better use of bandwidth.
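
A common way to do ECMP is to hash each connection's addresses, ports, and protocol, then pick one of the equal-cost next hops. Here's a minimal sketch of that idea. The function name and the CRC32 hash are assumptions, since real routers do this in hardware with their own hash functions.

```python
import zlib


def ecmp_next_hop(flow, next_hops):
    """Pick one of several equal-cost next hops for a connection.

    flow is (src_ip, src_port, dst_ip, dst_port, protocol).
    """
    key = "|".join(str(part) for part in flow).encode()
    # Same flow -> same hash -> same next hop, so packets stay in order.
    return next_hops[zlib.crc32(key) % len(next_hops)]
```

Packets of one connection always take the same route, while different connections spread across all the layer 4 load balancers.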

4. One Billion Users

Yet the router is now a single point of failure.

So they added more clusters within a data center - each cluster consists of a router and load balancers.

And set up extra data centers for more computing capacity.

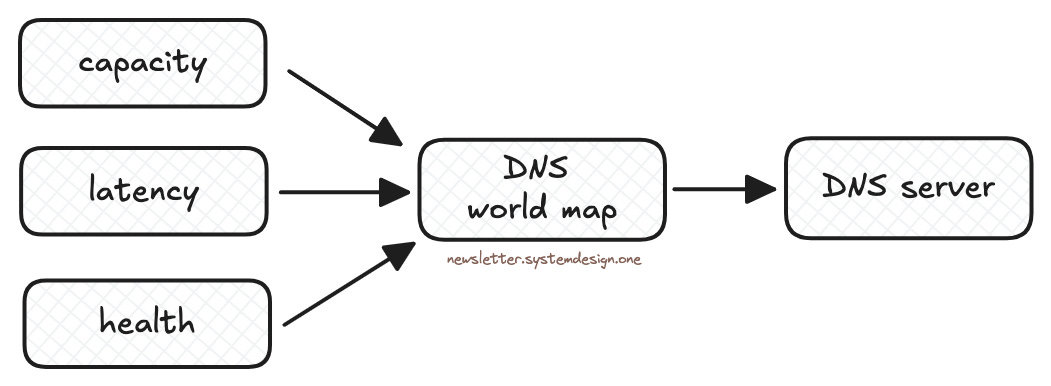

Besides, they do smart DNS load balancing across data centers.

A network map is created in real time based on server capacity, server health, and so on.

The requests then get routed to the optimal server based on this network map.
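
Here's a simplified sketch of how a DNS server could answer from such a network map. The `DataCenter` fields, the spare-capacity score, and the example IP addresses are all hypothetical; the real map likely uses many more signals.

```python
from dataclasses import dataclass


@dataclass
class DataCenter:
    name: str
    ip: str         # address handed back in the DNS answer
    healthy: bool
    capacity: int   # requests per second it can serve (hypothetical unit)
    load: int       # requests per second it serves right now


def resolve(network_map):
    """Return the IP of the healthy data center with the most spare capacity."""
    candidates = [dc for dc in network_map if dc.healthy and dc.load < dc.capacity]
    if not candidates:
        return None
    best = max(candidates, key=lambda dc: (dc.capacity - dc.load) / dc.capacity)
    return best.ip
```

An unhealthy or full data center never shows up in the answer, so new users get steered away from it as soon as the map is refreshed.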

They use software load balancers for flexibility.

And use commodity hardware everywhere except for the router.

And Facebook became one of the most visited sites with ~3 billion users.
