How to Scale an App to 100 Million Users on GCP 🚀

#54: A Simple Guide to Scalability (7 minutes)


This isn’t a sponsored post. I wrote it for someone getting started with the Google Cloud Platform (GCP).

There are various ways to scale an app on the cloud, and this is just one of them. You will find references at the bottom of this page if you want to go deeper.


Once upon a time, there lived 2 software engineers named Dominik and James.

They worked for a tech company named Hooli.

Although they had extremely smart ideas, their manager never listened to them.

So they were sad and frustrated.

Until one afternoon when they had a wild idea to launch a startup.

And their growth rate was mind-boggling.

Yet they wanted to keep it simple. So they hosted the app on GCP.

Onward.


Google Cloud Scalability

Here’s their scalability journey from 0 to 100 million users:

1. Thousand Users:

This is how they served the first 1000 users.

They created a minimum viable product[1] (MVP) using a monolithic architecture: a single web server and a MySQL database.

Then they ran the app on a single virtual machine.

A virtual machine provides workload isolation and extra security.

They used Google Cloud Shell to deploy the app on GCP. It’s a browser-based command-line environment for managing and deploying apps on GCP.

Cloud DNS routed user traffic to the virtual machine. The Domain Name System (DNS) translates human-readable domain names into IP addresses.

Yet they had users only from North America.

So they deployed the app only in that region to keep data closer to users and save costs.

Life Was Good.

2. Five Thousand Users:

Until one day when they received a phone call as users couldn’t access the app.

They faced performance issues due to many concurrent users. And the single virtual machine running their app crashed. (This is a single point of failure.)

They needed a scalable[2] and resilient[3] app.

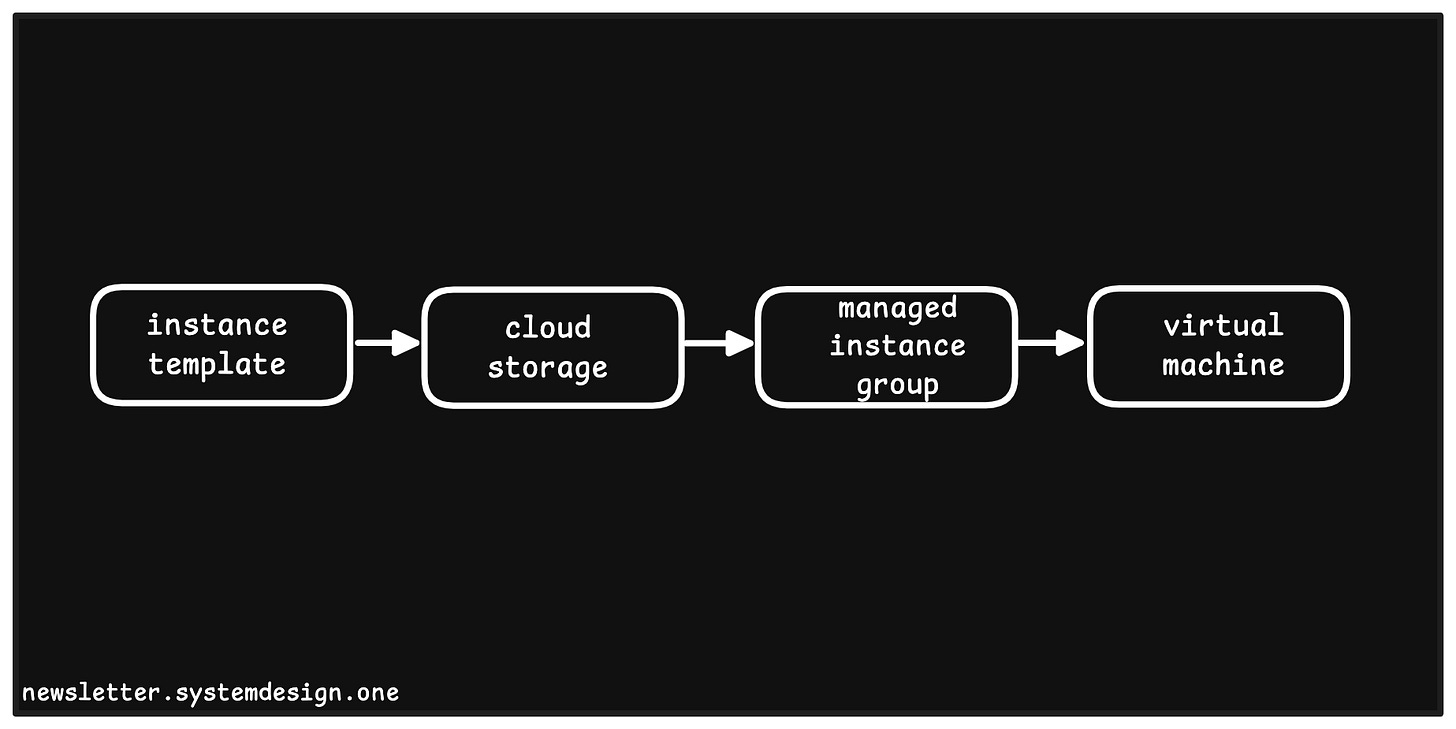

So they set up autoscaling[4] using a managed instance group (MIG). It uses an instance template to create new virtual machine instances.

This is how they used MIG:

Autoscaler: creates and destroys virtual machines based on load (CPU & memory)

Autohealer: recreates a virtual machine if it becomes unhealthy

And it automates virtual machine creation across different zones for high availability.
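Here’s a minimal sketch of the two MIG behaviors described above. It is not GCP’s actual algorithm; the `Instance` class, the 60% CPU target, and the size limits are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    cpu_percent: int  # current CPU utilization, 0 to 100
    healthy: bool = True


def desired_size(instances, target_cpu_percent=60, min_size=1, max_size=10):
    """Autoscaler: how many VMs are needed to bring average CPU near the target."""
    total_load = sum(vm.cpu_percent for vm in instances)
    # Integer ceiling division: total load spread over VMs running at the target.
    needed = -(-total_load // target_cpu_percent)
    return max(min_size, min(max_size, needed))


def heal(instances):
    """Autohealer: replace each unhealthy VM with a fresh one (hypothetical naming)."""
    return [
        vm if vm.healthy else Instance(name=vm.name + "-recreated", cpu_percent=0)
        for vm in instances
    ]
```

With two virtual machines at 90% CPU, `desired_size` asks for three, because 180% of total load spread at 60% per machine needs three machines.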

Yet they had to distribute traffic across the virtual machines for performance.

So they set up a load balancer and routed user traffic to its single IP address. It then distributed the traffic across the virtual machines.
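To make the idea concrete, here’s a tiny sketch of a load balancer that hands requests to virtual machines in turn. Round-robin is an assumption on my part (the story doesn’t say which algorithm they used), and the backend names are made up.

```python
class LoadBalancer:
    """Clients talk to one address; requests are spread across backends."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self.backends = list(backends)
        self._next = 0

    def route(self):
        # Round-robin: pick backends one after another, then wrap around.
        backend = self.backends[self._next % len(self.backends)]
        self._next += 1
        return backend


lb = LoadBalancer(["vm-a", "vm-b", "vm-c"])
first_four = [lb.route() for _ in range(4)]
```

The fourth request wraps around to the first virtual machine again, so no single machine takes all the traffic.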

Also, they set up monitoring and logging to troubleshoot system failures.

Besides, they set up continuous integration and continuous delivery (CI/CD). It allowed them to automate:

Testing

Development

Deployment

Thus they got faster feedback, improved code quality, and made releases more reliable.

And Life Was Good Again.

3. Ten Thousand Users:

Virtual machines failed at times.

They ran the web server and MySQL database in the same virtual machine instance. Put simply, all functionality ran within a single virtual machine. This limited availability for some users whenever a virtual machine failed.

So they decoupled the app into a 3-tier architecture:

Frontend

Backend

Database

And ran each layer on separate virtual machines.

They deployed virtual machines across different zones to prevent single-zone failures.

And they set up autoscaling for each layer. They used one load balancer to route traffic to the frontend layer, while another distributed traffic across the backend layer.

Besides, they ran MySQL in a leader-follower replication topology for high availability.
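Here’s a rough sketch of how leader-follower replication behaves: writes go to the leader, reads are spread across followers, and a follower can take over if the leader fails. Plain dictionaries stand in for MySQL servers, and copying each write to followers immediately is a simplification (real replication is often asynchronous). The class and method names are hypothetical.

```python
class ReplicatedDatabase:
    def __init__(self, follower_count=2):
        self.leader = {}
        self.followers = [{} for _ in range(follower_count)]
        self._read_index = 0

    def write(self, key, value):
        # All writes go to the leader, which copies them to every follower.
        self.leader[key] = value
        for follower in self.followers:
            follower[key] = value

    def read(self, key):
        # Reads rotate across followers; fall back to the leader if none remain.
        if not self.followers:
            return self.leader.get(key)
        self._read_index = (self._read_index + 1) % len(self.followers)
        return self.followers[self._read_index].get(key)

    def promote_follower(self):
        """Failover: the first follower becomes the new leader."""
        self.leader = self.followers.pop(0)
```

Because a follower already holds a copy of the data, promoting it keeps the app available when the leader goes down.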

Many users from Europe and Asia signed up in the meantime.

And their growth was inevitable. Yet new users faced latency issues as they were located far from the servers. So they deployed the servers across many regions on GCP.

Very Neat.

4. Hundred Thousand Users:

But one day, they noticed database performance issues.

The database was hit by a heavy volume of concurrent reads and writes. And adding more disks to increase input/output operations per second (IOPS) takes manual operational work. So they moved to Cloud SQL, Google’s managed relational database service.

It automatically extends the disk without downtime.

Yet the database often got queried for the same data.

So they installed an in-memory database (Redis) between the backend and database. It cached frequently accessed data for fast access. And reduced database load.
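A minimal sketch of this caching idea, assuming the common cache-aside pattern (the story doesn’t name the exact pattern). A dictionary stands in for Redis, and `load_from_db`, the 60-second TTL, and the class name are illustrative assumptions.

```python
import time


class CacheAside:
    def __init__(self, load_from_db, ttl_seconds=60.0, clock=time.monotonic):
        self._load = load_from_db      # function that queries the database
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}             # key -> (value, expires_at)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is not None and entry[1] > self._clock():
            return entry[0]            # cache hit: the database is not touched
        value = self._load(key)        # cache miss: query the database once
        self._entries[key] = (value, self._clock() + self._ttl)
        return value

    def invalidate(self, key):
        # Call this after a write so readers don't see stale data.
        self._entries.pop(key, None)


db_calls = []


def slow_query(key):
    db_calls.append(key)
    return key.upper()


cache = CacheAside(slow_query)
cache.get("profile")
cache.get("profile")
```

Two reads of the same key cause only one database query, which is how the cache reduces database load.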

This stabilized their data layer.

Very Clean.

5. One Million Users:

Their growth skyrocketed.

Yet one morning, they noticed user traffic routed to failed virtual machines.

Here's what happened. They used DNS geo-routing for user traffic. But clients’ DNS caches went stale and kept pointing to the failed virtual machines. A simple fix is to reduce the cache time to live (TTL). But that still requires frequent DNS policy updates for good results.
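Here’s a small simulation of the stale-cache problem. The resolver class, the domain name, the IP addresses, and the 300-second TTL are all made up for illustration; time is passed in as a number so the behavior is easy to follow.

```python
class CachingResolver:
    """A client-side DNS cache that trusts an answer until its TTL expires."""

    def __init__(self, authoritative_records, ttl_seconds):
        self.records = authoritative_records  # name -> IP, the source of truth
        self.ttl = ttl_seconds
        self._cache = {}                      # name -> (ip, expires_at)

    def resolve(self, name, now):
        cached = self._cache.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]                  # may be stale
        ip = self.records[name]
        self._cache[name] = (ip, now + self.ttl)
        return ip


records = {"app.example.com": "203.0.113.10"}
resolver = CachingResolver(records, ttl_seconds=300)

before_failure = resolver.resolve("app.example.com", now=0)
records["app.example.com"] = "203.0.113.20"   # the DNS policy is updated after a failure
during_ttl = resolver.resolve("app.example.com", now=100)
after_ttl = resolver.resolve("app.example.com", now=300)
```

The client keeps using the old address until the TTL runs out, even though the record already changed. A shorter TTL shrinks that window but doesn’t remove it.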

So they set up the Google Cloud global load balancer.

These are the benefits of using the global load balancer:

A single IP address gets configured on the global load balancer, so there’s no need to update the DNS policy

It detects failed virtual machines using periodic health checks

And routes the traffic to another region if a region fails.
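The benefits above can be sketched as a routing function. This is only an illustration, not how Google’s load balancer is implemented: the region names, backend names, and the fallback order (simply the order regions are listed in) are assumptions.

```python
def pick_backend(user_region, regions, health):
    """Prefer a healthy backend in the user's region, else fail over to another region.

    regions: dict of region name -> list of backend names
    health:  dict of backend name -> True/False from the latest health check
    """
    ordered = [user_region] + [r for r in regions if r != user_region]
    for region in ordered:
        for backend in regions.get(region, []):
            if health.get(backend, False):
                return region, backend
    raise RuntimeError("no healthy backend in any region")


regions = {
    "us-east": ["us-vm-1", "us-vm-2"],
    "europe-west": ["eu-vm-1"],
}
health = {"us-vm-1": False, "us-vm-2": True, "eu-vm-1": True}

normal = pick_backend("us-east", regions, health)

health["us-vm-2"] = False                      # the whole region is now down
failover = pick_backend("us-east", regions, health)
```

Clients keep using the same single IP address the whole time. Only the choice made behind it changes.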

Yet web pages with images were slow for some users.

So they set up a content delivery network (CDN) for fast delivery. And used Cloud Storage, an object storage service, for static content.

Serving images through the CDN is also cheaper because it reduces bandwidth usage at the origin.
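Here’s a toy model of why a CDN saves origin bandwidth. A dictionary stands in for the storage bucket, and the `EdgeCache` class, the file path, and the sizes are illustrative assumptions.

```python
class EdgeCache:
    """A CDN edge location that fetches each file from the origin only once."""

    def __init__(self, origin):
        self.origin = origin            # path -> bytes, stands in for object storage
        self.cached = {}
        self.origin_bytes_served = 0

    def get(self, path):
        if path not in self.cached:
            body = self.origin[path]    # cache miss: go back to the origin
            self.origin_bytes_served += len(body)
            self.cached[path] = body
        return self.cached[path]        # cache hit: served from the edge


origin = {"/logo.png": b"x" * 1000}
cdn = EdgeCache(origin)

for _ in range(100):
    cdn.get("/logo.png")
```

A hundred requests for the same image cost the origin only one download’s worth of bandwidth. The edge serves the rest.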

They wanted to scale the data layer again.

But didn’t want to invest time and effort into it. (Scalability without sacrificing data consistency is difficult.)

So they used Google Cloud Spanner. It’s a scalable relational database with strong consistency.

Very Straight.