How Disney+ Hotstar Delivered 5 Billion Emojis in Real Time

#35: Learn More - Hotstar Architecture for Emojis (5 Minutes)

This post outlines how Disney+ Hotstar delivered billions of emojis in real time. If you want to learn more, scroll to the bottom and find the references.

February 2015 - Mumbai, India.

Disney+ Hotstar starts streaming live cricket.

Yet it lacked an immersive user experience.

So they created a live feed where people can express their moods via emojis, making live cricket a more engaging experience.

And 55 million raving fans of cricket in India sent over 5 billion emojis in real time during the 2019 Cricket World Cup.

Hotstar Architecture

They don’t do caching because emojis must be shown in real time.

They also split the services into smaller components so that each one can scale independently.

Besides, they avoid blocking resources and support high concurrency via asynchronous processing.

Here’s how they scaled emojis to millions of concurrent users:

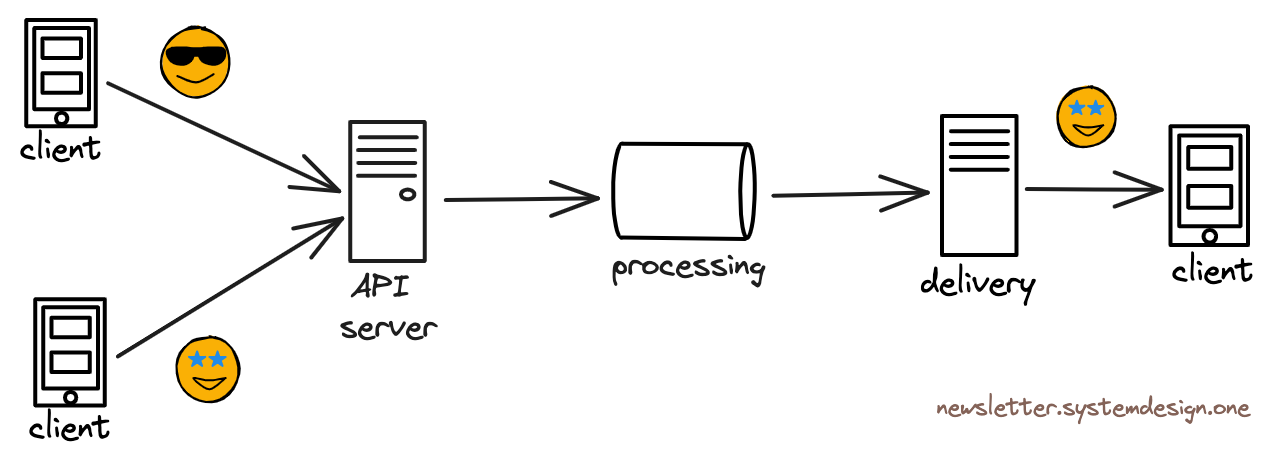

1. Receiving Emojis From Clients

Clients send emojis to the server over HTTP. The API server is written in the Go programming language.

The data gets written to a local buffer before the server returns success to the client. This keeps client connections from being held open on the API server while the data is persisted.

And the buffered data is asynchronously written in batches to the message queue. They use Goroutines while writing to the message queue for concurrency.

Goroutines are lightweight threads, managed by the Go runtime, that execute functions concurrently.
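The buffer-then-batch pattern described above can be sketched roughly as follows. This is a minimal illustration, not Hotstar's code: the `Emoji` type, the `Batcher` type, the buffer and batch sizes, and the `flush` callback (a stand-in for a real Kafka producer) are all assumptions made for the example.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// Emoji is a hypothetical payload; the real schema is not given in the post.
type Emoji struct {
	UserID string
	Code   string
}

// defaultInterval is an assumed flush interval, chosen only for illustration.
const defaultInterval = 50 * time.Millisecond

// Batcher buffers emojis in memory and flushes them in batches from a
// background goroutine. flush stands in for a message-queue producer.
type Batcher struct {
	in        chan Emoji
	batchSize int
	interval  time.Duration
	flush     func([]Emoji)
	wg        sync.WaitGroup
}

func NewBatcher(bufSize, batchSize int, interval time.Duration, flush func([]Emoji)) *Batcher {
	b := &Batcher{in: make(chan Emoji, bufSize), batchSize: batchSize, interval: interval, flush: flush}
	b.wg.Add(1)
	go b.run()
	return b
}

// Submit never blocks: it reports false when the local buffer is full, so the
// HTTP handler can answer the client right away either way.
func (b *Batcher) Submit(e Emoji) bool {
	select {
	case b.in <- e:
		return true
	default:
		return false
	}
}

// Close flushes whatever is still buffered and waits for the background
// goroutine to exit. Submit must not be called after Close.
func (b *Batcher) Close() {
	close(b.in)
	b.wg.Wait()
}

func (b *Batcher) run() {
	defer b.wg.Done()
	ticker := time.NewTicker(b.interval)
	defer ticker.Stop()
	batch := make([]Emoji, 0, b.batchSize)
	send := func() {
		if len(batch) == 0 {
			return
		}
		b.flush(batch)
		batch = make([]Emoji, 0, b.batchSize) // fresh slice, flush may keep the old one
	}
	for {
		select {
		case e, ok := <-b.in:
			if !ok { // channel closed: flush the remainder and stop
				send()
				return
			}
			batch = append(batch, e)
			if len(batch) >= b.batchSize {
				send()
			}
		case <-ticker.C: // flush partial batches so data never waits too long
			send()
		}
	}
}

func main() {
	total := 0
	b := NewBatcher(1024, 100, defaultInterval, func(batch []Emoji) {
		total += len(batch) // only the background goroutine calls flush
	})
	for i := 0; i < 250; i++ {
		b.Submit(Emoji{UserID: fmt.Sprint(i), Code: "🏏"})
	}
	b.Close()
	fmt.Println("flushed:", total)
}
```

The design choice worth noticing is that `Submit` only touches the in-memory channel, so the request path never waits on the message queue.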

They use Apache Kafka as the message queue. It decouples system components and automatically removes the older messages on expiry.

Yet Kafka is operationally complex to run. So they use their in-house data platform, Knol, to run Kafka.

The message queue is a common mechanism for asynchronous communication between services.

2. Processing Emojis

They use Apache Spark to process the stream of emojis from Kafka.

Stream processing is a type of data processing designed for infinite data sets.

A streaming job in Spark aggregates the emojis over short intervals to offer a real-time experience. And the aggregated emojis get written back into Kafka.

They chose Spark because it offers micro-batching and good community support. It also offers fault tolerance through checkpointing.

Micro-batching is batching together data records every few seconds before processing them.

Checkpointing is a mechanism to store data and metadata on the file system, so that a job can recover after a failure.
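To make the micro-batching idea concrete, here is a small sketch in plain Go that groups emoji events into fixed time windows and counts each emoji per window. It does not use Spark or any Spark API; the `Event` and `WindowCount` types, the `Aggregate` function, and the 2-second window are assumptions made for the example.

```go
package main

import (
	"fmt"
	"sort"
)

// Event is a hypothetical emoji record with an arrival time in milliseconds.
type Event struct {
	UnixMillis int64
	Emoji      string
}

// WindowCount holds the per-emoji counts for one window.
type WindowCount struct {
	WindowStart int64
	Counts      map[string]int
}

// Aggregate assigns each event to a fixed window of widthMillis and counts
// emojis per window. It assumes widthMillis > 0 and non-negative timestamps.
func Aggregate(events []Event, widthMillis int64) []WindowCount {
	byWindow := map[int64]map[string]int{}
	for _, e := range events {
		start := e.UnixMillis - e.UnixMillis%widthMillis // round down to window start
		if byWindow[start] == nil {
			byWindow[start] = map[string]int{}
		}
		byWindow[start][e.Emoji]++
	}
	starts := make([]int64, 0, len(byWindow))
	for s := range byWindow {
		starts = append(starts, s)
	}
	sort.Slice(starts, func(i, j int) bool { return starts[i] < starts[j] })
	out := make([]WindowCount, 0, len(starts))
	for _, s := range starts {
		out = append(out, WindowCount{WindowStart: s, Counts: byWindow[s]})
	}
	return out
}

func main() {
	events := []Event{
		{100, "🏏"}, {900, "🔥"}, {1500, "🏏"}, // first 2-second window
		{2100, "🔥"}, // second 2-second window
	}
	for _, w := range Aggregate(events, 2000) {
		fmt.Println(w.WindowStart, w.Counts)
	}
}
```

Each window produces one small summary instead of one message per emoji, which is what keeps the downstream fan-out cheap.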

Besides, combining Spark and Kafka provides exactly-once processing of emojis, which prevents duplicates.

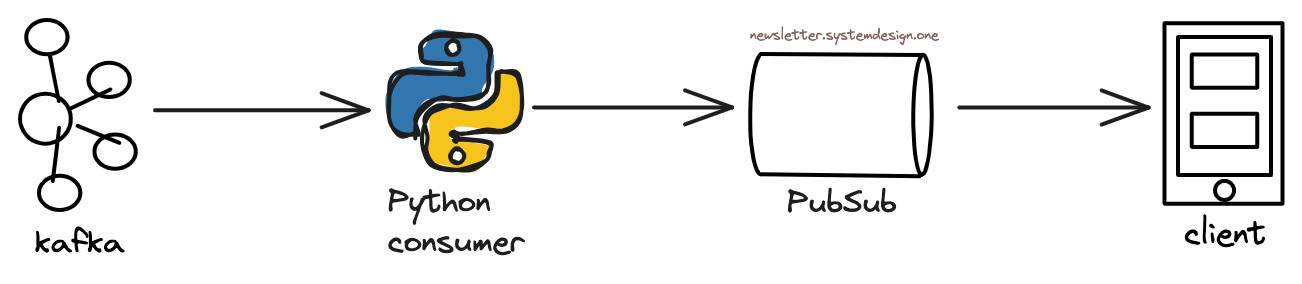

3. Delivering Emojis to Clients

They use Python consumers to read normalized emojis from Kafka.

And the emojis get sent to the PubSub infrastructure.

The PubSub infrastructure then delivers the emojis to the clients over a persistent connection.

They use the Message Queuing Telemetry Transport (MQTT) protocol to deliver the emojis.

MQTT is a lightweight messaging protocol over TCP. And it’s supported by a broad range of platforms and devices.

They use the EMQX (MQTT) message broker to distribute messages. It’s based on Erlang and offers very high performance.

A single machine in the EMQX cluster could handle 250k connections.

But EMQX internally uses a distributed database called Mnesia, which was designed to support only a few machines. So it became difficult to scale a single cluster beyond 2 million concurrent connections by adding more machines.
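As a back-of-the-envelope check on the figures above, the snippet below computes how many nodes are needed to hold a given number of connections at 250k connections per node. It is simple ceiling division; the `NodesNeeded` helper is hypothetical, and the result ignores headroom, publish nodes, and anything else a real deployment would need.

```go
package main

import "fmt"

// connectionsPerNode is the per-machine figure quoted in the post.
const connectionsPerNode = 250_000

// NodesNeeded returns the minimum number of nodes required to hold the given
// number of concurrent connections (ceiling division). It returns 0 for
// non-positive inputs.
func NodesNeeded(connections, perNode int) int {
	if connections <= 0 || perNode <= 0 {
		return 0
	}
	return (connections + perNode - 1) / perNode
}

func main() {
	// 2 million connections at 250k per node works out to 8 nodes.
	fmt.Println(NodesNeeded(2_000_000, connectionsPerNode))
}
```

So the 2 million mark corresponds to roughly 8 connection-holding nodes, which is consistent with a database layer that was designed for only a few machines.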

Thus they didn’t rely on a single EMQX cluster. Instead, they set up a multi-cluster system using the reverse bridge.

Each cluster contains a single publish node and many subscribe nodes.

A main publish node forwards the emojis to each cluster.

They run a Golang service on each cluster. It subscribes to all the messages from the main publish node and forwards them to the cluster's publish node, so every cluster receives every emoji. They called it the reverse bridge architecture.
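The topology described above can be modeled with a toy in-memory sketch. It uses no MQTT or EMQX API: the `Node` type stands in for a broker node, and `NewCluster` and `AttachBridge` are hypothetical helpers that only illustrate how a message fans out from the main publish node, through one bridge per cluster, to every subscribe node.

```go
package main

import "fmt"

// Node is a toy stand-in for a broker node: it hands every published message
// to each of its subscribers, synchronously.
type Node struct {
	Name string
	subs []func(msg string)
}

func (n *Node) Subscribe(fn func(msg string)) { n.subs = append(n.subs, fn) }

func (n *Node) Publish(msg string) {
	for _, fn := range n.subs {
		fn(msg)
	}
}

// Cluster has a single publish node and many subscribe nodes.
type Cluster struct {
	PublishNode    *Node
	SubscribeNodes []*Node
}

// NewCluster wires the cluster's publish node to each of its subscribe nodes.
func NewCluster(name string, subscribeNodes int) *Cluster {
	c := &Cluster{PublishNode: &Node{Name: name + "-pub"}}
	for i := 0; i < subscribeNodes; i++ {
		sub := &Node{Name: fmt.Sprintf("%s-sub-%d", name, i)}
		c.PublishNode.Subscribe(sub.Publish)
		c.SubscribeNodes = append(c.SubscribeNodes, sub)
	}
	return c
}

// AttachBridge plays the role of the per-cluster bridge service: it subscribes
// to the main publish node and forwards each message to the cluster's own
// publish node.
func AttachBridge(mainNode *Node, c *Cluster) {
	mainNode.Subscribe(c.PublishNode.Publish)
}

func main() {
	mainNode := &Node{Name: "main-pub"}
	received := 0
	for _, name := range []string{"cluster-a", "cluster-b"} {
		c := NewCluster(name, 2)
		for _, sub := range c.SubscribeNodes {
			// Each subscribe node would hold many client connections;
			// one counter stands in for all of them here.
			sub.Subscribe(func(msg string) { received++ })
		}
		AttachBridge(mainNode, c)
	}
	mainNode.Publish("🏏 x120")
	fmt.Println("deliveries:", received)
}
```

Because clusters never talk to each other, adding a cluster only adds one more subscriber on the main publish node.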

Besides, they set up autoscaling to adjust the number of subscribe nodes as load changes.

Disney+ Hotstar remains a major player in India's streaming industry.

And this case study shows that a simple tech stack with proven technologies is enough for high scalability.

Mind-blowing how scalable Goroutines and Erlang's Mnesia can be.

Great read and thanks for recommending my newsletter

"They don’t do caching because emojis must be shown in real-time."

Sorry for the noob question, but isn't one of the points of caching to support lower-latency requests?