How Disney+ Hotstar Scaled to 25 Million Concurrent Users

#6: You Need to Read This - Amazing Scalability Patterns (6 minutes)


February 2015 - Mumbai, India.

There was an increasing demand for online streaming in India.

Star India, a 21st Century Fox subsidiary, seized the opportunity and launched Hotstar.

Hotstar is a subscription-based video streaming service. It set a record of 25 million concurrent users, driven by fans of live cricket in India.

This post outlines the scalability techniques from Hotstar. If you want to learn more, scroll to the bottom and find the references.


Hotstar Scaling

Here are the scalability techniques:

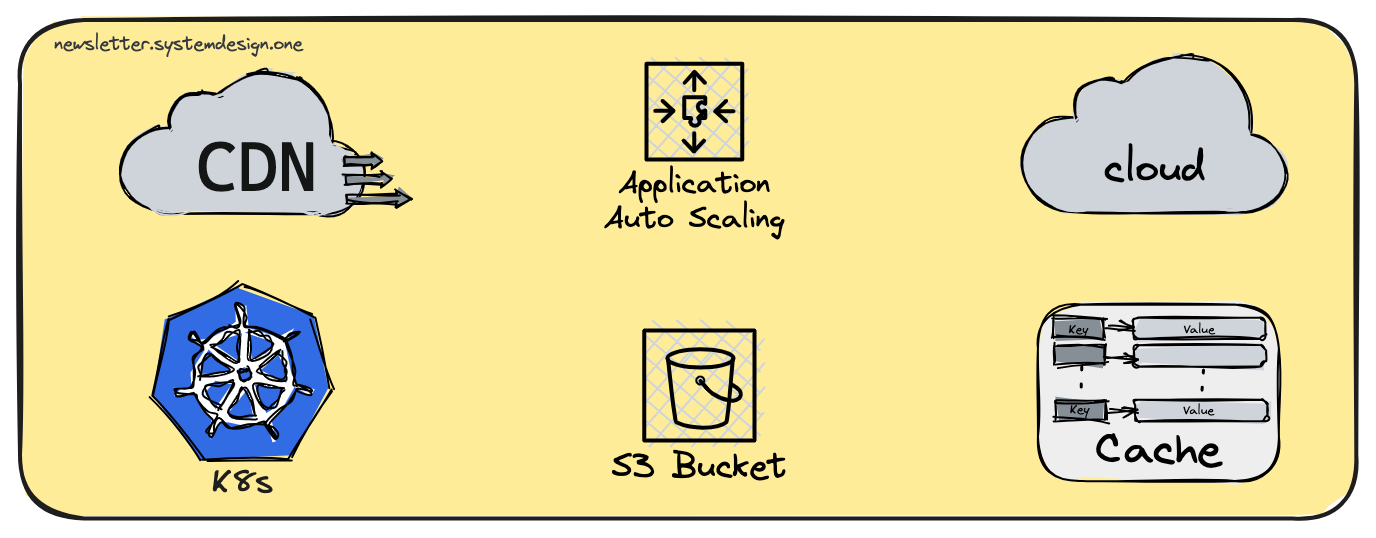

1. Autoscaling Is Not Enough

They didn't rely on autoscaling alone to handle traffic spikes, because a newly provisioned server takes time to become healthy. They observed a delay of about 90 seconds.

Instead, they prewarmed their infrastructure ahead of major sports events. They estimated the peak concurrent traffic from historical traffic patterns and provisioned for it in advance.

They prewarmed the infrastructure through autoscaling, scaling out before the traffic arrived rather than in reaction to it. They also tuned their servers to run at full capacity.
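The estimation step can be sketched as simple arithmetic. This is only an illustration: the function name, the per-server capacity, and the headroom figure are assumptions, not numbers published by Hotstar.

```python
def servers_to_prewarm(expected_peak_users, users_per_server, headroom_percent=20):
    """Return how many servers to provision before the event starts.

    All inputs are hypothetical: the peak comes from historical traffic,
    users_per_server from load tests, and headroom is a safety margin.
    """
    if users_per_server <= 0:
        raise ValueError("users_per_server must be positive")
    # Integer math avoids floating-point rounding surprises.
    target = expected_peak_users * (100 + headroom_percent)
    per_server = users_per_server * 100
    return -(-target // per_server)  # ceiling division


# Example: 25 million expected users, 50,000 users per server, 20% headroom.
print(servers_to_prewarm(25_000_000, 50_000))  # 600
```

The point of the headroom is that a wrong estimate cannot be corrected quickly: adding servers during the spike would hit the same startup delay described above.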

2. Avoid the Thundering Herd

The thundering herd problem occurs when many clients hit a server at the same moment, for example when they all retry or refresh at once. The resulting burst degrades performance.

Their techniques to avoid the thundering herd problem:

Add jitter to client requests

Activate client caching

Allow exponential backoff by the client
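Jitter and exponential backoff are often combined on the client. Here is a minimal sketch of that combination. The function names, delays, and retry count are assumptions for illustration, not Hotstar's client code.

```python
import random
import time


def backoff_delay(attempt, base=0.5, cap=30.0):
    """Exponential backoff with full jitter.

    The ceiling doubles with every attempt (up to cap), and the actual
    delay is picked at random below it, so clients don't retry in lockstep.
    """
    ceiling = min(cap, base * (2 ** attempt))
    return random.uniform(0, ceiling)


def call_with_retries(request, max_attempts=5, sleep=time.sleep):
    """Call request() and retry on ConnectionError with jittered backoff."""
    for attempt in range(max_attempts):
        try:
            return request()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # give up after the last attempt
            sleep(backoff_delay(attempt))
```

Without the random part, every client that failed at the same moment would retry at the same moment too, and the herd would simply arrive again.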

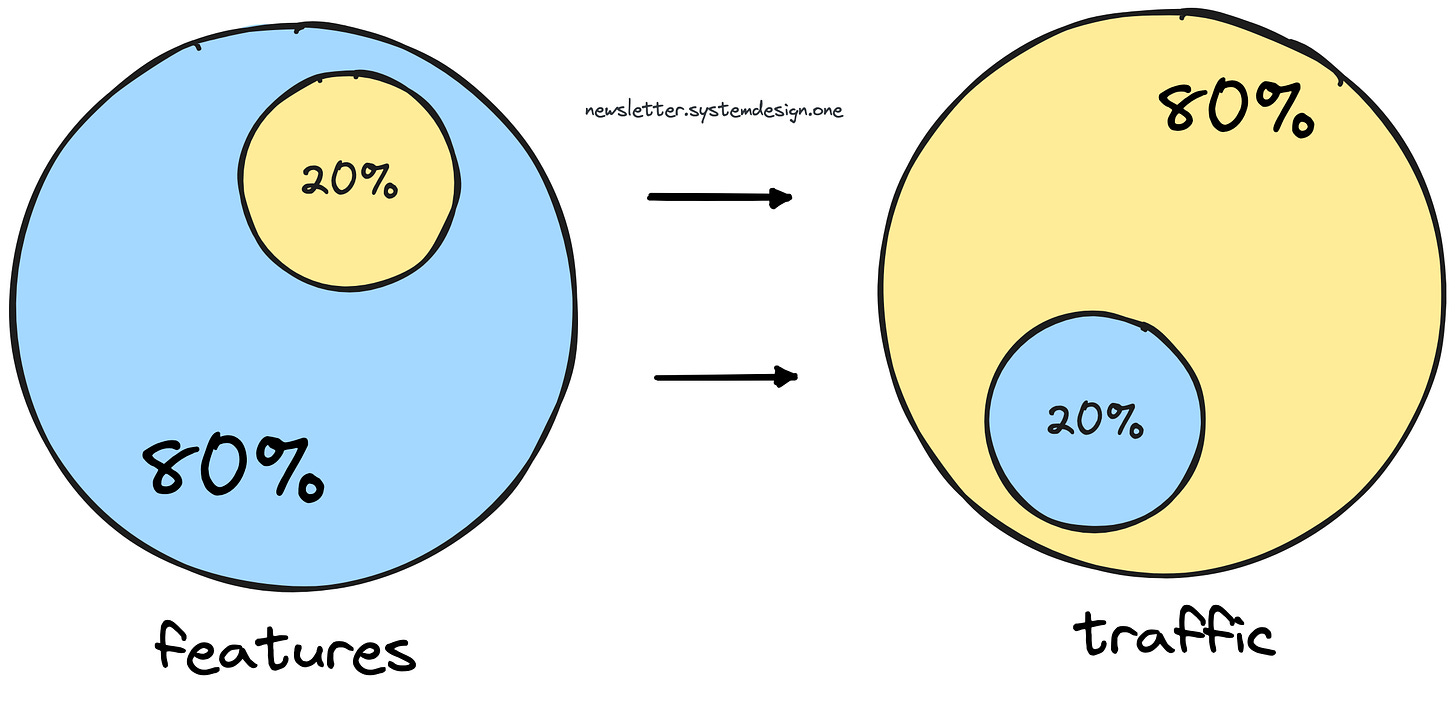

3. Apply the Pareto Principle

They studied the traffic patterns to identify important components.

The important components turned out to be the subscription engine, the metadata engine, and the streaming infrastructure. They focused on keeping these components highly available.

They also tuned each component separately, which allowed them to meet its specific scalability needs.

Besides, they kept their system design simple. This helped them achieve operational excellence.

They added multi-level redundancy to survive server failures.

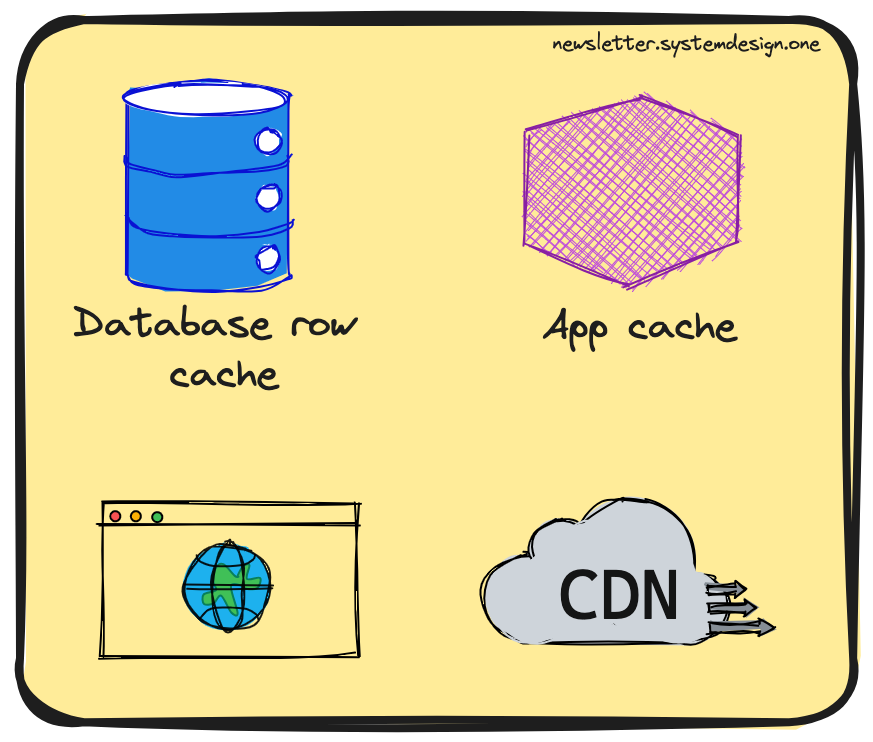

4. Don’t Repeat Yourself

They used a multi-level cache to improve read scalability. Their key idea was to reduce the traffic that reaches the origin server and to avoid repeating expensive operations.

They set an appropriate time-to-live (TTL) for cached data. This allowed them to keep the system functional even when the origin server failed.

They also monitored the TTL at every cache level, because they wanted to prevent the thundering herd and avoid data consistency issues.
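Here is a minimal sketch of a multi-level read-through cache with TTLs. The class and function names are hypothetical, and serving expired entries when the origin is down is an assumption about how a cache could keep the system functional, not a description of Hotstar's implementation.

```python
import time


class TTLCache:
    """One cache level. Entries expire ttl_seconds after being stored."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (value, stored_at)

    def get(self, key, allow_stale=False):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        if allow_stale or self.clock() - stored_at < self.ttl:
            return value
        return None

    def put(self, key, value):
        self._store[key] = (value, self.clock())


def read_through(key, levels, fetch_origin):
    """Check each level in order, then the origin, then stale entries.

    Note: None is treated as a miss, so None values cannot be cached.
    """
    for i, cache in enumerate(levels):
        value = cache.get(key)
        if value is not None:
            # Backfill the faster levels; each gets a fresh TTL of its own.
            for upper in levels[:i]:
                upper.put(key, value)
            return value
    try:
        value = fetch_origin(key)
    except Exception:
        # Origin is down: serve an expired copy rather than fail.
        for cache in levels:
            stale = cache.get(key, allow_stale=True)
            if stale is not None:
                return stale
        raise
    for cache in levels:
        cache.put(key, value)
    return value
```

A short TTL on the first level and a longer one on the second means most reads never reach the origin, while the data stays reasonably fresh.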

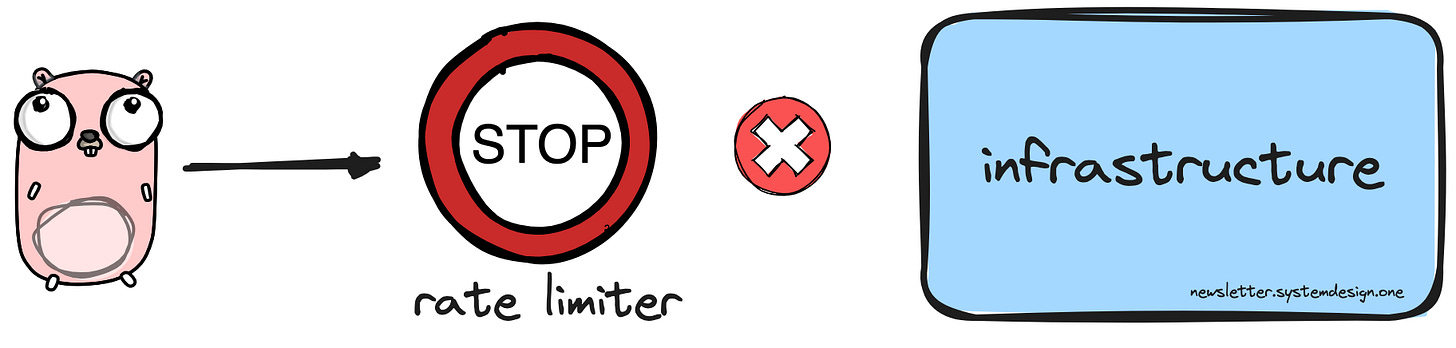

5. Rate Limit the Traffic

They rate limited the traffic. This prevented unwanted traffic from overloading the servers.

They also kept a denial list. This allowed them to stop suspicious traffic from degrading the system.
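The post doesn't say which rate limiting algorithm they used. As one common option, here is a token bucket per client combined with a denial list. All names and numbers are assumptions for illustration.

```python
import time


class TokenBucket:
    """Allows bursts up to `burst`, refilled at `rate_per_second`."""

    def __init__(self, rate_per_second, burst, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = burst
        self.tokens = float(burst)
        self.clock = clock
        self.updated_at = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.updated_at
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated_at = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gatekeeper:
    """Rejects denied clients outright and rate limits everyone else."""

    def __init__(self, rate_per_second, burst, denied=(), clock=time.monotonic):
        self.rate = rate_per_second
        self.burst = burst
        self.clock = clock
        self.denied = set(denied)  # hypothetical denial list of client ids
        self.buckets = {}

    def allow(self, client_id):
        if client_id in self.denied:
            return False
        bucket = self.buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.burst, self.clock)
            self.buckets[client_id] = bucket
        return bucket.allow()
```

Checking the denial list first keeps suspicious clients from consuming any capacity, even the small cost of a bucket lookup.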

6. Enable Panic Mode

They implemented a panic mode on the server. This allowed the server to tell the client when it was overloaded.

They also configured the client to back off exponentially. This technique helped keep the service available.

In panic mode, they favored the availability of important components and gracefully degraded non-essential ones, such as the recommendations service. This freed up the servers that ran non-essential components for reuse.

They also allowed users to bypass the non-essential components. This improved reliability.
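Here is a rough sketch of how panic mode could look from both sides. The load threshold, the response fields, and the function names are assumptions. Only the list of important components comes from the post.

```python
# Important components named in the post; everything else is non-essential.
ESSENTIAL = {"subscription", "metadata", "streaming"}


def handle_request(component, load, panic_threshold=0.9):
    """Server side: return (status, body) and shed non-essential work.

    `load` is a hypothetical utilization figure between 0 and 1.
    """
    panic = load >= panic_threshold
    if panic and component not in ESSENTIAL:
        # Tell the client the server is overloaded and when to retry.
        return 503, {"panic": True, "retry_after_seconds": 30}
    return 200, {"panic": panic, "data": f"{component} response"}


def build_home_page(load):
    """Client side: bypass components that are degraded in panic mode."""
    page = {}
    for component in ("metadata", "recommendations"):
        status, body = handle_request(component, load)
        if status == 200:
            page[component] = body["data"]
        # On 503 the component is skipped instead of failing the page.
    return page
```

Under normal load the page has both sections. Under panic the page still renders, just without recommendations.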

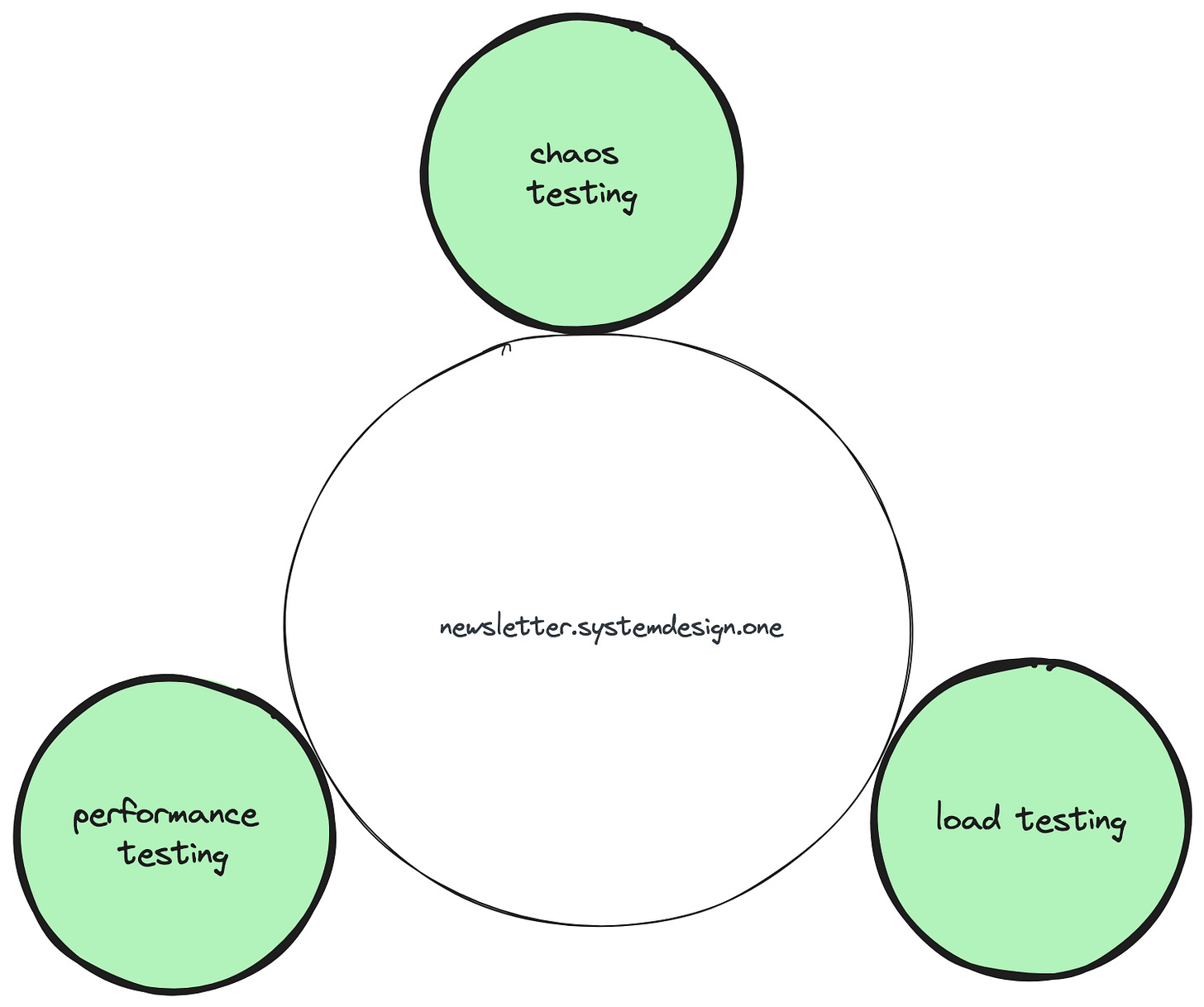

7. Testing

They identified bottlenecks through tests.

This is how they did it:

Chaos testing checks whether the system will remain functional if certain services fail.

Performance testing checks whether the system will degrade when the number of users grows. They used the flood.io tool to run performance tests.

Load testing checks the system performance under an expected number of concurrent users. They used the gatling.io tool to run load tests.

8. Orchestrate the Infrastructure

They considered reliable infrastructure to be the foundation of scalability.

They avoided configuration tools like Chef and Puppet, because running them at startup further delayed a newly provisioned server from becoming healthy.

Instead, they used baked container images. These images included everything a server needed to become healthy right after provisioning. This allowed them to scale quickly.

They also used proven technologies, such as Kubernetes, to simplify infrastructure operations.

9. Everything Is a Trade-off

Cloud providers have limitations. AWS limits the number of servers available for a specific instance type. This reduced the number of servers Hotstar could get during peak traffic. Their workaround was to use several instance types and get more servers in total.

AWS autoscaling also didn't offer the fine-grained control they needed. So, they added a custom autoscaling script to meet their needs.
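The instance type workaround can be sketched as a small planning function. This is an assumption about what such logic might look like, not their actual script. The type names, capacities, and limits are made up.

```python
def plan_instances(needed_capacity, instance_types):
    """Spread the needed capacity across instance types.

    instance_types is a list of (name, capacity_per_instance, max_available)
    tuples in order of preference. All values are hypothetical.
    """
    plan = {}
    remaining = needed_capacity
    for name, per_instance, limit in instance_types:
        if remaining <= 0:
            break
        wanted = -(-remaining // per_instance)  # ceiling division
        count = min(wanted, limit)
        if count > 0:
            plan[name] = count
            remaining -= count * per_instance
    if remaining > 0:
        raise RuntimeError("not enough instances across all types")
    return plan


# The preferred type runs out at 6 servers, so the rest comes from type-b.
print(plan_instances(1000, [("type-a", 100, 6), ("type-b", 50, 20)]))
```

The trade-off is operational: more instance types means more capacity, but also more configurations to test and tune.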

10. Tweak the Software

Unique problems need unique solutions, and system design is no exception.

They studied the data patterns to tweak their system. For example, they tuned the operating system kernel and application libraries. This improved performance.

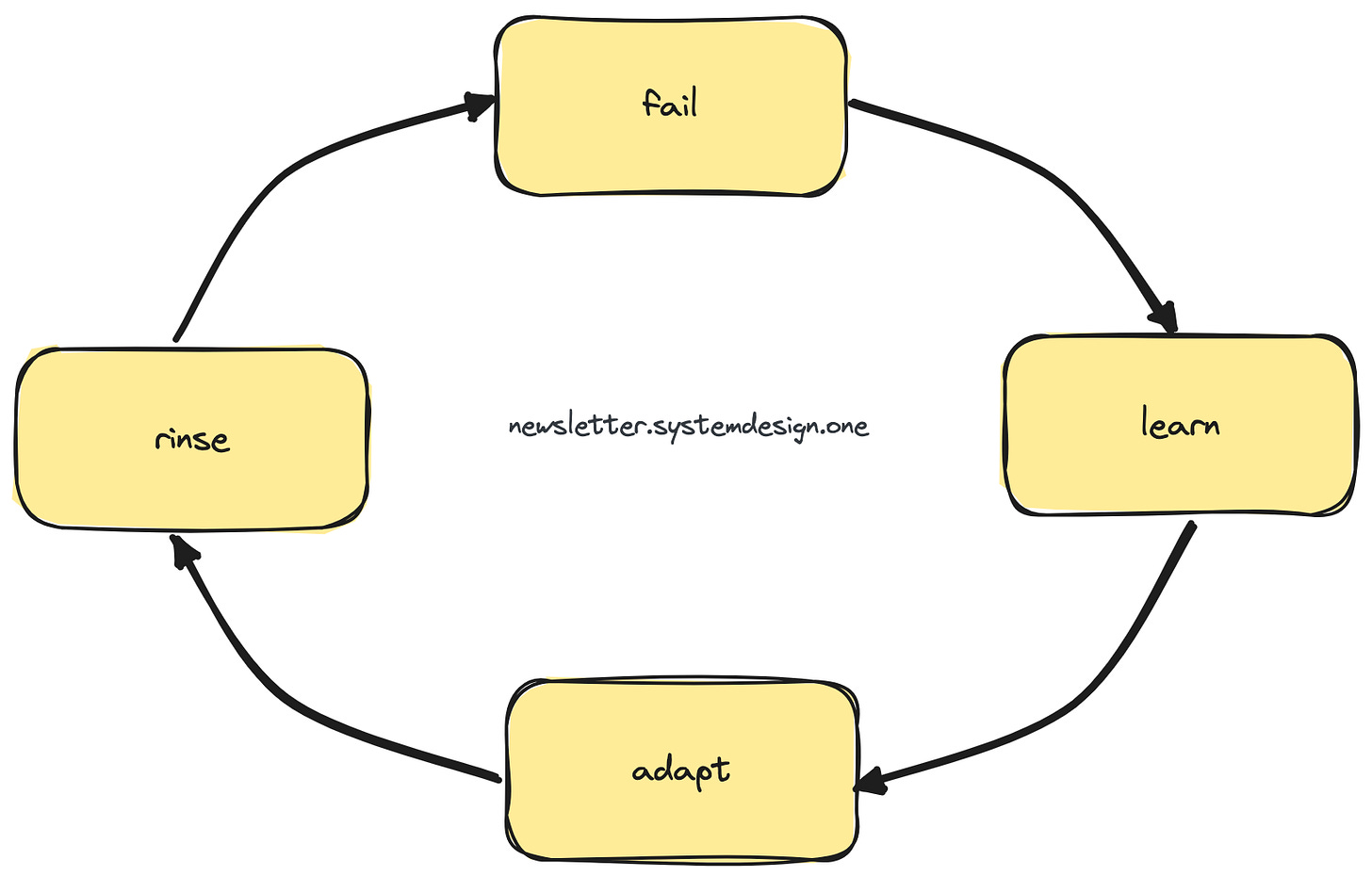

11. Flywheel Effect

They identified bottlenecks by profiling. For example, they observed that users with limited network bandwidth had a poor user experience. So, they tweaked the bit-rate settings.

They fed the lessons from each failure back into the system. This constant feedback loop kept improving their performance.

12. Keep an Open Communication Channel

Monitoring important system metrics was crucial for performance.

So, they maintained an open communication channel between stakeholders. This helped to align on the important system metrics.

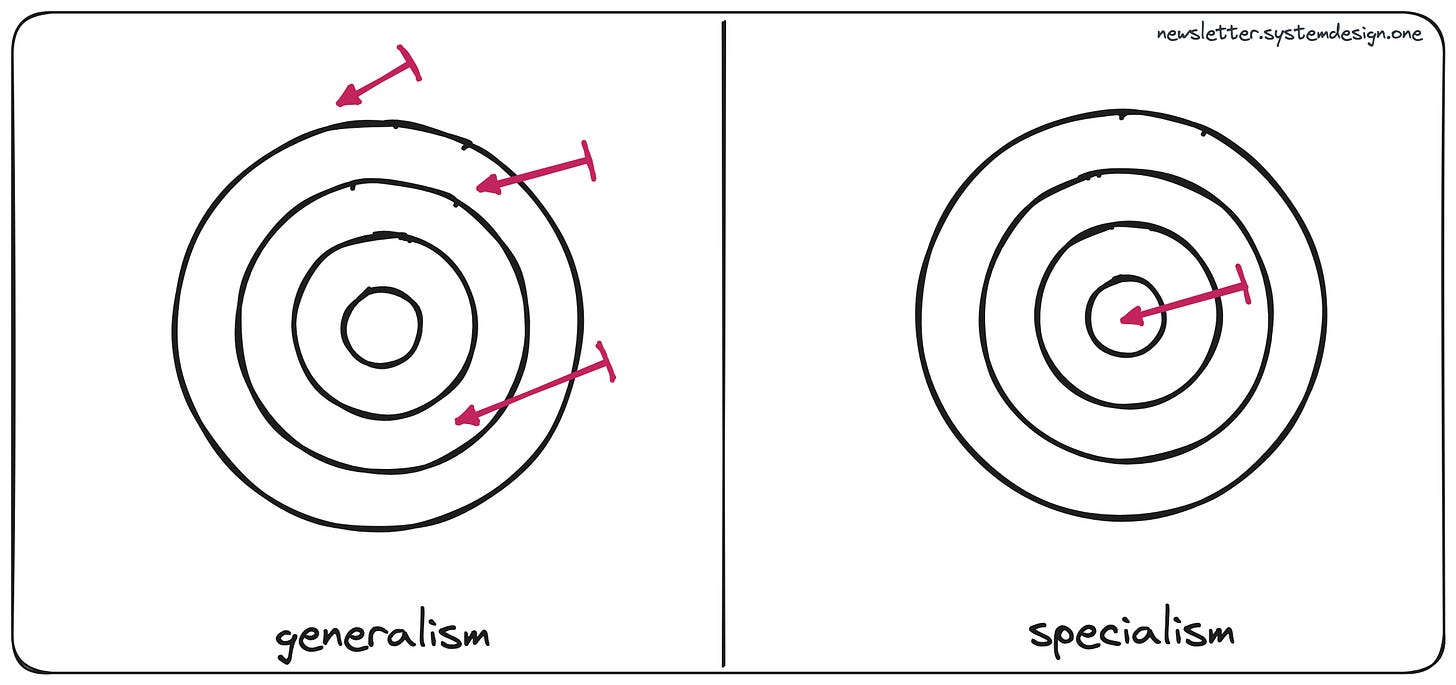

13. Grow the Scalability Toolbox

They knew that there was no silver bullet for scalability, because every tool has its limitations. So, they combined many tools and techniques to scale, and kept introducing new ones.

They also studied the traffic patterns to get the best out of each tool.

This case study shows that preparing for failures is important to scale. Hotstar claims that its current scalability techniques will handle 50 million concurrent users.

Walt Disney acquired Hotstar as part of its acquisition of 21st Century Fox. Disney+ Hotstar remains a major player in India's streaming industry.


References

Saxena, A. (2018). Scaling Is Not An Accident. [online] Medium. Available at: https://blog.hotstar.com/scaling-is-not-an-accident-895140ac84c0 [Accessed 15 Sep. 2023].

Saxena, A. (2019). Scaling the Hotstar Platform for 50M. [online] Medium. Available at: https://blog.hotstar.com/scaling-the-hotstar-platform-for-50m-a7f96a019add.

www.youtube.com. (n.d.). Scaling hotstar.com for 25 million concurrent viewers. [online] Available at YouTube [Accessed 15 Sep. 2023].

Wikipedia Contributors (2022). Disney+ Hotstar. [online] Wikipedia. Available at: https://en.wikipedia.org/wiki/Disney%2B_Hotstar.
