How to scale an app on the cloud without worrying about server management?

Simply use a serverless computing service such as AWS Lambda.

This post outlines the internal architecture of AWS Lambda. You will find references at the bottom of this page if you want to go deeper.

Note: This post is based on my research and may differ from real-world implementation.

Once upon a time, there was a 2-person startup.

They had a customer with a massive following on social networks.

And they were given an impossible task: build an extremely scalable app in 23 days.

Yet their skills were limited to mobile app development and business.

So they set up the infrastructure on the cloud.

Although it temporarily solved their scalability issues, new problems appeared.

Here are some of them:

1. Cost Efficiency

They had to pay for each provisioned virtual machine (VM), even when it sat idle.

And scaling VM instances down took extra effort, such as setting up autoscaling groups.

2. Server Management

The operating system needed monthly updates, and the server configuration had to be managed.

But they didn’t have the time for it.

3. Infrastructure Scalability

Managing network traffic becomes complex as the app scales.

And proper capacity planning is needed for performance.

But they didn’t know how to do it.

They wanted to ditch distributed systems problems.

And focus only on mobile app development. So they moved to AWS Lambda (serverless). It let them scale the backend quickly based on traffic.

And there’s no need to provision or manage servers.

Instead, they provide the code as a zip file or a container image, and Lambda runs it whenever an event occurs.
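For illustration, here is what a minimal Python function for Lambda might look like. Lambda's Python runtime calls a handler with the signature `handler(event, context)`; the function name `handler`, the `name` field in the event, and the API Gateway-style return value are assumptions for this sketch. The local call at the bottom only simulates an invocation.

```python
import json


def handler(event, context):
    """Entry point that Lambda's Python runtime calls once per event.

    `event` carries the trigger's payload (its shape depends on the event
    source); `context` carries runtime metadata and is unused here.
    The "name" field is a hypothetical example payload.
    """
    name = event.get("name", "world")
    # API Gateway-style response (assumption); other event sources expect other shapes.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Simulate an invocation locally; in Lambda, the service supplies both arguments.
    print(handler({"name": "startup"}, None))
```

The team only writes this function; provisioning, scaling, and patching the servers that run it are Lambda's job.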

Yet abstracting server management in Lambda is a difficult problem.

So the brilliant engineers at Amazon used simple ideas to solve it.

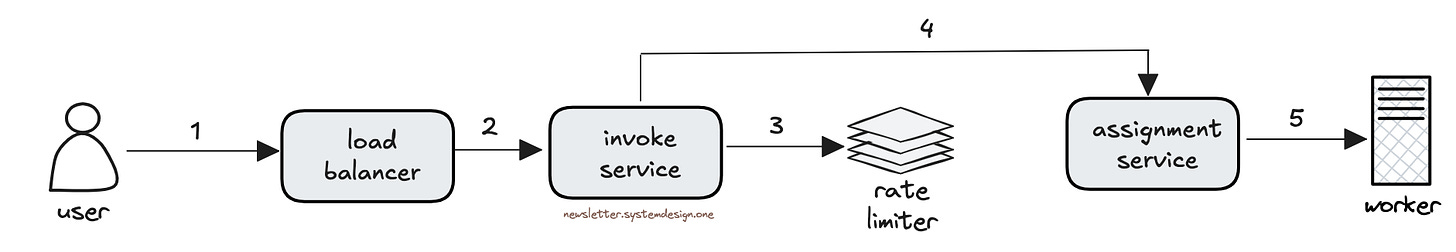

How Does AWS Lambda Work

Here’s how:

1. Scalability

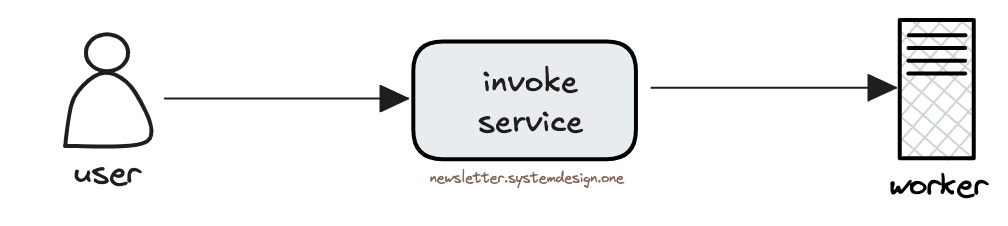

They run Lambda functions on a server called the worker.

And they use a microservices architecture to build the Lambda service.

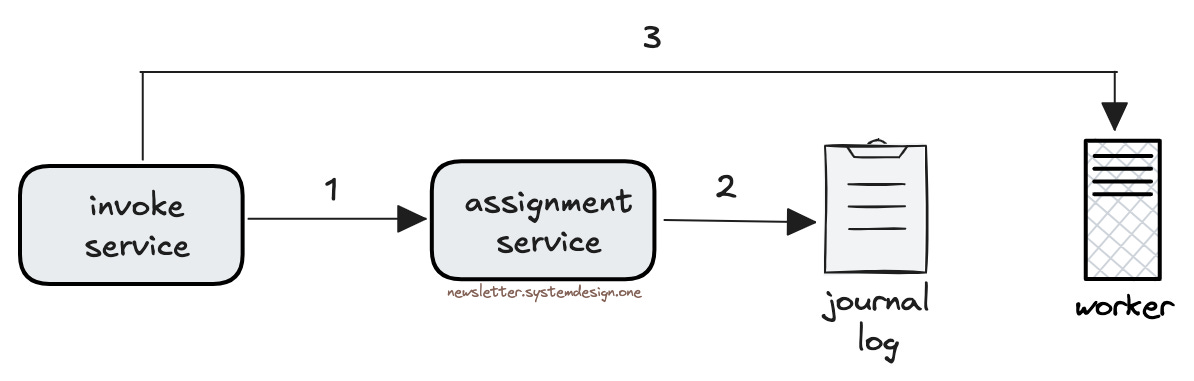

The invoke service forwards each Lambda request to a worker and returns the result to the caller.

They run Lambda functions across many workers for scalability. (Also across different availability zones for high availability.)

The assignment service tracks which workers are executing a specific Lambda function. (It runs in a leader-follower pattern.)

Also it lets them:

Check if a worker is ready for another Lambda invocation

Mark a worker as unavailable if an error occurs during the server startup
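To make the division of labor concrete, here is a toy, single-process sketch of the invoke service asking the assignment service for a ready worker. All class and method names (`Worker`, `AssignmentService`, `acquire`, `release`, `mark_unavailable`, `invoke`) are hypothetical; the real services are distributed, span availability zones, and are far more involved.

```python
from collections import defaultdict


class Worker:
    """A hypothetical worker that runs one invocation at a time."""

    def __init__(self, worker_id):
        self.worker_id = worker_id
        self.busy = False
        self.available = True

    def run(self, function_name, payload):
        # Stand-in for executing the function's code on this worker.
        return f"{function_name}({payload}) on {self.worker_id}"


class AssignmentService:
    """Tracks which workers run which function (toy, in-memory version)."""

    def __init__(self):
        self.workers_by_function = defaultdict(list)

    def register(self, function_name, worker):
        self.workers_by_function[function_name].append(worker)

    def acquire(self, function_name):
        # Check each worker: is it healthy and ready for another invocation?
        for worker in self.workers_by_function[function_name]:
            if worker.available and not worker.busy:
                worker.busy = True
                return worker
        return None  # No ready worker; a real system would set up a new one.

    def release(self, worker):
        worker.busy = False

    def mark_unavailable(self, worker):
        # E.g. the worker hit an error during startup: stop routing to it.
        worker.available = False


def invoke(assignment, function_name, payload):
    """Invoke service: forward the request to a worker, return the result."""
    worker = assignment.acquire(function_name)
    if worker is None:
        raise RuntimeError("no ready worker for " + function_name)
    try:
        return worker.run(function_name, payload)
    finally:
        assignment.release(worker)
```

Keeping routing (invoke) separate from bookkeeping (assignment) lets each service scale and fail independently, which fits the microservices split described above.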

Yet it’s important to track the workers running a specific Lambda function in a fault-tolerant way.

Otherwise, workers get orphaned during failures, and resources are wasted on creating new workers. So they store the worker metadata in an external journal log.

It lets them:

Perform a quick failover if the assignment service (leader) fails

Become resilient against host and network failures

Track workers running a specific Lambda function

In simple words, the journal log stores worker metadata durably.

And the assignment service (leader) writes to the journal log while followers read from it.
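A minimal sketch of this pattern, assuming an in-memory list stands in for the durable journal: the leader appends every assignment change before applying it, followers replay the log, and a follower promoted after a leader failure ends up with the same view of which workers run which function. `JournalLog`, `AssignmentNode`, and their methods are hypothetical names.

```python
class JournalLog:
    """Hypothetical durable, append-only log (here just an in-memory list)."""

    def __init__(self):
        self.entries = []

    def append(self, entry):
        self.entries.append(entry)

    def read_from(self, offset):
        return self.entries[offset:]


class AssignmentNode:
    """One replica of the assignment service; only the leader writes."""

    def __init__(self, journal, is_leader=False):
        self.journal = journal
        self.is_leader = is_leader
        self.workers = {}  # worker_id -> function name
        self.applied = 0   # number of journal entries applied so far

    def _write(self, entry):
        if not self.is_leader:
            raise PermissionError("only the leader writes to the journal")
        # Log first, then apply, so the change survives a leader crash.
        self.journal.append(entry)
        self.catch_up()

    def assign(self, worker_id, function_name):
        self._write(("assign", worker_id, function_name))

    def release(self, worker_id):
        self._write(("release", worker_id, None))

    def catch_up(self):
        # Replay entries not yet applied to rebuild the worker map.
        for op, worker_id, function_name in self.journal.read_from(self.applied):
            if op == "assign":
                self.workers[worker_id] = function_name
            else:
                self.workers.pop(worker_id, None)
            self.applied += 1

    def promote(self):
        # Failover: apply any remaining entries, then accept writes.
        self.catch_up()
        self.is_leader = True
```

Because the state lives in the log rather than in the leader's memory, a new leader only needs to replay entries; it does not have to rediscover workers, so none are orphaned.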

2. Performance

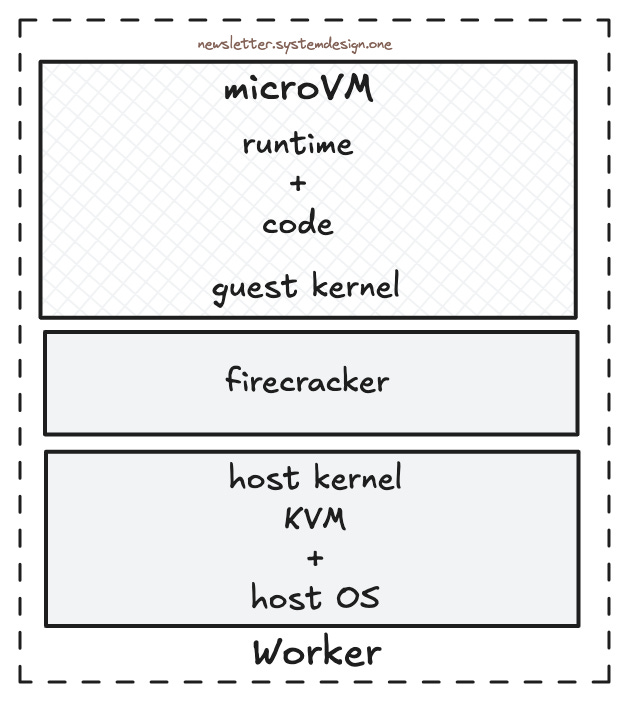

They use Amazon EC2 instances as workers.

And it's necessary to isolate each customer's Lambda functions.

Yet dedicating separate workers to each customer would waste resources.

So they use lightweight virtual machines called microVMs.

And a virtual machine manager called Firecracker.

It lets them run each function in a small VM instead of a whole server while still providing tenant-level isolation.

Put simply, Firecracker runs many microVMs on a single worker.
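The packing idea can be sketched as a toy model, assuming memory is the only shared resource: one worker hosts several microVMs from different customers, and each microVM holds a single tenant's function, so isolation comes from the VM boundary rather than from separate servers. The classes are hypothetical, and nothing here calls Firecracker's real API.

```python
from dataclasses import dataclass, field


@dataclass
class MicroVM:
    tenant: str  # each microVM belongs to exactly one customer
    function: str
    memory_mb: int


@dataclass
class Worker:
    """A hypothetical EC2 worker hosting many microVMs."""
    capacity_mb: int
    vms: list = field(default_factory=list)

    def free_mb(self):
        return self.capacity_mb - sum(vm.memory_mb for vm in self.vms)

    def launch(self, tenant, function, memory_mb):
        # The VMM (Firecracker, in Lambda's case) would boot a microVM here.
        if memory_mb > self.free_mb():
            return None  # Worker is full; the caller must try another worker.
        vm = MicroVM(tenant, function, memory_mb)
        self.vms.append(vm)
        return vm


def tenants_on(worker):
    """Distinct customers sharing this worker, each in its own microVM."""
    return {vm.tenant for vm in worker.vms}
```

For example, a 1024 MB worker could host a 128 MB, a 256 MB, and a 512 MB microVM for three different customers, instead of three mostly idle servers.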

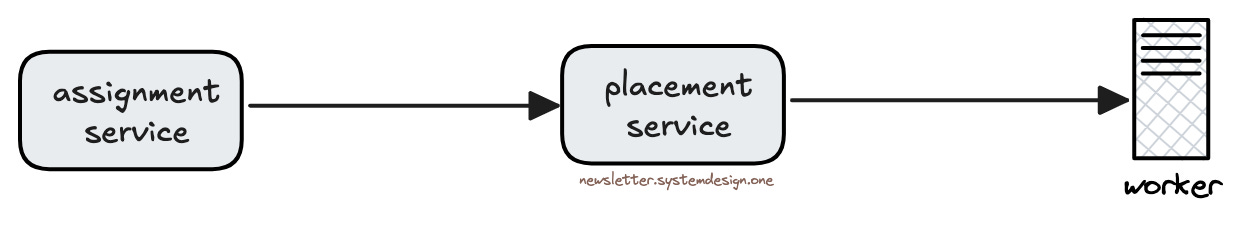

Besides, they use the placement service to set up new workers.

Also it lets them:

Lease workers for a specific period

Monitor the health of workers

Scale workers based on traffic

The worker then downloads the Lambda function code and initializes it.
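Here is a minimal sketch of the placement service's three responsibilities listed above, assuming time is passed in as plain seconds and each worker has a fixed throughput. `PlacementService`, `requests_per_worker`, and the method names are hypothetical, not Lambda's actual interface.

```python
import math


class PlacementService:
    """Hypothetical placement service: leases workers and sizes the fleet."""

    def __init__(self, requests_per_worker):
        self.requests_per_worker = requests_per_worker
        self.leases = {}   # worker_id -> lease expiry time (seconds)
        self.healthy = {}  # worker_id -> latest health-check result

    def lease(self, worker_id, now, duration):
        # Hand out the worker for a fixed period; it is reclaimed afterwards.
        self.leases[worker_id] = now + duration
        self.healthy[worker_id] = True

    def report_health(self, worker_id, ok):
        self.healthy[worker_id] = ok

    def reclaim(self, now):
        # Drop workers whose lease expired or that failed a health check.
        reclaimed = [w for w, expiry in self.leases.items()
                     if expiry <= now or not self.healthy[w]]
        for w in reclaimed:
            del self.leases[w]
            del self.healthy[w]
        return sorted(reclaimed)

    def workers_needed(self, requests_per_second):
        # Scale with traffic; round up so partial load still gets a worker.
        return math.ceil(requests_per_second / self.requests_per_worker)
```

Time-bounded leases mean a worker that silently disappears is eventually reclaimed even if nobody reports its failure.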

3. Latency

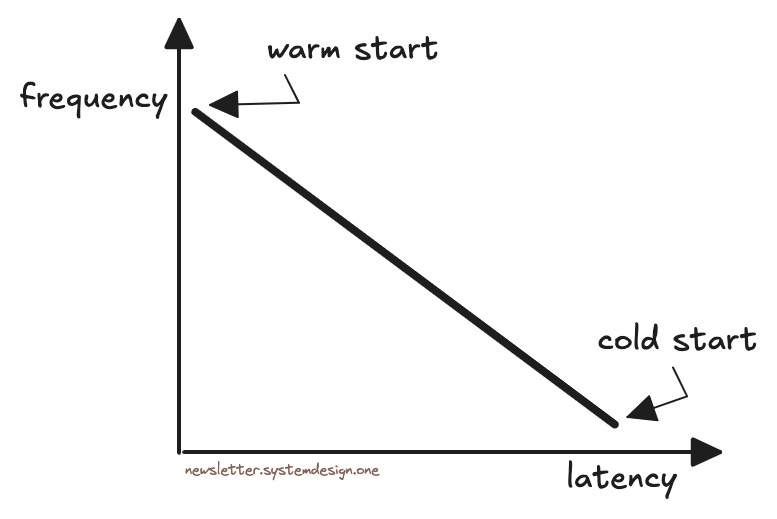

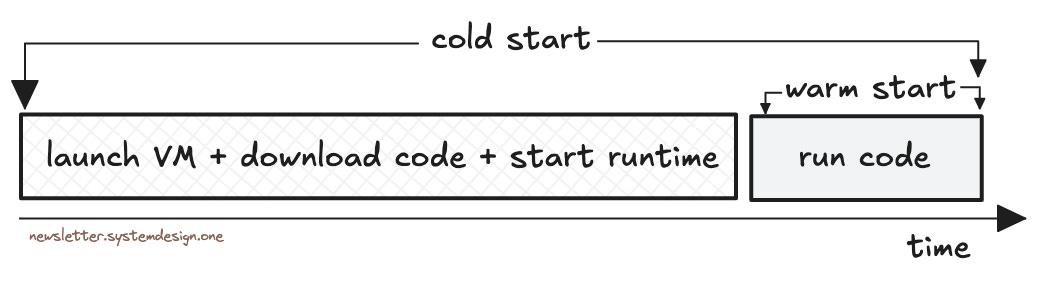

99% of requests are handled by an existing microVM.

Reusing an existing microVM for a Lambda function is called a warm start. It allows a request to be processed quickly.

But occasionally the existing microVMs run out of capacity, and new microVMs must be set up.

Creating a new microVM to handle a request is called a cold start.

Yet a cold start takes extra time before it can serve requests because:

the microVM must be launched

the code must be initialized
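The warm/cold decision can be sketched as a pool per function, assuming a single worker and idle microVMs that are never reclaimed: a request reuses an idle microVM when one exists and pays for launch and initialization only when none does. `FunctionPool` and its methods are hypothetical.

```python
class FunctionPool:
    """Toy pool of microVMs for one function: reuse if possible, else create."""

    def __init__(self):
        self.idle = []  # initialized microVMs waiting for work
        self.next_id = 0
        self.cold_starts = 0
        self.warm_starts = 0

    def _create_microvm(self):
        # Cold path: launch the microVM and initialize the function code.
        self.next_id += 1
        self.cold_starts += 1
        return f"vm-{self.next_id}"

    def acquire(self):
        if self.idle:
            self.warm_starts += 1
            return self.idle.pop()  # Warm start: reuse an existing microVM.
        return self._create_microvm()

    def release(self, vm):
        self.idle.append(vm)  # Keep it around for the next request.
```

A second request that arrives while the first is still running cannot reuse its microVM, so bursts of concurrent traffic are what trigger cold starts.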

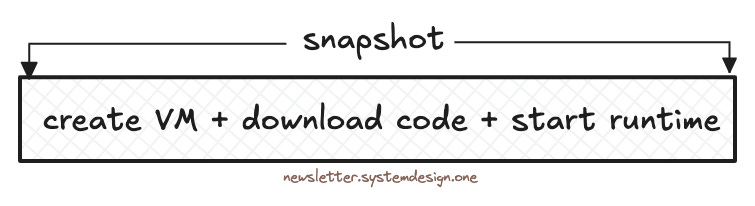

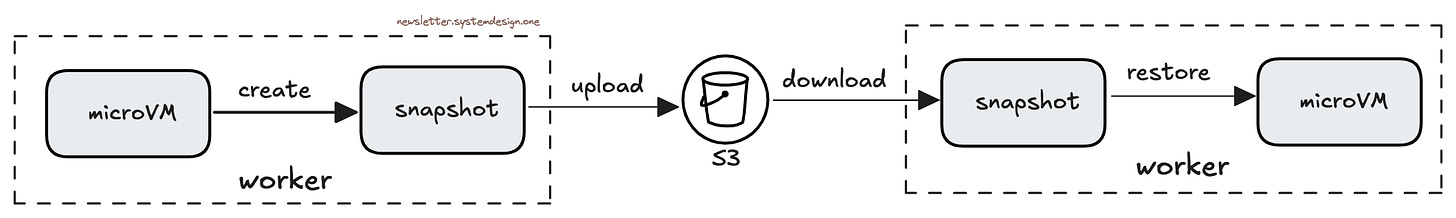

So they create a microVM snapshot and restore it when a cold start occurs.

In other words, an actual running microVM gets delivered to the worker instead of the code.

It reduces the cold start latency by 90%.
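As an in-process analogy only (a real microVM snapshot captures the memory and device state of the whole VM, not a Python object), the sketch below runs the expensive initialization once, keeps a copy of the initialized state, and serves each cold start by restoring that copy. `Runtime`, `take_snapshot`, and `restore` are hypothetical names.

```python
import copy


class Runtime:
    """Hypothetical initialized function state (loaded code, warmed caches)."""

    init_calls = 0  # how many times the expensive initialization ran

    def __init__(self):
        Runtime.init_calls += 1
        # Stand-in for slow startup work, e.g. loading libraries or config.
        self.cache = {i: i * i for i in range(1000)}

    def handle(self, x):
        return self.cache.get(x)


def take_snapshot():
    # Initialize once, then capture the fully initialized state.
    return copy.deepcopy(Runtime())


def restore(snapshot):
    # A cold start copies the snapshot; deepcopy does not re-run __init__.
    return copy.deepcopy(snapshot)
```

Each restored copy is independent, so concurrent cold starts do not share mutable state, yet none of them repeats the initialization work.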

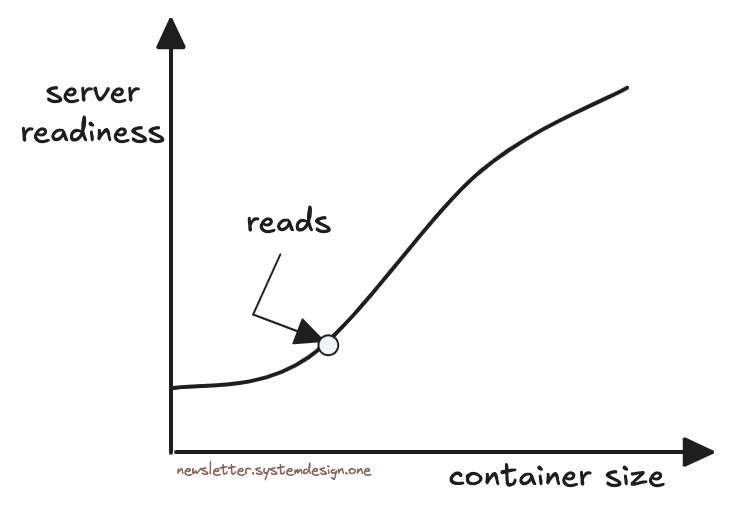

Lambda also accepts container images (with the function code) as deployment packages.

Yet only a subset of a container image is needed to start serving requests. (Evidence: the Slacker paper.)

So they split the container image into chunks that can be stored and accessed at fine granularity. Then they download only the subset of the image needed to serve requests. Put simply, the container is lazy-loaded.

This reduces latency and bandwidth usage.
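A minimal sketch of lazy loading, assuming fixed-size chunks addressed by their SHA-256 digest and a dict standing in for remote storage: the image is chunked up front, and a reader downloads a chunk only the first time a read touches it. The 4-byte chunk size is for the demo only, and `make_chunks` and `LazyImage` are hypothetical names.

```python
import hashlib

CHUNK_SIZE = 4  # Tiny for the demo; real chunks would be far larger.


def make_chunks(image: bytes, size: int = CHUNK_SIZE):
    """Split an image into fixed-size chunks keyed by SHA-256 digest."""
    store, manifest = {}, []
    for start in range(0, len(image), size):
        chunk = image[start:start + size]
        digest = hashlib.sha256(chunk).hexdigest()
        store[digest] = chunk
        manifest.append(digest)  # Chunk order needed to rebuild the image.
    return store, manifest


class LazyImage:
    """Fetches a chunk from the remote store only when first read."""

    def __init__(self, store, manifest, size=CHUNK_SIZE):
        self.store, self.manifest, self.size = store, manifest, size
        self.local = {}  # chunk index -> bytes already downloaded

    def read(self, offset, length):
        first = offset // self.size
        last = (offset + length - 1) // self.size
        for i in range(first, last + 1):
            if i not in self.local:
                self.local[i] = self.store[self.manifest[i]]  # "Download".
        data = b"".join(self.local[i] for i in range(first, last + 1))
        start = offset - first * self.size
        return data[start:start + length]
```

Reading 4 bytes at offset 5 of a 20-byte image touches only chunks 1 and 2; the other three chunks are never downloaded unless something reads them.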

Besides, a container image consists of layers, and different layers often contain the same data. So they identify the shared data and deliver it only once.
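The sharing idea can be sketched with content hashing, assuming identical chunks produce identical SHA-256 digests and the worker keeps a set of digests it already holds: a chunk that appears in several layers, or was cached earlier, is downloaded once. The function names and the 4-byte chunk size are illustrative assumptions.

```python
import hashlib


def chunk_digests(data: bytes, size: int = 4):
    """SHA-256 digest of each fixed-size chunk of a layer."""
    return [hashlib.sha256(data[i:i + size]).hexdigest()
            for i in range(0, len(data), size)]


def chunks_to_download(layers, cached):
    """Digests not yet held locally, each listed once across all layers."""
    needed = []
    seen = set(cached)
    for layer in layers:
        for digest in chunk_digests(layer):
            if digest not in seen:  # Skip chunks already cached or queued.
                seen.add(digest)
                needed.append(digest)
    return needed
```

With two layers `b"AAAABBBB"` and `b"AAAACCCC"`, the `AAAA` chunk is fetched once, so three chunks are downloaded instead of four.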

TL;DR

Invoke service: routes requests

Assignment service: tracks workers running a specific Lambda function

Worker: server running Lambda functions

AWS Lambda handles more than 10 trillion requests a month.

Yet this case study shows the fundamentals haven’t changed much over time.
