How Instagram Scaled to 2.5 Billion Users

#49: Break Into Instagram Scalability Techniques (7 minutes)

This post outlines how Instagram scaled its infrastructure. If you want to learn more, find references at the bottom of the page.

Note: This post is based on my research and may differ from real-world implementation.

Once upon a time, 2 Stanford graduates decided to make a location check-in app.

Yet they noticed photo sharing was the most used feature of their app.

So they pivoted to create a photo-sharing app and called it Instagram.

As more users joined in the early days, they bought new hardware to scale.

But scaling vertically became expensive after a specific point.

Yet their growth was skyrocketing.

They hit 30 million users in less than 2 years.

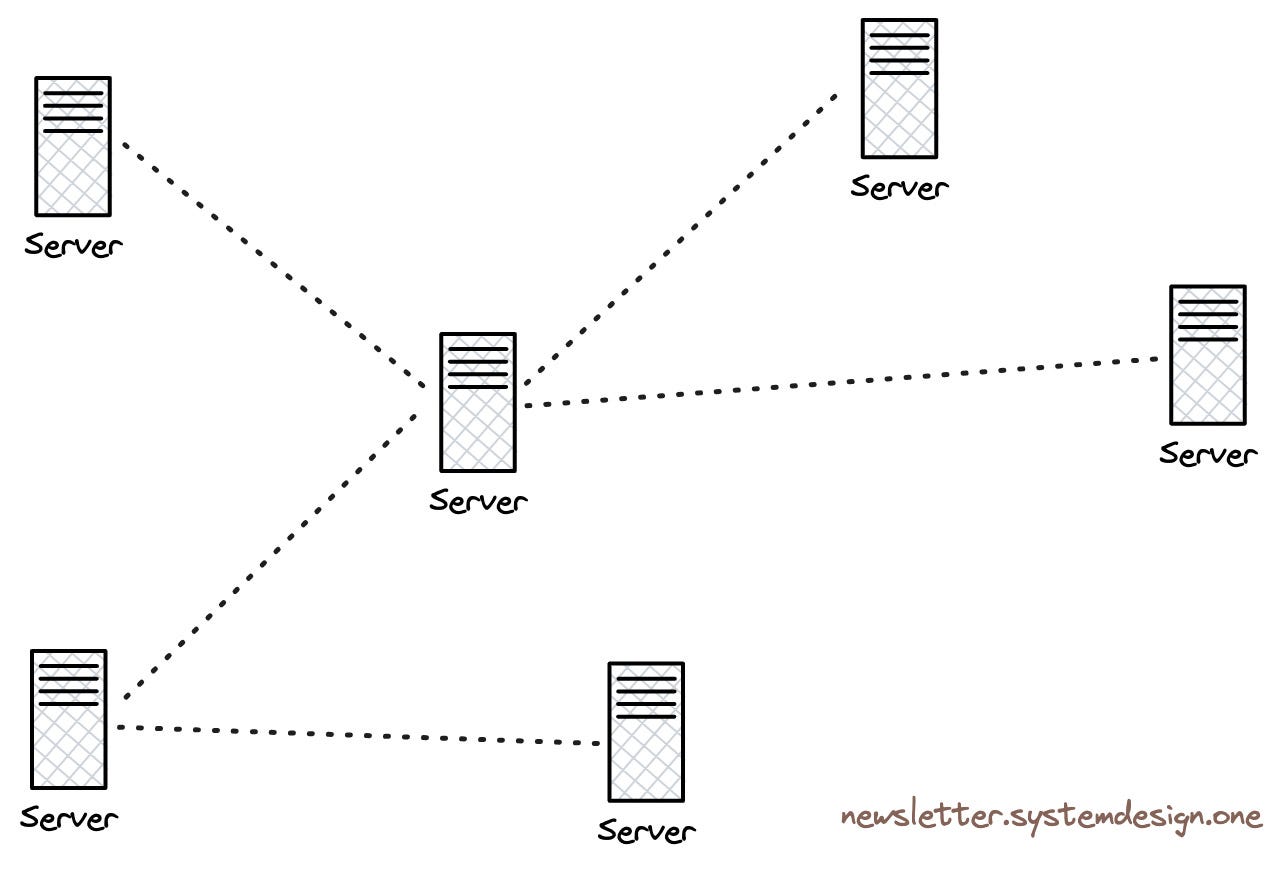

So they scaled out by adding new servers.

Although this temporarily solved their scalability issues, hypergrowth brought new problems.

Here are some of them:

1. Resource Usage:

More hardware allowed them to scale out.

Yet each server wasn’t used to maximum capacity.

Put simply, resources got wasted as their server throughput wasn’t high.

2. Data Consistency:

They installed data centers across the world to handle massive traffic.

And users need to see consistent data for a good experience.

But keeping data consistent became difficult with many data centers.

3. Performance:

They run Python on the application server.

This means they run multiple processes instead of threads, because the global interpreter lock (GIL) lets only one thread execute Python bytecode at a time.

So there were some performance limitations.

Instagram Infrastructure

The smart engineers at Instagram used simple ideas to solve these difficult problems.

Here’s how they provide extreme scalability:

1. Resource Usage:

Python is beautiful.

But C is faster.

So they rewrote stable, heavily used functions in Cython and C/C++.

It reduced their CPU usage.

Also, they didn’t buy expensive hardware to scale up.

Instead, they reduced the CPU instructions needed to finish a task.

This means running more efficient code.

And thus handling more users on each server.

Besides, the number of Python processes on a server is limited by the available system memory.

Each process has private memory and access to shared memory.

That means more processes can run if common data objects get moved to shared memory.

So they moved data objects from private memory to shared memory if a single copy was enough.

They also removed unused code to reduce the amount of code in memory.
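Here is a minimal sketch of the shared-memory idea. The source doesn't say which mechanism Instagram used, so this swaps in Python's standard `multiprocessing.shared_memory` module as an assumption. `CONFIG_BYTES`, `publish`, `read`, and `demo` are hypothetical names for illustration. For simplicity the second handle is opened in the same process, but a worker process would attach by name the same way.

```python
from multiprocessing import shared_memory

# Hypothetical read-only data that every worker process needs.
CONFIG_BYTES = b"feature_flags=stories,reels;max_upload_mb=100"


def publish(data: bytes) -> shared_memory.SharedMemory:
    """Copy the data once into a named shared memory block."""
    block = shared_memory.SharedMemory(create=True, size=len(data))
    block.buf[:len(data)] = data
    return block


def read(name: str, length: int) -> bytes:
    """Attach to an existing block by name and read from it.

    The returned bytes object is a copy made only so the result
    outlives the handle; a worker could read the buffer in place.
    """
    view = shared_memory.SharedMemory(name=name)
    try:
        return bytes(view.buf[:length])
    finally:
        view.close()


def demo() -> bytes:
    """Publish once, read through a second handle, then clean up."""
    owner = publish(CONFIG_BYTES)
    try:
        return read(owner.name, len(CONFIG_BYTES))
    finally:
        owner.close()
        owner.unlink()  # free the block once nobody needs it


if __name__ == "__main__":
    print(demo())
```

The point is that the data lives in one block, no matter how many processes attach to it. So the per-process private memory shrinks and more processes fit on the same server.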

2. Data Consistency:

They use Cassandra to support the Instagram user feed.

Cassandra nodes can be deployed in different data centers and kept in sync via eventual consistency.

Yet running Cassandra nodes on different continents makes latency worse and data consistency difficult.

So they don’t run a single Cassandra cluster for the whole world.

Instead, they run a separate cluster for each continent.

This means the Cassandra cluster in Europe stores the data of European users, while the Cassandra cluster in the US stores the data of US users.

Put another way, the number of replicas isn’t equal to the number of data centers.

Yet there’s a risk of downtime with separate Cassandra clusters for each region.

So they keep a single replica in another region and use quorum to route requests, at the cost of some extra latency.

Besides, they use Akkio for optimal data placement. It splits data into logical units with strong locality.

Imagine Akkio as a service that knows when and how to move data for low latency.

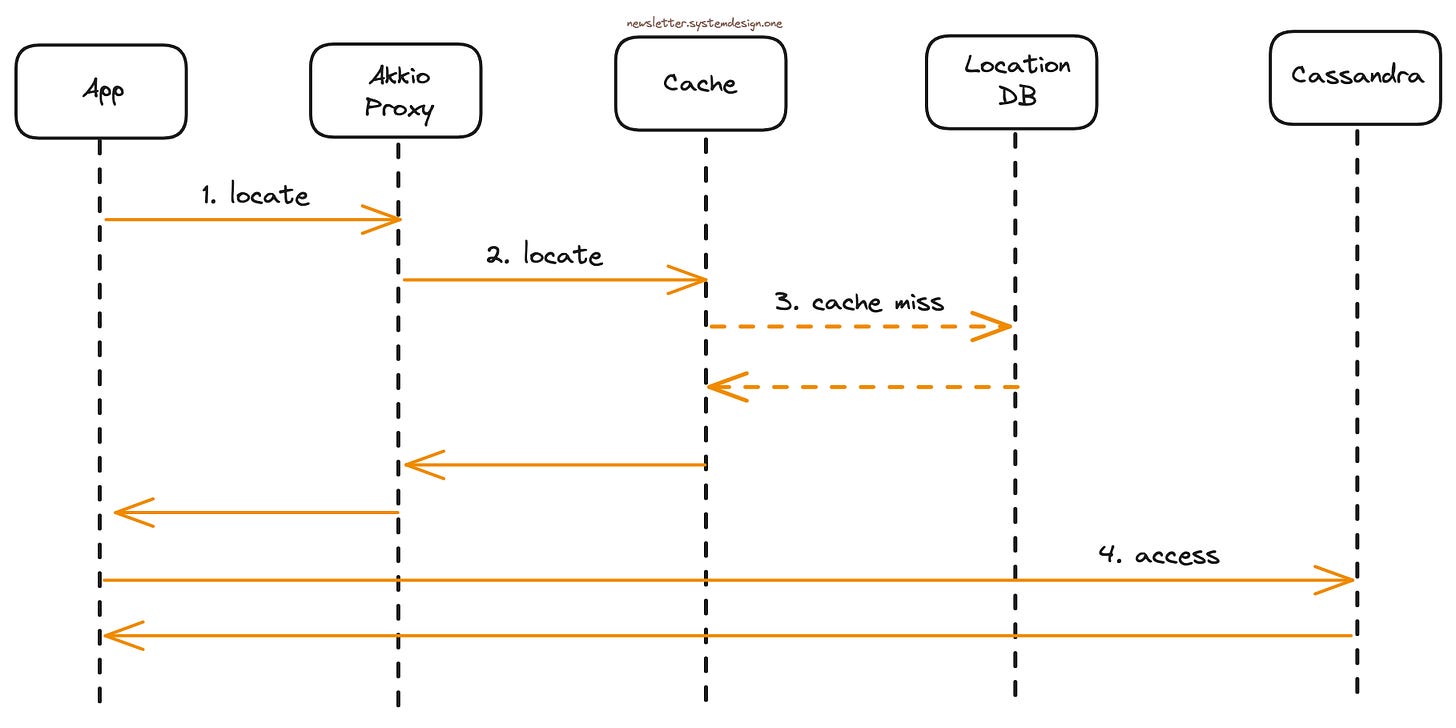

They route every user request through the global load balancer. And here’s how they find the right Cassandra cluster for a request:

1. The user sends a request to the app, but the app doesn’t know the user's Cassandra cluster.
2. The app forwards the request to the Akkio proxy.
3. The Akkio proxy asks the cache layer.
4. On a cache miss, the Akkio proxy talks to the location database. It’s replicated across every region because its dataset is small.
5. The Akkio proxy returns the user's Cassandra cluster information to the app.
6. The app directly accesses the right Cassandra cluster based on the response.
7. The app caches the information for future requests.
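The lookup steps above can be sketched as a toy model. This is not the real Akkio API: `AkkioProxy`, `App`, `locate`, and `read_feed` are hypothetical names, and plain dictionaries stand in for the cache layer, the location database, and the Cassandra clusters.

```python
from dataclasses import dataclass, field


@dataclass
class AkkioProxy:
    """Toy stand-in for the Akkio proxy (hypothetical interface)."""
    location_db: dict[str, str]  # user_id -> cluster name, replicated in every region
    cache: dict[str, str] = field(default_factory=dict)
    db_reads: int = 0  # counts how often the location database is hit

    def locate(self, user_id: str) -> str:
        cluster = self.cache.get(user_id)
        if cluster is None:  # cache miss: ask the location database
            self.db_reads += 1
            cluster = self.location_db[user_id]
            self.cache[user_id] = cluster
        return cluster


@dataclass
class App:
    proxy: AkkioProxy
    clusters: dict[str, dict[str, list[str]]]  # cluster name -> user_id -> feed
    local_cache: dict[str, str] = field(default_factory=dict)

    def read_feed(self, user_id: str) -> list[str]:
        cluster = self.local_cache.get(user_id)
        if cluster is None:
            # The app doesn't know the cluster yet, so it asks the proxy
            # and caches the answer for future requests.
            cluster = self.proxy.locate(user_id)
            self.local_cache[user_id] = cluster
        # Direct access to the right cluster.
        return self.clusters[cluster][user_id]


if __name__ == "__main__":
    proxy = AkkioProxy(location_db={"alice": "eu"})
    app = App(proxy=proxy, clusters={"eu": {"alice": ["photo-1"]}, "us": {}})
    print(app.read_feed("alice"), proxy.db_reads)
    print(app.read_feed("alice"), proxy.db_reads)  # second call skips the proxy
```

Only the first request for a user pays for the proxy round trip. Later requests are served from the app's own cache.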

The Akkio requests take around 10 ms. So the extra latency is tolerable.

They also migrate a user’s data if the user moves to another continent and settles down there.

And here’s how that migration works:

1. The Akkio proxy knows the user's Cassandra cluster location from the generated response.
2. They maintain a counter in the access database. It records the number of accesses from a specific region for each user.
3. The Akkio proxy increments the access database counter with each request. They could find the user's location from the IP address.
4. Akkio migrates the user data to another Cassandra cluster if the counter exceeds a limit.
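A minimal sketch of the counter-based migration described above. The source gives no actual limit, so `MIGRATION_THRESHOLD` is a made-up value. `AccessTracker` and `record_access` are hypothetical names, and updating a dictionary entry stands in for the real data copy between clusters.

```python
from collections import Counter, defaultdict

MIGRATION_THRESHOLD = 100  # hypothetical limit; the source gives no number


class AccessTracker:
    """Toy stand-in for the access database plus the migration decision."""

    def __init__(self, home: dict[str, str], threshold: int = MIGRATION_THRESHOLD):
        self.home = dict(home)  # user_id -> region of the user's current cluster
        self.threshold = threshold
        self.counts: dict[str, Counter] = defaultdict(Counter)  # user_id -> region -> accesses

    def record_access(self, user_id: str, region: str) -> bool:
        """Count one access; return True if it triggered a migration."""
        self.counts[user_id][region] += 1
        is_remote = region != self.home[user_id]
        if is_remote and self.counts[user_id][region] > self.threshold:
            self.home[user_id] = region  # stand-in for moving the user's data
            self.counts[user_id].clear()  # start counting afresh after the move
            return True
        return False


if __name__ == "__main__":
    tracker = AccessTracker({"bob": "us"}, threshold=3)
    for _ in range(4):
        migrated = tracker.record_access("bob", "eu")
    print(migrated, tracker.home["bob"])
```

A threshold keeps a short trip abroad from triggering an expensive migration. Only sustained access from another region moves the data.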

Besides, they store user information, friendships, and picture metadata in PostgreSQL.

Yet they get more read requests than write requests.

So they run PostgreSQL in a leader-follower replication topology.

They route write requests to the leader, while read requests go to a follower in the same data center.
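A minimal sketch of that read/write split. `Node`, `Router`, and the node names are hypothetical. Treating every `SELECT` as a read and falling back to the leader when no local follower exists are simplifying assumptions, not details from the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    data_center: str
    is_leader: bool = False


class Router:
    """Sends writes to the leader and reads to a follower in the caller's data center."""

    def __init__(self, nodes: list[Node]):
        self.leader = next(n for n in nodes if n.is_leader)
        self.followers = [n for n in nodes if not n.is_leader]

    def route(self, sql: str, client_dc: str) -> Node:
        # Assumption: any statement starting with SELECT is a read.
        is_read = sql.lstrip().upper().startswith("SELECT")
        if not is_read:
            return self.leader
        for follower in self.followers:
            if follower.data_center == client_dc:
                return follower
        # Assumption: with no local follower, reads fall back to the leader.
        return self.leader


if __name__ == "__main__":
    router = Router([
        Node("pg-leader", "us-east", is_leader=True),
        Node("pg-follower-1", "us-east"),
        Node("pg-follower-2", "eu-west"),
    ])
    print(router.route("SELECT * FROM users WHERE id = 1", "eu-west").name)
    print(router.route("INSERT INTO likes VALUES (1, 2)", "eu-west").name)
```

Since reads outnumber writes, adding followers scales read capacity without touching the single write path.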