How Facebook Scaled Live Video to a Billion Users

#47: Break Into Live Streaming Architecture (6 minutes)


This post outlines Facebook’s live video architecture. If you want to learn more, find the references at the bottom of the page.


Note: This post is based on my research and may differ from real-world implementation.

July 2018 - Thailand.

A group of boys and their coach had a football training session.

And they decided to go on an outing for team building.

So they visited the Tham Luang cave.

But heavy rains flooded the cave and trapped them inside.

And the rescue was difficult because of the narrow cave passages.

18 days passed…

A rescue effort with an international team started.

And it was streamed on Facebook Live.


A Facebook live video is a one-to-many stream. That means anyone with access to the live video can watch it.

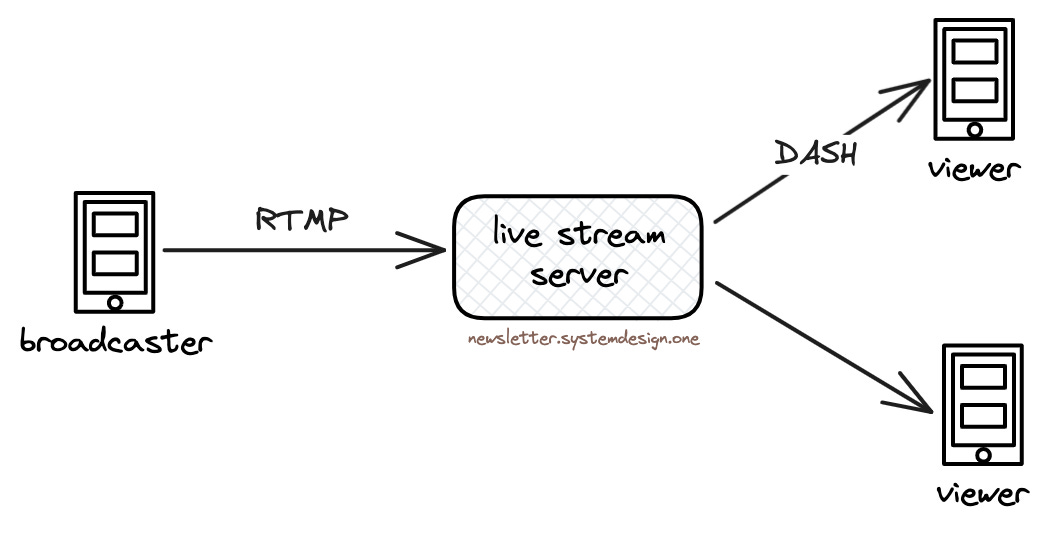

The broadcaster sends live video to Facebook’s infrastructure, which processes it and forwards it to viewers.

They use the Real-Time Messaging Protocol (RTMP) to send live video from the broadcaster to Facebook’s infrastructure. RTMP runs over TCP and splits the live video into separate audio and video streams.
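The audio/video split can be pictured with a small sketch. It assumes the incoming bytes have already been reassembled from RTMP chunks into whole messages. In the RTMP specification, message type ID 8 carries audio and type ID 9 carries video. The `RtmpMessage` class and `split_streams` function are hypothetical names for illustration, not Facebook’s code.

```python
from dataclasses import dataclass
from typing import List, Tuple

AUDIO_MESSAGE = 8  # RTMP message type ID for audio data
VIDEO_MESSAGE = 9  # RTMP message type ID for video data


@dataclass
class RtmpMessage:
    """Hypothetical, already de-chunked RTMP message."""
    type_id: int
    timestamp_ms: int
    payload: bytes


def split_streams(messages: List[RtmpMessage]) -> Tuple[List[RtmpMessage], List[RtmpMessage]]:
    """Route each message to the audio or video stream by its message type ID."""
    audio, video = [], []
    for msg in messages:
        if msg.type_id == AUDIO_MESSAGE:
            audio.append(msg)
        elif msg.type_id == VIDEO_MESSAGE:
            video.append(msg)
        # Commands, metadata, and control messages are ignored in this sketch.
    return audio, video


incoming = [
    RtmpMessage(VIDEO_MESSAGE, 0, b"\x17"),
    RtmpMessage(AUDIO_MESSAGE, 0, b"\xaf"),
    RtmpMessage(VIDEO_MESSAGE, 33, b"\x27"),
    RtmpMessage(20, 0, b""),  # type 20 is an AMF0 command message
]
audio, video = split_streams(incoming)
```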

They run a live stream server that transcodes the live video into different bit rates.

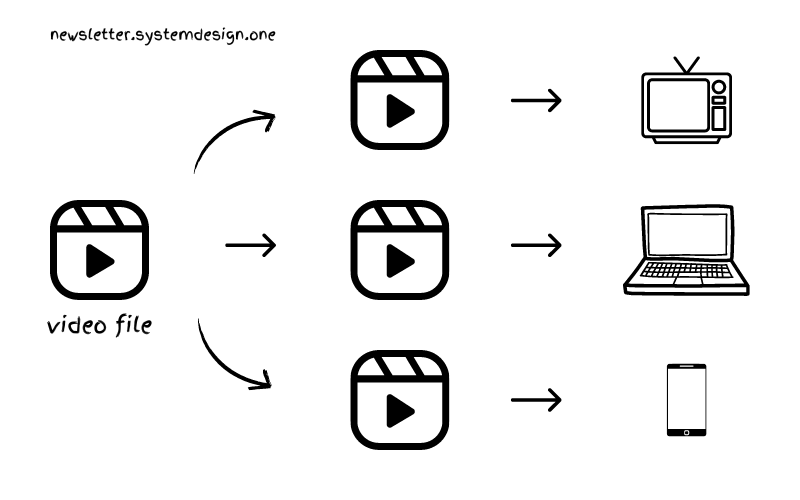

Transcoding is the process of converting a video into different formats. It’s necessary because different client devices support different formats. The bit rate is the amount of data in each second of video, so a lower bit rate means a smaller stream that suits slower networks.
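Because bit rate is data per second, the size of each segment follows directly from it. Here is a quick sketch using a hypothetical rendition ladder. The numbers are illustrative; the post doesn’t say which bit rates Facebook uses.

```python
# Hypothetical rendition ladder in kilobits per second (illustrative only).
RENDITIONS_KBPS = {"1080p": 4000, "720p": 2500, "480p": 1000, "240p": 400}


def segment_size_kb(bitrate_kbps: float, seconds: float = 1.0) -> float:
    """Kilobytes in one segment: kilobits/second * seconds / 8 bits per byte."""
    return bitrate_kbps * seconds / 8


for name, kbps in RENDITIONS_KBPS.items():
    print(f"{name}: {segment_size_kb(kbps):.1f} KB per 1-second segment")
```

With this ladder, a viewer on the 240p rendition downloads one tenth as much data per second as a viewer on 1080p.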

Then they package the video into 1-second segments in MPEG-DASH format and send those to viewers.

DASH (Dynamic Adaptive Streaming over HTTP) is a streaming protocol over HTTP. It consists of a manifest file and media files. Think of the manifest file as a list of pointers to media files. They update the manifest file whenever a new video segment is created, so the viewer can request the media files in the correct order.
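The sketch below models the manifest as a list of pointers. It is deliberately simplified and does not follow the real DASH MPD format, which is XML. The `LiveManifest` class, the URL pattern, and the sliding window of recent segments are all assumptions for illustration.

```python
from typing import List


class LiveManifest:
    """Simplified stand-in for a live DASH manifest: ordered pointers to media segments."""

    def __init__(self, stream_id: str, window: int = 5):
        self.stream_id = stream_id
        self.window = window  # assumption: only the most recent segments are listed
        self.next_number = 0
        self.segments: List[str] = []

    def add_segment(self) -> str:
        """Called whenever a new 1-second segment is ready; updates the manifest."""
        url = f"/live/{self.stream_id}/seg-{self.next_number}.m4s"  # hypothetical URL pattern
        self.next_number += 1
        self.segments.append(url)
        self.segments = self.segments[-self.window:]
        return url

    def segments_after(self, last_fetched: str) -> List[str]:
        """Segments the viewer hasn't fetched yet, in playback order."""
        if last_fetched in self.segments:
            return self.segments[self.segments.index(last_fetched) + 1:]
        return list(self.segments)  # viewer just joined or fell behind: start from the window


manifest = LiveManifest("abc", window=3)
for _ in range(4):
    manifest.add_segment()
```

A viewer polls the manifest and then requests each listed segment it hasn’t played yet. This keeps playback in order.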

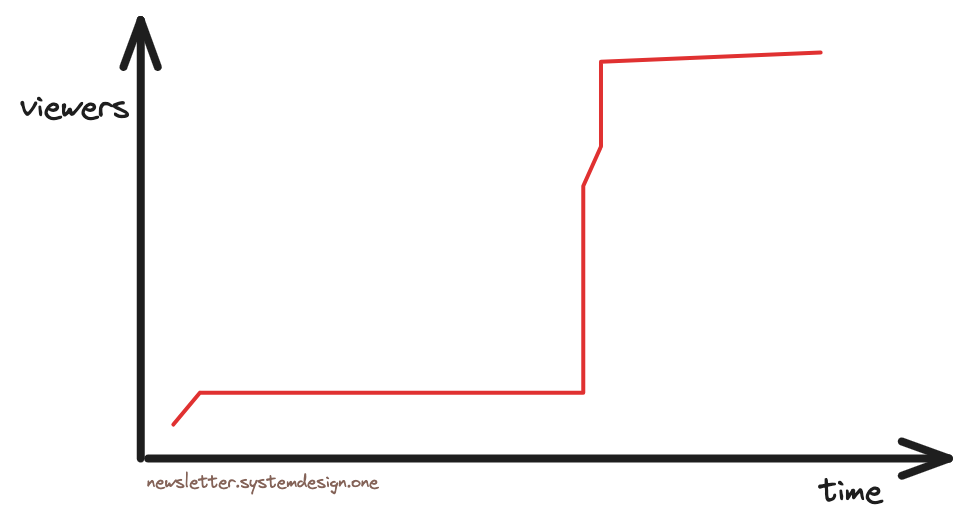

This rescue operation became extremely popular on Facebook.

And 7.1 million people across the world watched it concurrently.

So it created a huge traffic spike.

Live Streaming Architecture

Scaling live video is a hard problem.

Here’s how Facebook solves it:

1. Scalability

There’s a risk of the live stream server failing while processing the video.

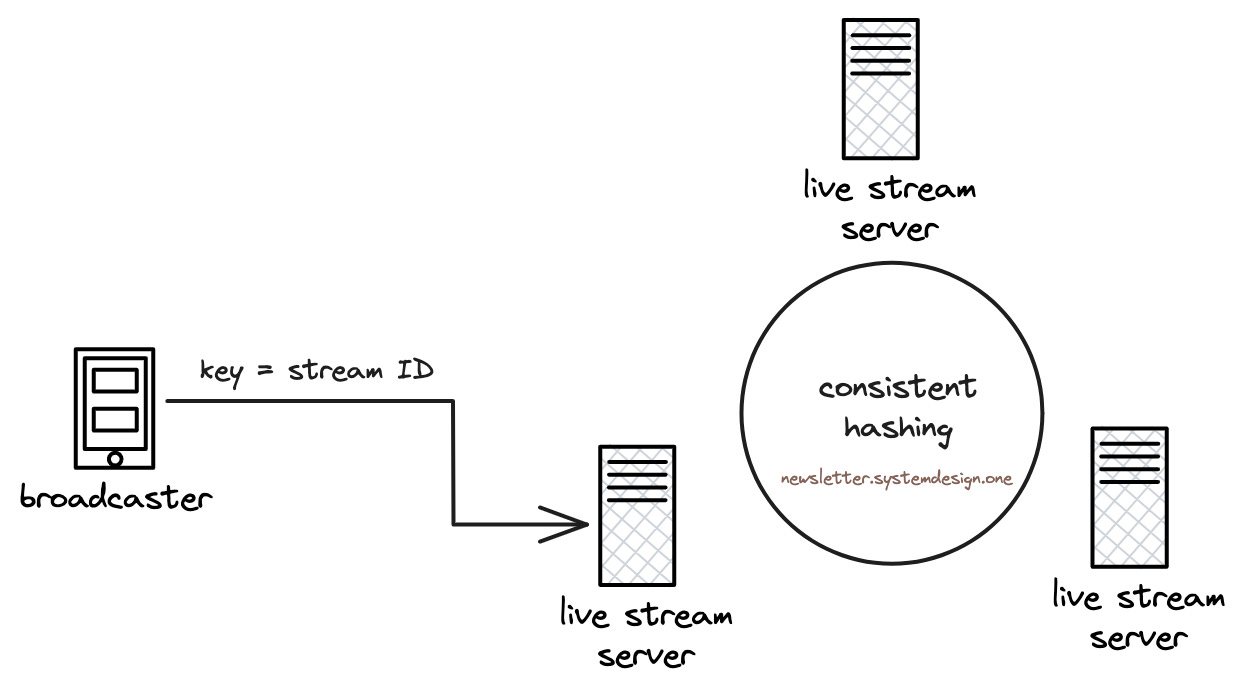

So they run many live stream servers and use consistent hashing to select one. If the active server fails, the next server on the hash ring is selected automatically.

They also use the stream ID as the consistent hash key. Hence a broadcaster whose network connection drops reconnects to the same server.
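Here is a minimal consistent-hashing sketch. It assumes hypothetical server names (`live-1` to `live-3`), MD5 as the ring hash, and 100 virtual nodes per server; the post doesn’t specify Facebook’s hash function or ring layout. It shows both behaviors described above. The same stream ID maps to the same server on reconnect, and a failed server’s streams move to the next healthy server on the ring.

```python
import bisect
import hashlib
from typing import Iterable, Set


def stable_hash(key: str) -> int:
    # Python's built-in hash() is randomized per process, so use a fixed digest instead.
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent hash ring; each server gets several virtual nodes to spread keys evenly."""

    def __init__(self, servers: Iterable[str], vnodes: int = 100):
        self.ring = sorted((stable_hash(f"{s}#{i}"), s) for s in servers for i in range(vnodes))
        self.points = [point for point, _ in self.ring]

    def lookup(self, stream_id: str, healthy: Set[str]) -> str:
        """Walk clockwise from the stream ID's position to the first healthy server."""
        start = bisect.bisect(self.points, stable_hash(stream_id))
        for step in range(len(self.ring)):
            server = self.ring[(start + step) % len(self.ring)][1]
            if server in healthy:
                return server
        raise RuntimeError("no healthy live stream server")


servers = {"live-1", "live-2", "live-3"}  # hypothetical server names
ring = HashRing(servers)
first = ring.lookup("stream-42", servers)
after_reconnect = ring.lookup("stream-42", servers)          # same key, same server
after_failure = ring.lookup("stream-42", servers - {first})  # next healthy server on the ring
```

Only streams that lived on the failed server move; every other stream keeps its server. That is the advantage over a plain `hash(stream_id) % number_of_servers`, where removing one server reshuffles almost every stream.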

2. Network Bandwidth

Viewers have different network bandwidths, especially those watching over mobile internet.

So they use adaptive bitrate streaming. This means the live video is broken into small segments, and each segment is encoded at several resolutions and bit rates.

Simply put, the video quality changes based on each viewer’s network bandwidth.
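A common client-side approach picks a rendition from measured throughput. Here is a sketch of that heuristic. The bit rate ladder and the 0.8 safety margin are hypothetical, and the post doesn’t say which adaptation algorithm Facebook’s players use.

```python
from typing import Sequence

# Hypothetical bit rate ladder in kbps and safety margin (not from the post).
LADDER_KBPS = (400, 1000, 2500, 4000)
SAFETY = 0.8  # leave headroom so throughput dips don't stall playback


def pick_bitrate(measured_kbps: float, ladder: Sequence[int] = LADDER_KBPS) -> int:
    """Highest rendition whose bit rate fits within a fraction of the measured throughput."""
    budget = measured_kbps * SAFETY
    fitting = [b for b in sorted(ladder) if b <= budget]
    return fitting[-1] if fitting else min(ladder)  # fall back to the lowest quality
```

The player would re-run this choice before each segment request, so quality steps up or down as bandwidth changes.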

They also measure the broadcaster’s upload bandwidth and adjust the video resolution to match it.
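On the broadcaster side, here is a sketch that assumes the client measures bytes uploaded per interval and smooths them with an exponentially weighted moving average (EWMA). The tiers, `alpha`, and the `UploadEstimator` class are hypothetical. The post only says that the resolution is matched to the measured upload bandwidth.

```python
from typing import Optional

# Hypothetical encoder tiers: (minimum upload kbps, resolution), highest first.
UPLOAD_TIERS = [(2500, "720p"), (1000, "480p"), (0, "360p")]


class UploadEstimator:
    """Smooths measured upload throughput with an exponentially weighted moving average."""

    def __init__(self, alpha: float = 0.3):
        self.alpha = alpha  # weight of the newest sample
        self.kbps: Optional[float] = None

    def observe(self, bytes_sent: int, seconds: float) -> float:
        sample = bytes_sent * 8 / 1000 / seconds  # bytes -> kilobits per second
        if self.kbps is None:
            self.kbps = sample
        else:
            self.kbps = self.alpha * sample + (1 - self.alpha) * self.kbps
        return self.kbps

    def resolution(self) -> str:
        """Highest tier the smoothed upload bandwidth can sustain."""
        if self.kbps is None:
            return UPLOAD_TIERS[-1][1]
        for min_kbps, res in UPLOAD_TIERS:
            if self.kbps >= min_kbps:
                return res
        return UPLOAD_TIERS[-1][1]


est = UploadEstimator()
est.observe(500_000, 1.0)  # 4000 kbps
first_choice = est.resolution()
est.observe(50_000, 1.0)   # network dips to 400 kbps
est.observe(50_000, 1.0)
second_choice = est.resolution()
```

Smoothing keeps a single slow interval from immediately dropping the resolution, while a sustained dip still does.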

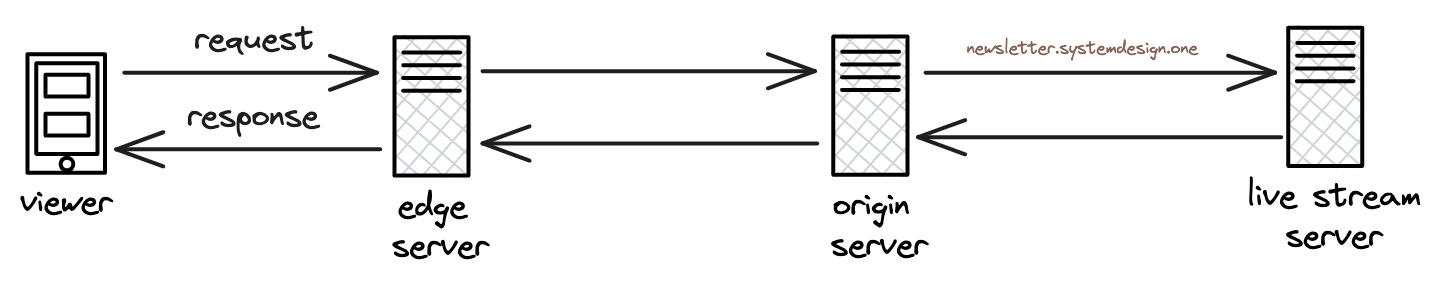

3. Latency

Viewers on another continent may experience high latency because of their distance from the live stream server.

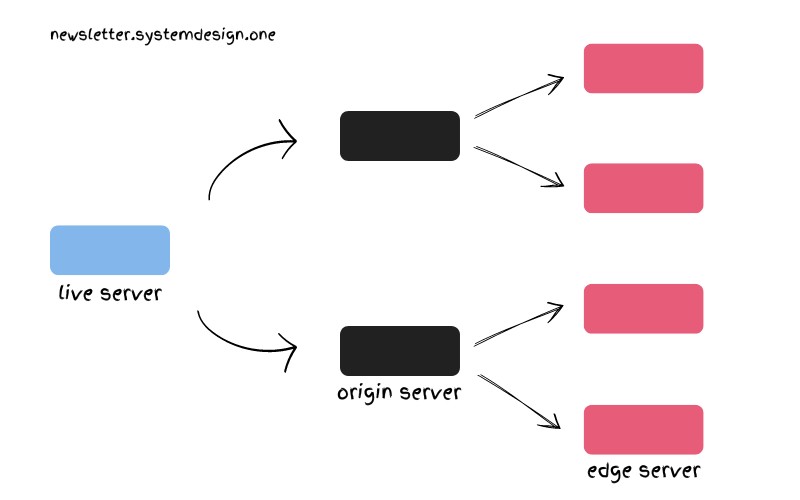

So they set up extra servers in a 2-level hierarchy.

They install edge servers close to the viewers. These cache the live video segments for low latency.

They also install origin servers between the edge servers and the live stream server to reduce the live stream server load.

Imagine each continent has an origin server, while each country has many edge servers.

Put another way, there’s a one-to-many relationship between an origin server and its edge servers, so many edge servers send requests to the same origin server.

Likewise, there’s a one-to-many relationship between a live stream server and its origin servers, so many origin servers send requests to the same live stream server.

So when a viewer requests a video segment, the edge server serves it if it’s cached. Otherwise, the request is forwarded to the origin server and, on a miss there, to the live stream server.
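The lookup path from edge server to origin server to live stream server can be modeled as a chain of caches. Here is a minimal sketch with hypothetical node names and unbounded in-memory caches. Real caches would evict old segments.

```python
from typing import Callable, Dict, Optional


class CacheNode:
    """One tier in the hierarchy: serve from the local cache, else ask the parent tier and keep a copy."""

    def __init__(self, name: str, parent: Optional["CacheNode"] = None,
                 source: Optional[Callable[[str], bytes]] = None):
        self.name = name
        self.parent = parent  # edge -> origin -> live stream server
        self.source = source  # only the live stream server produces segments
        self.cache: Dict[str, bytes] = {}
        self.misses = 0

    def get(self, segment_id: str) -> bytes:
        if segment_id in self.cache:
            return self.cache[segment_id]
        self.misses += 1
        if self.parent is not None:
            data = self.parent.get(segment_id)
        else:
            data = self.source(segment_id)
        self.cache[segment_id] = data
        return data


live = CacheNode("live", source=lambda seg: f"bytes-of-{seg}".encode())
origin = CacheNode("origin-europe", parent=live)
edge_fr = CacheNode("edge-france", parent=origin)
edge_de = CacheNode("edge-germany", parent=origin)

edge_fr.get("seg-1")  # misses everywhere, fetched from the live stream server
edge_de.get("seg-1")  # misses at this edge, hits the origin
edge_fr.get("seg-1")  # hits the edge cache
```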

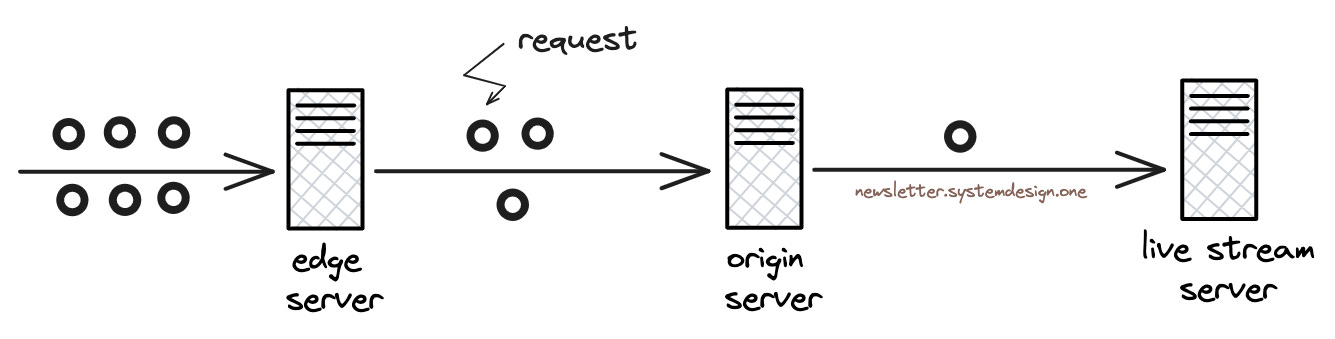

4. Thundering Herd

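An edge server can handle 200k requests per second, which reduces the traffic reaching the origin server.

But if many people watch a live video concurrently, there’s a risk of the thundering herd problem at the edge server: a flood of simultaneous requests for the same video segment misses the cache at once and overwhelms the servers behind it.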

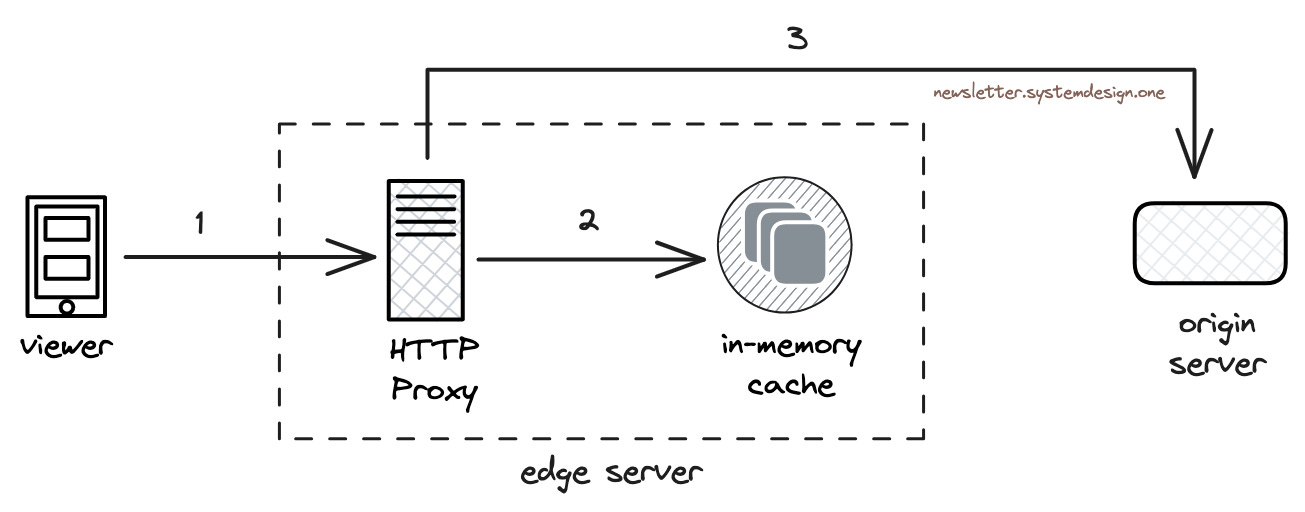

So they built the edge server with an HTTP proxy and a caching layer.

This means that when a viewer requests a video segment, the edge server first checks whether that segment is in its cache.

The HTTP proxy requests the segment from the origin server only if it’s not in the cache.

They also spread video segments across different cache servers within an edge server to balance the traffic.

The origin server is built with the same architecture, and it forwards the request to the live stream server on a cache miss.

The video segment then gets cached at each server to handle future requests.
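Inside one edge server, the proxy-plus-cache design and the spreading of segments across cache servers might look like the sketch below. It assumes each segment is assigned to a cache server by hashing its key. The post doesn’t say how Facebook distributes segments, and `EdgeServer` and `fetch_from_origin` are hypothetical names.

```python
import hashlib
from typing import Callable, Dict, List


class EdgeServer:
    """HTTP-proxy front end plus several cache servers; each segment key lives on one cache server."""

    def __init__(self, num_cache_servers: int, fetch_from_origin: Callable[[str], bytes]):
        self.caches: List[Dict[str, bytes]] = [{} for _ in range(num_cache_servers)]
        self.fetch_from_origin = fetch_from_origin
        self.origin_requests = 0

    def _cache_for(self, segment_key: str) -> Dict[str, bytes]:
        # Hash the key to pick a cache server, spreading different segments across them.
        digest = hashlib.sha1(segment_key.encode()).digest()
        return self.caches[int.from_bytes(digest[:4], "big") % len(self.caches)]

    def handle_request(self, segment_key: str) -> bytes:
        cache = self._cache_for(segment_key)
        if segment_key not in cache:  # cache miss: the proxy asks the origin server
            self.origin_requests += 1
            cache[segment_key] = self.fetch_from_origin(segment_key)
        return cache[segment_key]


edge = EdgeServer(4, fetch_from_origin=lambda key: key.encode())
for _ in range(100):
    edge.handle_request("stream-42/seg-1")
edge.handle_request("stream-42/seg-2")
```

This sequential sketch doesn’t handle many viewers missing the same segment at the same instant. That’s the case request coalescing addresses.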

Yet statistically, 2 out of 100 requests still reach the origin server.

At Facebook’s scale, that’s a big problem.
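A back-of-the-envelope calculation shows why. It uses the post’s numbers: 7.1 million concurrent viewers, 200k requests per second per edge server, and 2% of requests reaching the origin. It adds one assumption: each viewer fetches one 1-second segment per second, and manifest requests are ignored.

```python
import math

viewers = 7_100_000          # concurrent viewers (from the post)
segments_per_viewer_s = 1    # assumption: one 1-second segment per viewer per second
edge_capacity_rps = 200_000  # requests per second one edge server handles (from the post)
origin_fraction = 0.02       # 2 out of 100 requests reach the origin (from the post)

total_rps = viewers * segments_per_viewer_s
edge_servers_needed = math.ceil(total_rps / edge_capacity_rps)
origin_rps = total_rps * origin_fraction

print(f"{total_rps:,} requests/s at the edge, at least {edge_servers_needed} edge servers")
print(f"about {origin_rps:,.0f} requests/s still reach the origin servers")
```

Even with 98% of requests served at the edge, roughly 142,000 requests per second would reach the origin tier. In a live stream, those misses tend to arrive together, right when a new segment is published.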

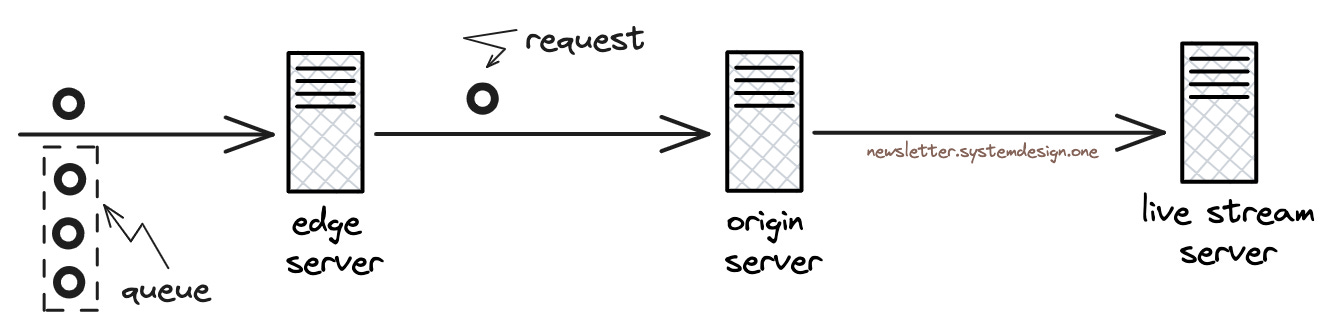

So they do request coalescing.

That means all concurrent requests for a specific video segment are held in a queue, while only a single request is forwarded to the server.

When the server responds, every request in the queue gets that video segment at once. This reduces the server load and prevents the thundering herd problem.
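Request coalescing is often called a "single-flight" pattern. Here is a minimal thread-based sketch that assumes one process and an in-memory map of in-flight requests. The names are hypothetical, and the post doesn’t describe Facebook’s implementation. Waiting callers share a `Future`, which plays the role of the queue described above.

```python
import threading
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict


class RequestCoalescer:
    """Forward only one request per key upstream; concurrent callers wait on the same result."""

    def __init__(self, fetch: Callable[[str], bytes]):
        self.fetch = fetch
        self.lock = threading.Lock()
        self.in_flight: Dict[str, Future] = {}  # key -> shared result; waiters act as the queue

    def get(self, key: str) -> bytes:
        with self.lock:
            future = self.in_flight.get(key)
            is_leader = future is None
            if is_leader:
                future = Future()
                self.in_flight[key] = future
        if is_leader:
            try:
                future.set_result(self.fetch(key))  # the single upstream request
            except Exception as exc:
                future.set_exception(exc)  # waiters see the same error
            finally:
                with self.lock:
                    del self.in_flight[key]
        return future.result()  # waiters block here until the leader's response arrives


upstream_calls = []
release = threading.Event()


def slow_origin_fetch(key: str) -> bytes:
    # Hypothetical stand-in for a slow upstream request; blocks until released so requests overlap.
    upstream_calls.append(key)
    release.wait(timeout=5)
    return f"bytes-of-{key}".encode()


coalescer = RequestCoalescer(slow_origin_fetch)
with ThreadPoolExecutor(max_workers=10) as pool:
    pending = [pool.submit(coalescer.get, "seg-7") for _ in range(10)]
    time.sleep(0.3)  # let all ten requests arrive while the first is still in flight
    release.set()
    results = [p.result() for p in pending]
```

Once the response arrives, the in-flight entry is removed. In the design above, later requests would then be served from the cache that the response populates.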

Many edge servers may also request the same video segment from an origin server concurrently. So they do request coalescing at the origin server too, to reduce the live stream server load.

They also replicate video segments across edge servers for better performance.

This case study shows that scalability must be built into the design at an early stage.

And Facebook Live remains one of the largest streaming services in the world.

As for the rescue, all 12 boys and their coach were safely brought out of the cave.
