How Load Balancing Algorithms Really Work ⭐

#72: Break Into Load Balancing Algorithms (3 Minutes)

This post outlines 6 popular load balancing algorithms. You will find references at the bottom of this page if you want to go deeper.

Note: This post is based on my research and may differ from real-world implementation.

Once upon a time, there lived 2 QA engineers named John and Paul.

They worked for a tech company named Hooli.

Although bright, they never got promoted.

So they were sad and frustrated.

Until one day, they had a smart idea to build a photo-sharing app.

And their growth skyrocketed every day.

So they scaled by installing more servers.

But uneven traffic distribution caused server overload.

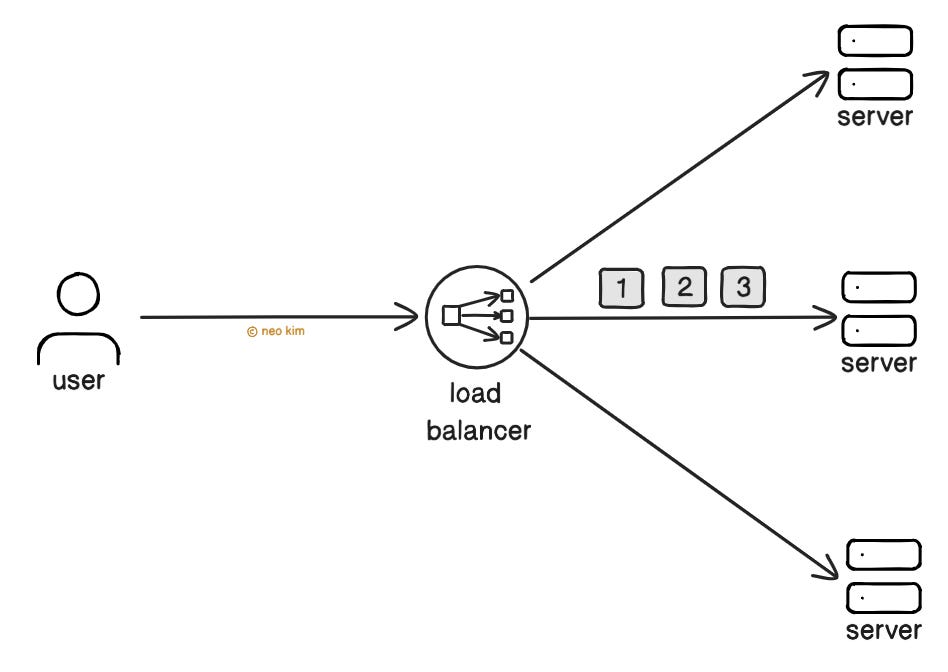

So they set up a load balancer for each service.

Think of a load balancer as a component that distributes traffic evenly among servers.

Yet each service has a different workload and usage pattern.

Onward.

Load Balancing Algorithms

Here’s how they load balance traffic across different services:

1. Round Robin

One weekend, their app started trending on the Play Store.

Because of this, many users tried to log in at the same time. Yet it was necessary to distribute traffic evenly among the auth servers.

So they installed the round robin algorithm on the load balancer.

Here’s how it works:

The load balancer keeps a list of servers

It then forwards requests to the servers in sequential order

Once it reaches the end of the list, it starts again from the first server.

This approach is simple to set up and understand. Yet it doesn’t consider how long a request takes, so slow requests might pile up on one server and overload it.
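The steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any specific load balancer does it. The class name `RoundRobinBalancer` and the server names are made up for the example.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in list order and wraps around at the end."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        # cycle() repeats the list forever: 1, 2, 3, 1, 2, 3, ...
        self._servers = cycle(list(servers))

    def pick(self):
        # Every request simply takes the next server in the sequence.
        return next(self._servers)


# Hypothetical auth servers, used only for illustration.
balancer = RoundRobinBalancer(["auth-1", "auth-2", "auth-3"])
picks = [balancer.pick() for _ in range(5)]
print(picks)
```

Notice that the balancer never looks at how busy a server is. That is the simplicity and the weakness mentioned above.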

Life was good.

2. Least Response Time

Until one day, a celebrity uploaded a photo on the app.

Because of that, millions of users checked their feed. Yet some servers handling the feed might be slow due to garbage collection and CPU pressure.

So they used the least response time algorithm to route the requests.

Here’s how it works:

The load balancer monitors the response time of servers

It then forwards the request to the server with the fastest response time

If 2 servers have the same latency, the server with the fewest connections gets the request

This approach keeps latency low, yet monitoring the servers adds overhead. Besides, short latency spikes might cause wrong routing decisions.
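Here is a rough sketch of that selection rule in Python. It assumes the load balancer already has a recent latency measurement and a connection count for each server; how those get measured is left out. The class, the method names, and the numbers are hypothetical.

```python
class LeastResponseTimeBalancer:
    """Picks the fastest server; ties go to the one with fewer connections."""

    def __init__(self, servers):
        self.latency_ms = {server: 0.0 for server in servers}
        self.connections = {server: 0 for server in servers}

    def record(self, server, latency_ms, connections):
        # Assumption: a monitor calls this with fresh measurements.
        self.latency_ms[server] = latency_ms
        self.connections[server] = connections

    def pick(self):
        # Compare by latency first, then by active connections.
        return min(
            self.latency_ms,
            key=lambda s: (self.latency_ms[s], self.connections[s]),
        )


balancer = LeastResponseTimeBalancer(["feed-1", "feed-2", "feed-3"])
balancer.record("feed-1", latency_ms=120.0, connections=4)
balancer.record("feed-2", latency_ms=45.0, connections=9)
balancer.record("feed-3", latency_ms=45.0, connections=2)

chosen = balancer.pick()
print(chosen)  # feed-2 and feed-3 tie on latency, feed-3 has fewer connections
```

A real setup would likely smooth the latency over several samples, so that a single spike doesn't flip the decision.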

Life was good again.

3. Weighted Round Robin

But one day, they noticed a massive spike in photo uploads.

Each photo gets processed to reduce storage costs and improve user experience. Yet processing some photos is complex and expensive.

So they installed the weighted round robin algorithm on the load balancer.

Here’s how it works:

The load balancer assigns a weight to each server based on its capacity

It then forwards requests based on server weight; the higher the weight, the more requests a server receives

Imagine weighted round robin as an extension of the round robin algorithm. It means servers with higher capacity get more requests in sequential order.

This approach offers better performance when servers have different capacities. Yet scaling needs manual updates to server weights, thus increasing operational costs.
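One simple way to sketch this in Python is to repeat each server in the rotation as many times as its weight. This is only one of several possible schedules, and the function name, server names, and weights are assumptions for the example.

```python
from itertools import cycle


def weighted_round_robin(weights):
    """Returns an endless iterator over servers, repeated by weight."""
    # A server with weight 3 appears 3 times in each full rotation.
    schedule = [
        server
        for server, weight in weights.items()
        for _ in range(weight)
    ]
    if not schedule:
        raise ValueError("need at least one server with a positive weight")
    return cycle(schedule)


# Hypothetical image-processing servers: the large one has 3x the capacity.
picker = weighted_round_robin({"img-large": 3, "img-small": 1})
picks = [next(picker) for _ in range(8)]
print(picks)
```

Out of every 4 requests, 3 go to `img-large` and 1 goes to `img-small`. The weights live in a plain dictionary, which shows why someone has to update them by hand when servers change.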

4. Adaptive

Their growth continued, so they added support for short videos.

A video gets transcoded into different formats for low bandwidth usage. Yet transcoding is expensive, and some videos could be lengthy.

So they installed the adaptive algorithm on the load balancer.

Here’s how it works:

An agent runs on each server and sends the server’s status to the load balancer in real time

The load balancer then routes the requests based on server metrics, such as CPU and memory usage

Put simply, servers with lower load receive more requests. It means better fault tolerance. Yet it’s complex to set up. Also, the agent adds extra overhead.
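The idea can be sketched in Python as follows. It assumes each agent reports CPU and memory usage as a fraction between 0 and 1, and that the load balancer combines them with equal weight. Both the scoring formula and the names are assumptions made for this example.

```python
class AdaptiveBalancer:
    """Routes to the server whose latest reported load is lowest."""

    def __init__(self):
        self.metrics = {}  # server -> (cpu, memory), each between 0.0 and 1.0

    def report(self, server, cpu, memory):
        # Assumption: the agent on each server calls this periodically.
        self.metrics[server] = (cpu, memory)

    def load_score(self, server):
        cpu, memory = self.metrics[server]
        # Assumed formula: equal weight for CPU and memory usage.
        return 0.5 * cpu + 0.5 * memory

    def pick(self):
        if not self.metrics:
            raise RuntimeError("no server has reported its status yet")
        return min(self.metrics, key=self.load_score)


balancer = AdaptiveBalancer()
balancer.report("video-1", cpu=0.90, memory=0.60)
balancer.report("video-2", cpu=0.30, memory=0.50)
balancer.report("video-3", cpu=0.50, memory=0.20)
first_pick = balancer.pick()

# video-3 starts transcoding a long video and reports a higher load.
balancer.report("video-3", cpu=0.95, memory=0.80)
second_pick = balancer.pick()
print(first_pick, second_pick)
```

The routing decision changes as soon as a server reports new metrics. That is the key difference from the static weights in weighted round robin.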

Let’s keep going!

5. Least Connections

Until one day, users started to binge-watch videos on the app.

This means long-lived server connections. Yet a server can handle only a limited number of them.

So they installed the least connections algorithm on the load balancer.

Here’s how it works:

The load balancer tracks the active connections to the server

It then routes requests to the server with the fewest active connections

It ensures a server doesn’t get overloaded during peak traffic. Yet tracking the number of active connections makes it complex. Also, session affinity needs extra logic.
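A minimal sketch of the bookkeeping in Python might look like this. It assumes the load balancer is told when a connection opens and when it closes. The class and server names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Tracks active connections and routes to the least busy server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Pick the server with the fewest active connections.
        # On a tie, the server listed first wins.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Called when a connection closes.
        if self.active[server] > 0:
            self.active[server] -= 1


balancer = LeastConnectionsBalancer(["stream-1", "stream-2"])
first = balancer.acquire()   # both idle, so the first server is used
second = balancer.acquire()  # stream-1 is busy, so stream-2 is used
balancer.release(first)      # the viewer on stream-1 leaves
third = balancer.acquire()   # stream-1 is now the least busy
print(first, second, third)
```

The counter has to stay accurate even when connections drop unexpectedly. That is part of the complexity mentioned above.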

Life was good again.

6. IP Hash

But one day, they noticed a spike in usage of their chat service.

And session state is necessary to track conversations in real time.

So they installed the IP hash algorithm on the load balancer. It allows sticky sessions by routing requests from a specific user to the same server.

Here’s how it works:

The load balancer uses a hash function to convert the client’s IP address into a number

It then finds the server using the number

This approach avoids the need for external storage for sticky sessions.

Yet there’s a risk of server overload if client IP addresses aren’t evenly distributed. Also, many clients might share the same IP address, thus making it less effective.
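The two steps can be sketched in Python like this. The example assumes SHA-256 as the hash function and a simple modulo to map the number to a server. Real load balancers may use a different hash or mapping, and the function and server names are made up.

```python
import hashlib


def pick_server(client_ip, servers):
    """Maps a client IP address to one server from the list."""
    # Step 1: convert the IP address into a number with a hash function.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    number = int.from_bytes(digest[:8], "big")
    # Step 2: use the number to find a server (assumed: simple modulo).
    return servers[number % len(servers)]


# Hypothetical chat servers and a documentation-range IP address.
servers = ["chat-1", "chat-2", "chat-3"]
first = pick_server("203.0.113.7", servers)
again = pick_server("203.0.113.7", servers)
print(first == again)  # the same client always lands on the same server
```

With a plain modulo, adding or removing a server changes the mapping for most clients. Consistent hashing is a common way to reduce that reshuffling.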

There are 2 ways to set up a load balancer: a hardware load balancer or a software load balancer.

A hardware load balancer runs on dedicated physical hardware. Although it offers high performance, it’s expensive.

So they set up a software load balancer. It runs on general-purpose hardware. Besides, it’s easy to scale and cost-effective.

And everyone lived happily ever after.

Funny thing about load balancing: there’s no one “best” algorithm.

What works great for one service might totally mess up another.

Sometimes simple round robin just wins because it’s dead easy. Other times, you need the fancy stuff like adaptive or IP hash.

Depends on the traffic, the bottlenecks, and how much pain you can handle when things go sideways.
