How Figma Scaled to 4M Users—Without Fancy Databases 🔥

#69: Break Into Postgres Scalability (4 Minutes)

This post outlines how Figma scaled the Postgres database. You will find references at the bottom of this page if you want to go deeper.

Note: This post is based on my research and may differ from real-world implementation.

Once upon a time, two university students decided to build a meme generator.

Yet their business idea failed.

So they pivoted to create a design tool for the browser and called it Figma.

They stored metadata such as file information and user comments in a single Postgres database for simplicity.

Yet their traffic was massive.

And it affected latency and performance because of high CPU usage.

So they scaled vertically by moving Postgres to a larger machine with more CPU.

Although it temporarily solved their scalability issue, there were new problems.

Here are some of them:

1. Storage Capacity

Some tables grew extremely fast.

But the smallest unit they could move to another database was a whole table.

So a single database couldn’t hold the fastest-growing tables.

2. Performance

They received a ton of write operations.

The write volume exceeded the input/output operations per second (IOPS) limit of a single database.

So the performance became poor.

Onward.

Postgres Scale

Here’s how they scaled Postgres step by step:

1. Database Replication

They set up database replicas and routed read traffic to them.

It let them:

Handle more read traffic

Reduce latency by keeping data closer to users around the world

Increase fault tolerance as replicas could take over when the leader database fails

Yet replicas couldn’t handle some read operations because of replication lag and strong consistency needs. So they routed those operations to the leader database.

Besides, they added a caching layer to store frequently accessed data, thus reducing the database load.
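Here is a minimal sketch of that read/write routing rule. The class name, host names, and the `needs_fresh_data` flag are hypothetical and only illustrate the idea; they are not Figma's actual setup.

```python
import itertools


class ReplicaRouter:
    """Picks a database for each query (all names here are hypothetical)."""

    def __init__(self, leader, replicas):
        self.leader = leader
        self.replicas = list(replicas)
        # Round-robin over replicas so read load is spread evenly.
        self._next_replica = itertools.cycle(self.replicas)

    def pick(self, is_write, needs_fresh_data=False):
        # Writes always go to the leader. Reads that cannot tolerate
        # replication lag go to the leader too.
        if is_write or needs_fresh_data or not self.replicas:
            return self.leader
        return next(self._next_replica)


router = ReplicaRouter("pg-leader", ["pg-replica-1", "pg-replica-2"])
print(router.pick(is_write=True))                          # leader
print(router.pick(is_write=False))                         # a replica
print(router.pick(is_write=False, needs_fresh_data=True))  # leader
```

The caller decides which reads need fresh data. A read that immediately follows the same user's write is a typical candidate for the leader.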

Let’s keep going.

2. Database Federation

They moved tables into separate databases based on the domain.

It let them:

Scale horizontally by adding more databases

Spread workload across databases and reduce bottlenecks

For example, they stored file information and user comments in separate databases because file information grows faster than comments and each table has a different workload.
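Federation boils down to a lookup from table to database. The sketch below assumes made-up table and database names; the real mapping is not described in this post.

```python
# Hypothetical mapping from table name to the database that owns its domain.
TABLE_TO_DATABASE = {
    "files": "files-db",
    "file_versions": "files-db",
    "comments": "comments-db",
}


def database_for(table):
    """Return the database holding `table`, or raise if it is unmapped."""
    try:
        return TABLE_TO_DATABASE[table]
    except KeyError:
        raise LookupError(f"no database configured for table {table!r}") from None


print(database_for("files"))     # files-db
print(database_for("comments"))  # comments-db
```

Tables that get joined often should stay in the same database, since a join across databases has to happen in the application.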

Ready for the next technique?

3. Connection Pooling

They set up PgBouncer as the connection pooler.

It acts as a TCP proxy holding a pool of connections to Postgres.

And the client connects directly to PgBouncer instead of Postgres.

It let them:

Allow clients to reuse the same connection and improve throughput

Limit the number of database connections to avoid connection starvation

Keep persistent connections with the client to avoid expensive reconnection requests

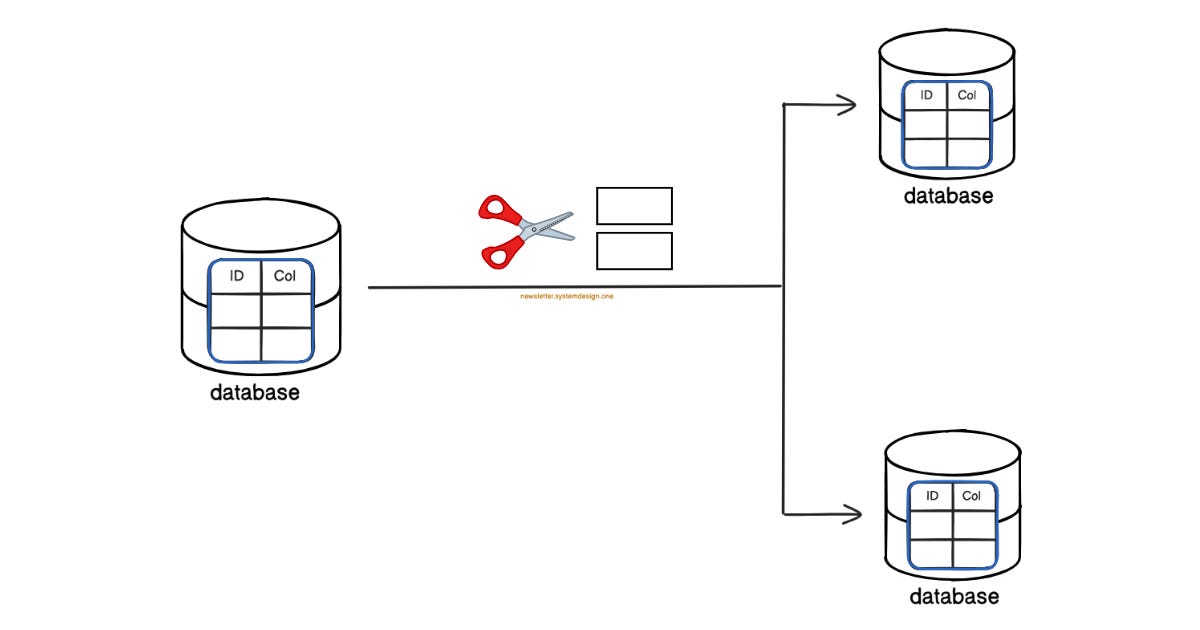
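PgBouncer is a separate process, so the sketch below is not how it is implemented. It only shows the core idea in a few lines: a fixed number of connections get opened once and reused by many callers. `ConnectionPool` and `fake_connect` are hypothetical names, and the fake factory stands in for a real database driver.

```python
import queue
from contextlib import contextmanager


class ConnectionPool:
    """A fixed-size pool; `connect` is any zero-argument connection factory."""

    def __init__(self, connect, max_size):
        self._idle = queue.Queue(maxsize=max_size)
        # Open every connection once, up front.
        for _ in range(max_size):
            self._idle.put(connect())

    @contextmanager
    def connection(self, timeout=1.0):
        # Wait for an idle connection; raises queue.Empty on timeout,
        # which caps how many connections are ever in use at once.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            # Hand the connection back instead of closing it.
            self._idle.put(conn)


# Stand-in factory so the sketch runs without a database.
opened = []


def fake_connect():
    conn = object()
    opened.append(conn)
    return conn


pool = ConnectionPool(fake_connect, max_size=2)
for _ in range(10):
    with pool.connection() as conn:
        pass  # run a query with `conn` here

print(len(opened))  # ten requests served by two connections
```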

4. Vertical Partitioning

They moved columns from high-traffic tables into separate tables.

And then stored those tables in different databases.

Yet each table still lives in exactly one database. Put simply, a single table doesn’t spread across many databases.

It let them:

Isolate sensitive data for security

Reduce workload by routing traffic across many databases

Achieve high query performance by scanning only a subset of data

Yet vertical partitioning becomes a bottleneck with high write traffic, because a single database can’t store a table with billions of rows.
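Here is a small sketch of a column-level split, assuming a made-up `files` row. The column names and the hot/cold grouping are hypothetical.

```python
# Hypothetical choice of frequently accessed columns for a "files" table.
HOT_COLUMNS = {"id", "name", "updated_at"}


def split_row(row):
    """Split one wide row into a hot part and a cold part sharing `id`."""
    hot = {k: v for k, v in row.items() if k in HOT_COLUMNS}
    cold = {k: v for k, v in row.items() if k not in HOT_COLUMNS}
    cold["id"] = row["id"]  # keep the key so the halves can be re-joined
    return hot, cold


row = {
    "id": 1,
    "name": "landing-page",
    "updated_at": "2024-01-01",
    "description": "long text that is rarely read",
}
hot, cold = split_row(row)
print(hot)   # small row for the high-traffic table
print(cold)  # the rest, stored in another table or database
```

Both halves keep the primary key. That is what lets the application put a full row back together when it needs one.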

Ready for the best part?

5. Horizontal Partitioning

They split tables at the row level and stored them across many databases for maximum scalability.

Also, they set up a proxy server to route queries to the correct database.

It let them:

Rewrite expensive queries for performance

Manage database topology and support transactions

Drop extra requests when they exceed a threshold

They used the hash of the partition key to route write requests, thus distributing data uniformly across databases.

Besides, they co-located tables partitioned by the same key to make transactions and joins easier.
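Hash-based routing can be sketched like this. The shard names, the key format, and the choice of SHA-256 are assumptions for illustration; the post doesn't say which hash function or shard layout Figma used.

```python
import hashlib

SHARDS = ["shard-0", "shard-1", "shard-2", "shard-3"]  # hypothetical names


def shard_for(partition_key, shards=SHARDS):
    """Map a partition key to one shard using a stable hash."""
    # Python's built-in hash() is salted per process for strings,
    # so use a hash that gives the same answer on every server.
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


# Routing depends only on the key, not on the table. So rows from
# different tables that share a key land on the same shard.
file_key = "file-123"
print(shard_for(file_key))  # shard for the row in a "files" table
print(shard_for(file_key))  # same shard for that file's comments
```

One caveat with plain modulo hashing: changing the number of shards remaps most keys. So it works best when the shard count is fixed in advance.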

Figma became a popular design tool with over 4 million users.

This case study shows Postgres can handle internet-scale traffic with replication, connection pooling, and partitioning.
