How LinkedIn Adopted Protocol Buffers to Reduce Latency by 60%

#12: You Need to Read This - Awful JSON Serialization (5 minutes)


This post outlines how LinkedIn reduced latency using Protocol Buffers. If you want to learn more, scroll to the bottom and find the references.


LinkedIn uses a microservices architecture because they receive billions of requests every day and needed to scale.

But the microservices architecture increased the number of network calls and degraded latency.

They used JavaScript Object Notation (JSON) as the data serialization format but it became a performance bottleneck. So they moved to Protocol Buffers.

Before I get into how LinkedIn adopted Protocol Buffers, I think it’s important to understand data serialization and Protocol Buffers. So I’ll teach you the basics first.

What Is Data Serialization?

Imagine a get-together of people who speak different languages. They have no choice but to speak in a language that everybody understands.

So each person must translate thoughts from their native language to the common language. But it reduces communication efficiency. This is how data serialization works.

Now in computing terms: translating an in-memory data structure to a format that can be stored or sent across the network is called data serialization. Translating it back is called deserialization.
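
Here is a minimal sketch of that round trip in Python, using JSON from the standard library. The `profile` object and its fields are made up for illustration.

```python
import json

# A hypothetical in-memory data structure (field names are made up).
profile = {"id": 42, "name": "Ada", "skills": ["python", "go"]}

# Serialize: in-memory object -> bytes that can be stored or sent.
payload = json.dumps(profile).encode("utf-8")

# Deserialize: bytes -> in-memory object on the receiving side.
restored = json.loads(payload.decode("utf-8"))

print(type(payload).__name__, len(payload), "bytes")
print(restored == profile)
```

The sender and the receiver only share the bytes in `payload`. Everything else in this post is about how those bytes are laid out.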

What Is Protocol Buffers?

Protocol Buffers (Protobuf) is a data serialization format and a set of tools to exchange data.

Protobuf keeps the data and the metadata separate. And serializes data into a binary format.

Besides, Protobuf messages can be sent over network protocols such as REST or RPC. And Protobuf supports many programming languages, including Python, Java, Go, and C++.
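
To see what "binary format" and "separate metadata" mean in practice, here is a hand-rolled sketch of the Protobuf wire format for two fields. It is not the official library, only an illustration with stdlib Python. The field numbers (`id = 1`, `name = 2`) and values are assumptions made up for this example.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a varint: 7 bits per byte, low bits first."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_int_field(field_number: int, value: int) -> bytes:
    # Tag = field number plus wire type 0 (varint).
    return encode_varint((field_number << 3) | 0) + encode_varint(value)


def encode_string_field(field_number: int, text: str) -> bytes:
    # Tag = field number plus wire type 2 (length-delimited), then the length.
    data = text.encode("utf-8")
    return encode_varint((field_number << 3) | 2) + encode_varint(len(data)) + data


# Assumed schema, kept outside the payload: id = 1, name = 2.
binary = encode_int_field(1, 150) + encode_string_field(2, "Ada")
text = json.dumps({"id": 150, "name": "Ada"}).encode("utf-8")

print(binary.hex(), len(binary), "bytes")
print(text.decode("utf-8"), len(text), "bytes")
```

The field names never appear in `binary`. Only the field numbers do, and the names live in the schema. That is why this payload takes 8 bytes while the JSON text takes 26.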

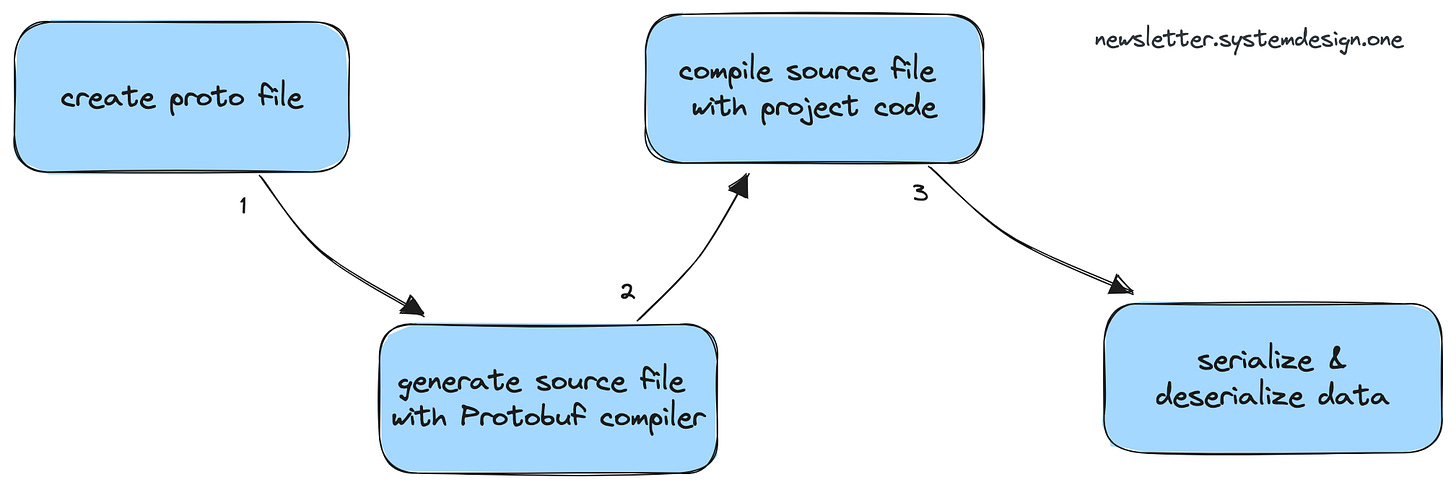

Here is the Protobuf workflow:

Create a proto file

And define payload schema: data fields and types.

Compile proto file to language-specific source files

Compile the proto file using the Protobuf compiler (protoc). And create language-specific source files. The client uses the generated code to serialize data. And the server uses it to deserialize data.

Create an executable package

Compile the generated Protobuf source file with the project code.

Serialize or deserialize data

Serialize or deserialize data at runtime using the generated code.
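
The first two steps of the workflow could look roughly like this. It is a sketch, not LinkedIn's setup: the `Profile` message, its fields, and the file name `profile.proto` are made up. The `protoc` flags `--proto_path` and `--python_out` are real, and the compiler only runs if it is installed on your machine.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path

# Step 1: the payload schema. Message and field names are hypothetical.
PROTO_SCHEMA = """\
syntax = "proto3";

message Profile {
  int64 id = 1;
  string name = 2;
}
"""


def write_schema(directory: Path) -> Path:
    """Create the proto file on disk and return its path."""
    proto_file = directory / "profile.proto"
    proto_file.write_text(PROTO_SCHEMA)
    return proto_file


def protoc_command(proto_file: Path, out_dir: Path) -> list[str]:
    """Step 2: build the compiler call that generates Python source."""
    return [
        "protoc",
        f"--proto_path={proto_file.parent}",
        f"--python_out={out_dir}",
        str(proto_file),
    ]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        proto_file = write_schema(Path(tmp))
        command = protoc_command(proto_file, Path(tmp))
        print(" ".join(command))
        # Only run the compiler when it is installed on this machine.
        if shutil.which("protoc"):
            subprocess.run(command, check=True)
            print(sorted(p.name for p in Path(tmp).iterdir()))
```

For Python, protoc writes a module named `profile_pb2.py`. The project imports that module in steps 3 and 4.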

Why Use Protocol Buffers?

JSON serialization became a performance bottleneck at LinkedIn. Because the textual format needed extra network bandwidth. And more computing resources to compress data. This resulted in poor latency and throughput.

Also skipping unwanted data fields is not possible while parsing JSON. Because there is no separation between data and metadata.

But metadata in Protobuf allows parsing specific data fields. And makes it a lot more efficient for a big payload.
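
Here is a sketch of why skipping is cheap in a Protobuf-style payload. Each field starts with a tag (field number plus wire type), and strings carry a length prefix, so a reader can jump over fields it does not need. This is a hand-written reader that handles only two wire types, not the official library. The field numbers and values are made up.

```python
from typing import Optional, Union


def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a varint starting at pos. Return (value, next position)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def read_field(buf: bytes, wanted: int) -> Optional[Union[int, bytes]]:
    """Return the raw value of field `wanted`, skipping every other field."""
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x07
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
            if field_number == wanted:
                return value
        elif wire_type == 2:  # length-delimited (string, bytes)
            length, pos = read_varint(buf, pos)
            if field_number == wanted:
                return buf[pos:pos + length]
            pos += length  # jump over the bytes without reading them
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
    return None


# Hypothetical payload: id = 1, name = 2, a 1000-byte bio = 3, age = 4.
payload = (
    bytes.fromhex("0896011203416461")      # id = 150, name = "Ada"
    + b"\x1a\xe8\x07" + b"x" * 1000        # bio: tag, length 1000, bytes
    + b"\x20\x07"                          # age = 7
)

print(read_field(payload, 4))  # reads age, jumps over the 1000-byte bio
print(read_field(payload, 2))
```

To reach field 4, the reader looks at the length prefix of field 3 and moves the position forward by 1000 bytes in one step.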

Their criteria to find a JSON data serialization alternative were:

Smaller payload size. Because it reduces bandwidth needs

Improved efficiency. Because it reduces latency

Support for many programming languages. Because their tech stack was diverse

Easy to plug into the existing setup. Because they wanted to reduce the engineering effort

Protobuf satisfied all the criteria. So they moved to Protobuf.

Protobuf reduced their P99 latency by up to 60% for big payloads. And improved average throughput by 6.25% for response payloads.

The 99th percentile of latency is called P99 latency. Put another way, 99% of requests will be faster than the given latency number. Or only 1% of requests will be slower than the P99 latency.
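
Here is a small sketch of how P99 can be computed from raw measurements. It uses the nearest-rank method, which is one of several common definitions. The latency numbers are made up.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample that at least pct% of samples do not exceed."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# 100 hypothetical request latencies in milliseconds.
latencies_ms = [20] * 98 + [35, 900]

print("P50:", percentile(latencies_ms, 50))  # 20
print("P99:", percentile(latencies_ms, 99))  # 35
print("max:", max(latencies_ms))             # 900
```

P99 is 35 ms here even though one request took 900 ms. That single request is the slowest 1%.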

Here is a summary of the Protobuf study by Auth0.com:

When services running JavaScript and Java communicated with each other, there was no big performance improvement with Protobuf. The latency difference was only 4% compared to compressed JSON.

But when a Java service communicated with another non-JavaScript service (Python or Java), Protobuf offered 6 times better latency compared to JSON.

In simple words, there is a big performance improvement for Protobuf with non-JavaScript environments.

Protobuf Rollout at LinkedIn

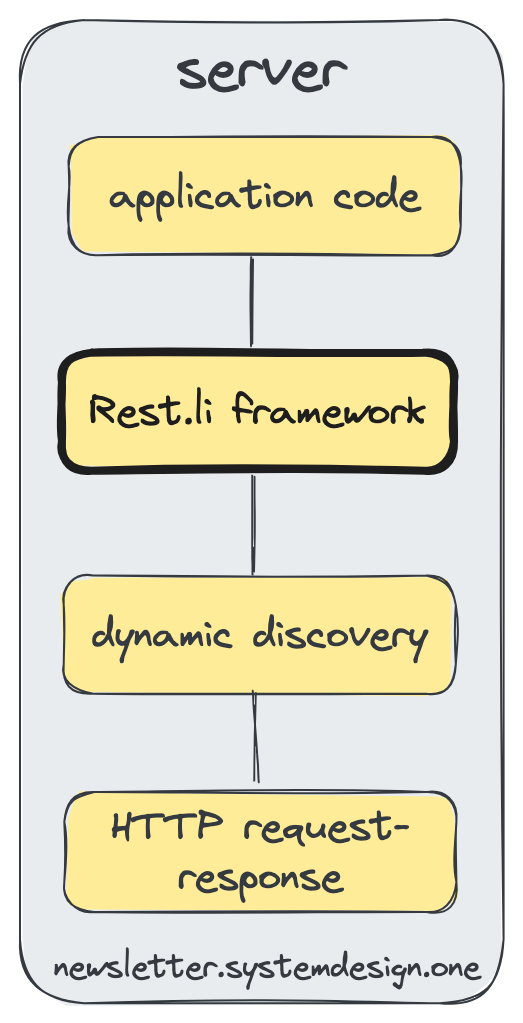

They used the Rest.li framework for calls between microservices. Because it abstracted many parts of data exchange, such as serialization and service discovery.

And this is how they rolled out Protobuf:

Add Protobuf support to the Rest.li framework

Increment the Rest.li framework version. And redeploy every microservice

Release slowly using client configuration to reduce service disruption
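
A slow release driven by client configuration could be sketched like this. The source only says the release was controlled through client configuration. The percentage ramp, the hashing, the function name, and the content-type strings below are my assumptions, not Rest.li's actual mechanism.

```python
import zlib

JSON = "application/json"
PROTOBUF = "application/x-protobuf"  # illustrative content type


def pick_content_type(client_id: str, ramp_percent: int) -> str:
    """Decide the wire format for one client from a ramp setting (0 to 100).

    A stable hash puts every client in a fixed bucket, so a client that
    switched to Protobuf at 10% stays on Protobuf at 50%.
    """
    bucket = zlib.crc32(client_id.encode("utf-8")) % 100
    return PROTOBUF if bucket < ramp_percent else JSON


# Hypothetical client services.
clients = [f"service-{i}" for i in range(1000)]

for ramp in (0, 10, 50, 100):
    on_protobuf = sum(pick_content_type(c, ramp) == PROTOBUF for c in clients)
    print(f"ramp {ramp:>3}%: {on_protobuf} of {len(clients)} clients use Protobuf")
```

This works only because every server already understands both formats after the redeploy. So setting the ramp back to 0 is a safe rollback.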

Protocol Buffers vs JSON

I will outline the top 3 benefits and limitations of Protobuf and JSON. Because it might help you make better architectural decisions for your project.

Protobuf benefits:

Support for schema validation

Improved performance with big payloads. Because it uses the binary format

Support for backward compatibility

Protobuf limitations:

Hard to debug. And not human-readable

Extra effort needed to update the proto file

Limited language support compared to JSON

JSON benefits:

Easy to use and human-readable

Easy to change. Because it provides a flexible schema

Support for many programming languages

JSON limitations:

No built-in support for schema validation

Poor performance for big payloads

Backward compatibility problems

Takeaways

Use Protobuf when:

Payload is big

Communication between non-JavaScript environments is needed

Frequent changes to the payload schema are expected

Use JSON when:

Simplicity is needed

High performance is not needed

Communication between JavaScript and Node.js or other environments is needed

Protobuf gave big performance improvements for LinkedIn. But it is important to check if Protobuf is best for your use case to prevent over-engineering.


References

https://engineering.linkedin.com/blog/2023/linkedin-integrates-protocol-buffers-with-rest-li-for-improved-m

https://linkedin.github.io/rest.li/user_guide/server_architecture

https://auth0.com/blog/beating-json-performance-with-protobuf/

https://programmathically.com/protobuf-vs-json-which-data-serialization-format-is-best-for-your-engineering-project/

https://protobuf.dev/
