How Google Search Works 🔥

#57: Break Into Google Search Engine Architecture (6 Minutes)


How can you quickly find the answer to a question on the internet?

Simply enter the question into a web search engine - Google.


This post outlines Google search architecture. You will find references at the bottom of this page if you want to go deeper.

Note: This post is based on my research and may differ from real-world implementation.

It began as a research project by 2 Stanford PhD students, Larry Page and Sergey Brin.

They wanted to build a web search engine - and got outstanding results.

Yet they didn’t have the management skills to start a business + wanted to focus on their studies.

So they decided to sell their project for 1 million USD and approached Yahoo, but Yahoo rejected the offer.

But it wasn’t the end, as they believed in the potential of their project.

And the best part?

They managed to get venture capital funding & named their tiny project Google.

Their growth rate was mind-boggling.

Although explosive growth is a good problem to have, it brought new problems.

A good search engine must be: (1) effective - search results must be relevant. (2) efficient - response time should be low.


Search Engine Architecture

They used simple ideas to build Google.

Here’s how:

1. Crawling 🕷️

There are trillions of URLs on the internet - making it difficult to crawl every page.

Yet it's important to include all relevant web pages in search results. So they use a distributed web crawler, which provides performance and scalability.

Imagine the web crawler as software that automatically downloads text + media from websites.

They run web crawlers in data centers across the world. This keeps each crawler closer to the websites it crawls, reducing latency and long-distance bandwidth usage.

Yet there’s no central database containing all web pages on the internet.

And it’s difficult to find new and updated web pages. So they: (1) follow links from known pages. (2) use sitemaps to find pages that no other page links to.

Think of a sitemap as an XML file that lists the pages of a website, with optional details such as when each page was last modified.
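To make the sitemap idea concrete, here's a minimal Python sketch that pulls page URLs out of a sitemap using only the standard library. The sample XML and the `sitemap_urls` helper are hypothetical; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol (version 0.9).
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap for an example site.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2024-01-01</lastmod></url>
  <url><loc>https://example.com/orphan-page</loc></url>
</urlset>"""


def sitemap_urls(xml_text):
    """Return (url, lastmod) pairs from a sitemap; lastmod is None when absent."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS).strip()
        lastmod = url.findtext("sm:lastmod", namespaces=NS)
        entries.append((loc, lastmod))
    return entries


for loc, lastmod in sitemap_urls(SITEMAP_XML):
    print(loc, lastmod)
```

A crawler could push each URL onto its crawl queue, even `orphan-page`, which no other page links to.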

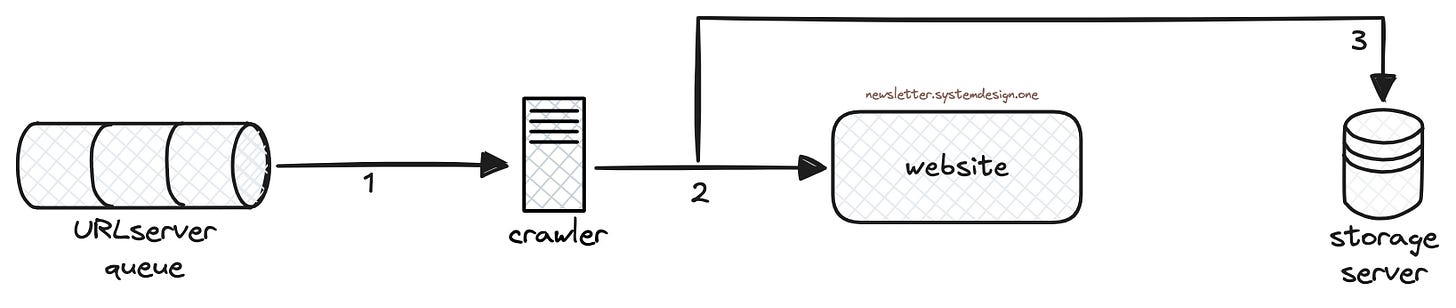

Here’s how the Google crawler works:

It keeps URLs to crawl in a queue. (In the original design, a server called the URLserver hands these URLs out to the crawlers.)

It checks the robots.txt file to understand if crawling is allowed on a specific URL.

It fetches the URL from the queue and makes an HTTP request (if allowed).

It keeps a local DNS cache for performance.

It renders the page, like a web browser does, to find new URLs on it.

It saves crawled pages in compressed form on a distributed storage server.

It doesn’t crawl pages behind a login. (Put simply, only public URLs are crawled.)

It checks the HTTP response code to avoid overloading a website. (For example, HTTP 500, 503, or 429 responses tell it to slow down.)

It determines when & how often to crawl a website using an internal algorithm.
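The crawl loop above can be sketched in Python under a few assumptions: a hypothetical in-memory `FAKE_WEB` stands in for real HTTP requests, robots.txt rules are checked with the standard library's `urllib.robotparser`, and treating 429/500/503 as "back off" signals mirrors the list above. Rendering, DNS caching, and recrawl scheduling are left out.

```python
from collections import deque
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical in-memory "web": URL -> (HTTP status, links found on the page).
FAKE_WEB = {
    "https://example.com/": (200, ["https://example.com/a", "https://example.com/private/b"]),
    "https://example.com/a": (200, ["https://example.com/", "https://example.com/busy"]),
    "https://example.com/busy": (503, []),
    "https://example.com/private/b": (200, []),
}
# Hypothetical robots.txt contents per host.
ROBOTS_TXT = {"example.com": ["User-agent: *", "Disallow: /private"]}
SLOW_DOWN_STATUSES = {429, 500, 503}  # responses treated as "back off"


def fetch(url):
    """Stand-in for an HTTP GET; a real crawler would make a network request here."""
    return FAKE_WEB.get(url, (404, []))


def crawl(seeds, user_agent="ExampleBot"):
    frontier = deque(seeds)   # queue of URLs waiting to be crawled
    seen = set(seeds)         # never enqueue the same URL twice
    robots = {}               # host -> parsed robots.txt, cached per host
    crawled, backed_off = [], []
    while frontier:
        url = frontier.popleft()
        host = urlparse(url).hostname
        if host not in robots:
            parser = RobotFileParser()
            parser.parse(ROBOTS_TXT.get(host, []))
            robots[host] = parser
        if not robots[host].can_fetch(user_agent, url):
            continue          # robots.txt disallows this URL
        status, links = fetch(url)
        if status in SLOW_DOWN_STATUSES:
            backed_off.append(url)  # a real crawler would retry later at a lower rate
            continue
        if status != 200:
            continue
        crawled.append(url)
        for link in links:    # newly discovered URLs join the back of the queue
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return crawled, backed_off


print(crawl(["https://example.com/"]))
```

The `/private/b` page is skipped because of robots.txt, and `/busy` lands in the back-off list instead of being crawled.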

They render web pages because many websites use JavaScript to show their full content.

And the rendered page gets parsed for new URLs, which are then added to the queue for crawling.
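Here's a minimal sketch of the "parse the rendered page for new URLs" step, assuming the HTML has already been rendered (running JavaScript in a headless browser isn't shown). The `LinkExtractor` class and `extract_links` helper are hypothetical names built on Python's standard `html.parser`.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute http(s) URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and drop #fragments so one page maps to one URL.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                if absolute.startswith(("http://", "https://")):
                    self.links.append(absolute)


def extract_links(base_url, rendered_html):
    parser = LinkExtractor(base_url)
    parser.feed(rendered_html)
    parser.close()
    return parser.links


html = '<a href="/about#team">About</a> <a href="mailto:hi@example.com">Mail</a>'
print(extract_links("https://example.com/blog/", html))
```

Non-web links such as `mailto:` are dropped, since a crawler can't fetch them.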

Ready for the next technique?

2. Indexing 📖

The search results must be relevant.

Yet it's difficult to return the correct web page without understanding its content.

So they process crawled pages and store the found information in a database called the index.

Besides, they keep 2 types of index: (1) forward index - lists the words on a specific page and their positions. (2) inverted index - maps a word to the list of documents containing it.

They sort the forward index to create the inverted index.
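Here's a toy Python sketch of both indexes, assuming pages are plain text split on whitespace. It builds the forward index (document to words and positions) and then sorts its entries by word to produce the inverted index. `DOCS` and the helper names are hypothetical.

```python
from collections import defaultdict

# Hypothetical crawled pages, keyed by document ID.
DOCS = {
    1: "google search engine",
    2: "search the web",
    3: "web crawler for search",
}


def forward_index(docs):
    """doc_id -> list of (word, position) pairs, in page order."""
    return {doc_id: [(word, pos) for pos, word in enumerate(text.split())]
            for doc_id, text in docs.items()}


def invert(fwd):
    """Build word -> [(doc_id, position), ...] by sorting forward-index entries by word."""
    triples = sorted((word, doc_id, pos)
                     for doc_id, hits in fwd.items()
                     for word, pos in hits)
    inverted = defaultdict(list)
    for word, doc_id, pos in triples:
        inverted[word].append((doc_id, pos))
    return dict(inverted)


print(invert(forward_index(DOCS))["search"])
```

Because the sort key is (word, doc ID, position), each word's list comes out ordered by doc ID.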

Yet they receive many single-word queries. (For example, “YouTube”.)

And a single inverted index can't serve both query types well: sorting each word's documents by rank makes single-word queries trivial, but then merging document lists for multi-word queries is difficult.

So they keep 2 inverted indexes for performance, which differ in how each word's document list is sorted.

The inverted index sorted by word rank gets queried first - it answers most queries.

While the inverted index sorted by document ID gets queried for multi-word queries.
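A small Python sketch shows why the two orderings help. A rank-sorted list answers a single-word query by reading its head, while doc-ID-sorted lists can be intersected in one linear pass. The postings and rank scores are made up, and the multi-word result comes back in doc-ID order, so it would still need ranking (not shown).

```python
# Hypothetical postings: word -> [(doc_id, rank score of the word in that doc)].
POSTINGS = {
    "youtube": [(7, 0.2), (3, 0.9), (5, 0.6)],
    "music": [(2, 0.4), (3, 0.7), (5, 0.1), (9, 0.8)],
    "videos": [(3, 0.3), (5, 0.5), (8, 0.9)],
}

# Index 1: each list sorted by rank (best first), good for single-word queries.
BY_RANK = {w: [d for d, _ in sorted(p, key=lambda x: -x[1])] for w, p in POSTINGS.items()}
# Index 2: each list sorted by doc ID, good for merging lists in multi-word queries.
BY_DOC_ID = {w: sorted(d for d, _ in p) for w, p in POSTINGS.items()}


def intersect(a, b):
    """Linear-time merge of two doc-ID-sorted lists, keeping common doc IDs."""
    i = j = 0
    out = []
    while i < len(a) and j < len(b):
        if a[i] == b[j]:
            out.append(a[i])
            i += 1
            j += 1
        elif a[i] < b[j]:
            i += 1
        else:
            j += 1
    return out


def search(query):
    words = query.split()
    if len(words) == 1:
        return BY_RANK.get(words[0], [])  # already in rank order: just read it
    result = BY_DOC_ID.get(words[0], [])
    for w in words[1:]:
        result = intersect(result, BY_DOC_ID.get(w, []))
    return result


print(search("youtube"), search("music videos"))
```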

Here’s how the Google index works:

Each web page (document) is assigned a unique document ID.

The words found in documents get stored in the Lexicon. It’s an in-memory data structure that maps each word to a word ID, and it’s also used to correct spelling mistakes in search queries.

The storage location of each document gets recorded in the document server. It’s used to create page titles and snippets shown in search results.

The links found in the parsed document are stored in a separate database. It’s used to rank documents (for example, by computing PageRank).

The document server and index server are partitioned by document ID. (Also replicated for capacity.)
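The links database mentioned above feeds link-based ranking. Here's a textbook Python sketch of PageRank via power iteration, using the normalized variant (ranks sum to 1) and the damping factor of 0.85 suggested in the original Google paper. The `LINKS` graph is hypothetical, and this is not Google's production ranking.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank for a dict: page -> list of pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets a base share from the "random jump" term.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its rank evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical link graph: page "c" receives links from every other page.
LINKS = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
print(pagerank(LINKS))
```

Page "d" has no incoming links, so it only keeps the base share.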

Onward.

3. Searching 🔍

They receive 100+ million queries a day with spelling mistakes.

Yet it’s important to guess the query correctly to show relevant results.

So they clean the query via text transformation.

And use language models to find word synonyms + correct spelling mistakes.
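Here's a rough Python sketch of query cleaning. It normalizes text, expands shorthand via a hypothetical `SYNONYMS` table, and fixes typos against a hypothetical Lexicon. It swaps in `difflib`'s string-similarity matching for the language models mentioned above, so treat it as a stand-in that shows where correction happens, not how Google does it.

```python
import difflib
import re
import unicodedata

# Hypothetical lexicon (word -> word ID) and synonym table.
LEXICON = {"new": 0, "york": 1, "pictures": 2, "search": 3, "engine": 4}
SYNONYMS = {"pics": "pictures", "nyc": "new york"}


def normalize(raw):
    """Text transformation: Unicode normalization, lowercasing, punctuation removal."""
    text = unicodedata.normalize("NFKC", raw).lower()
    return re.sub(r"[^\w\s]", " ", text).split()


def correct(word, cutoff=0.8):
    """Replace an unknown word with its closest lexicon entry, if one is close enough."""
    if word in LEXICON:
        return word
    matches = difflib.get_close_matches(word, list(LEXICON), n=1, cutoff=cutoff)
    return matches[0] if matches else word


def clean_query(raw):
    words = []
    for word in normalize(raw):
        words.extend(SYNONYMS.get(word, word).split())  # expand shorthand first
    return [correct(w) for w in words]


print(clean_query("NYC pics!!"), clean_query("serch engine"))
```

Replacing shorthand outright is a simplification; a real engine would more likely search for the synonym alongside the original word.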

Here’s the Google search workflow:

The Lexicon converts the words in the search query into word IDs.

The cleaned query gets forwarded to the index.

The inverted index returns a sorted list of documents matching the query.

Only the top k results are returned, which keeps response time low.
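The workflow above can be sketched end to end in Python. The `LEXICON` and `INVERTED` structures are hypothetical, and summing per-word scores is a simplifying assumption rather than Google's ranking function.

```python
import heapq

# Hypothetical stand-ins for the Lexicon and the inverted index.
LEXICON = {"system": 0, "design": 1, "google": 2}
# word_id -> {doc_id: score of that word in that document}
INVERTED = {
    0: {10: 0.4, 11: 0.9, 12: 0.2},
    1: {10: 0.5, 11: 0.6, 13: 0.9},
    2: {12: 0.7},
}


def search(query, k=2):
    # Step 1: map query words to word IDs via the Lexicon.
    word_ids = [LEXICON[w] for w in query.lower().split() if w in LEXICON]
    if not word_ids:
        return []
    # Steps 2-3: look up each word's documents and keep those matching every word.
    postings = [INVERTED.get(wid, {}) for wid in word_ids]
    matching = set(postings[0]).intersection(*postings[1:])
    scores = {doc: sum(p[doc] for p in postings) for doc in matching}
    # Step 4: return only the top k documents by score.
    return heapq.nlargest(k, scores, key=scores.get)


print(search("system design"))
```

`heapq.nlargest` avoids fully sorting every matching document when only k are needed.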

They cache index results and document snippets for performance.
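The post doesn't say which eviction policy they use, so here's a sketch assuming a least-recently-used (LRU) cache in front of the index. `LRUCache`, `cached_search`, and the capacity are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-size cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()

    def get(self, key):
        if key not in self.data:
            return None
        self.data.move_to_end(key)         # mark as most recently used
        return self.data[key]

    def put(self, key, value):
        self.data[key] = value
        self.data.move_to_end(key)
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict the least recently used entry


def cached_search(cache, query, backend):
    """Serve repeated queries from the cache; query the index only on a miss."""
    result = cache.get(query)
    if result is None:
        result = backend(query)
        cache.put(query, result)
    return result
```

Popular queries stay cached, so repeats skip the index lookup entirely.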

Besides, a copy of the index is kept in each data center. And search queries get routed to the closest data center for low latency.
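Here's a toy Python sketch of the serving path. It picks the lowest-latency data center, then fans out across that data center's index shards (partitioned by document ID, as described earlier) and merges each shard's local top k. The latencies, scores, shard count, and "healthy" check are all hypothetical. In practice, routing is usually done at the DNS or network level rather than in application code.

```python
import heapq

# Hypothetical round-trip latencies (ms) from one user to each data center.
LATENCY_MS = {"us-east": 85, "europe-west": 12, "asia-south": 140}
NUM_SHARDS = 3

# Hypothetical scores for one query: doc_id -> relevance. Each data center holds
# the same index copy, split into shards by doc ID (doc_id % NUM_SHARDS).
SCORES = {1: 0.5, 2: 0.9, 3: 0.1, 4: 0.7, 5: 0.3, 6: 0.8}
SHARDS = [{d: s for d, s in SCORES.items() if d % NUM_SHARDS == i} for i in range(NUM_SHARDS)]


def route(latencies, healthy):
    """Pick the lowest-latency data center that is currently healthy."""
    candidates = {dc: ms for dc, ms in latencies.items() if dc in healthy}
    if not candidates:
        raise RuntimeError("no healthy data center available")
    return min(candidates, key=candidates.get)


def top_k_across_shards(shards, k):
    """Ask every shard for its local top k, then merge into a global top k."""
    partial = []
    for shard in shards:
        partial.extend(heapq.nlargest(k, shard.items(), key=lambda item: item[1]))
    best = heapq.nlargest(k, partial, key=lambda item: item[1])
    return [doc_id for doc_id, _ in best]


print(route(LATENCY_MS, set(LATENCY_MS)), top_k_across_shards(SHARDS, 3))
```

Merging local top-k lists is enough because any document in the global top k must also be in its own shard's top k.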

Also, it’s important to detect duplicate content and show only canonical pages in the search results.

So they: (1) group similar pages & show the pages based on the user’s language, location, and so on. (2) use HTML annotations to find the canonical page.
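Here's a Python sketch of both ideas under stated assumptions. Near-duplicates are detected with word shingles and Jaccard similarity, a common textbook technique swapped in here because the post doesn't name Google's method. The canonical page is read from a `<link rel="canonical">` HTML annotation. The helper names are hypothetical.

```python
from html.parser import HTMLParser


def shingles(text, k=3):
    """Set of k-word shingles (overlapping word sequences) in a page's text."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a, b):
    """Share of shingles two pages have in common (1.0 means identical sets)."""
    return len(a & b) / len(a | b) if a | b else 1.0


class CanonicalFinder(HTMLParser):
    """Reads the URL from the first <link rel="canonical" href="..."> annotation."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and attrs.get("rel") == "canonical" and self.canonical is None:
            self.canonical = attrs.get("href")


def canonical_url(url, html):
    """Return the page's declared canonical URL, or the URL itself if none is declared."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical or url
```

Pages whose similarity exceeds some threshold could be grouped, with the group represented by its canonical URL.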

TL;DR 🚀

Crawling: find URLs on the internet.

Indexing: understand page content & make it searchable.

Searching: rank & show results.

The same Yahoo that rejected Google fell to ~1% search market share.

While Google became one of the most valuable companies in the world, worth ~2 trillion USD.

And laid the foundation of the modern Internet.
