How Shopify Handles Flash Sales at 32 Million Requests per Minute

#16: Learn More - Awesome Shopify Engineering (4 minutes)


This post outlines flash sales at Shopify. If you want to learn more, scroll to the bottom and find the references.


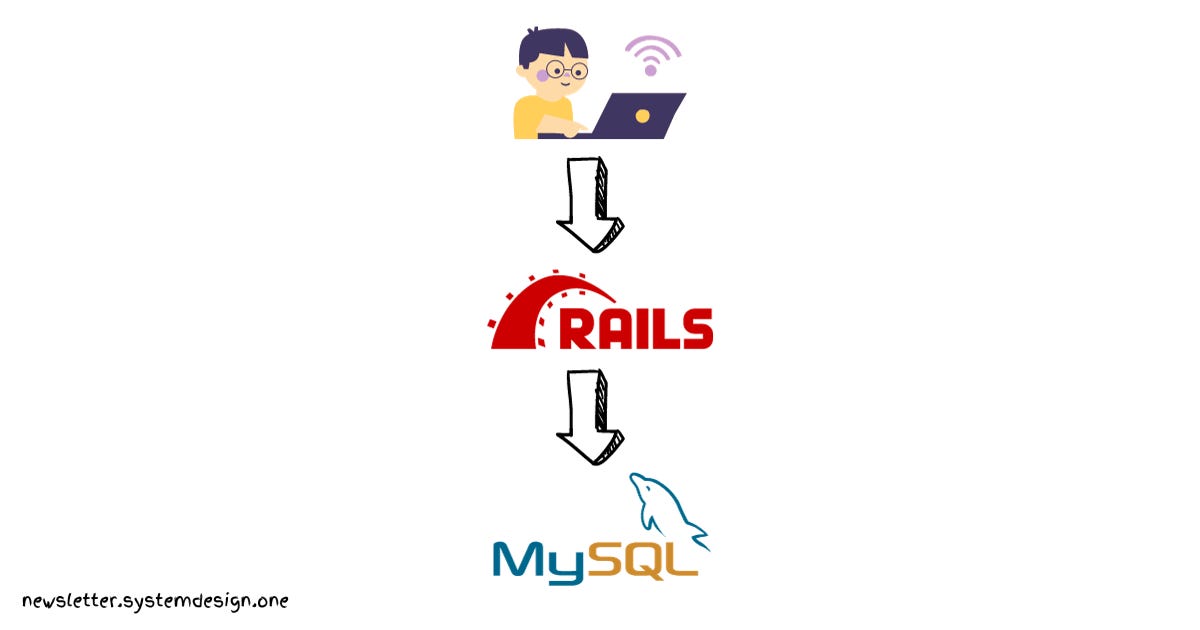

Once upon a time, there lived a Shopify server.

He had a single purpose in life: to help people sell products online.

He was the sweet child of Shopify engineers - and built with a simple architecture.

He used Nginx for load balancing because of its fast event loop. And Redis for caching expensive operations.

He was living a Happy life.

Until one day.

An Evil social media influencer, Pam, entered the picture.

And ruined it all.

She wanted to sell a limited edition of shoes in a short time at a discount price - a Flash sale.

So she opened the Bird app and Instagram - and hyped it up.

Shopify server knew what was coming for him: massive traffic.

He got Scared and called Shopify engineers for help.

So they thought hard.

And came up with 10 simple ideas to handle the Flash sale.

Shopify Flash Sale

Here are 10 ideas that Shopify used to handle Flash sales at 32 million requests per minute:

1. Low Timeout

A user wouldn’t wait more than a few seconds for an action to finish.

Besides, a client that waits on an unresponsive server wastes computing resources and increases costs.

So they lowered timeouts wherever they could in the system.
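Here is a minimal sketch of a caller-side timeout in Python. It is not Shopify's code. The names `call_with_timeout` and `slow_inventory_lookup` are made up for illustration, and the 0.5 second sleep stands in for an unresponsive dependency.

```python
import concurrent.futures
import time


def call_with_timeout(fn, timeout_s, fallback=None):
    """Run fn in a worker thread and stop waiting after timeout_s seconds."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(fn)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # The caller gives up and moves on. The worker thread itself is
        # not cancelled; it finishes in the background.
        return fallback
    finally:
        pool.shutdown(wait=False)


def slow_inventory_lookup():
    # Hypothetical dependency that answers too slowly.
    time.sleep(0.5)
    return "in stock"


# The caller waits about 0.05 seconds instead of the full 0.5.
status = call_with_timeout(slow_inventory_lookup, timeout_s=0.05, fallback="unknown")
```

This only bounds how long the caller waits. In a real client, you would also set timeouts on the socket or HTTP library so the slow work itself gets abandoned.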

2. Circuit Breaker

A degraded service is better than a completely down service.

And it's bad to keep connecting to a server that has failed many times in a short period, because the extra load prevents it from becoming healthy again.

So they implemented the circuit breaker pattern. While the circuit is open, requests are rejected right away, which protects the database and API server.
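A circuit breaker can be sketched in a few lines of Python. This is an illustration of the pattern, not Shopify's implementation. The class name, the `max_failures` and `reset_after_s` parameters, and their default values are all assumptions.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying it."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                # Open: fail fast and leave the struggling server alone.
                raise CircuitOpenError("circuit open, skipping call")
            # Cool-down elapsed: half-open, let one trial call through.
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

The clock is injectable so the cool-down can be tested without sleeping.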

3. Rate Limit

Performance degrades if the number of requests exceeds system capacity.

So they used rate limiting and load shedding to reject excess requests.

Also, they applied the back pressure pattern. It signals upstream callers to slow down, so the service keeps handling many requests without breaking.

Besides, they set up fair queueing to give priority to users who came first.
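The source talks don't say which rate limiting algorithm was used. As one common option, here is a token bucket sketch in Python. The class name and the `rate_per_s` and `capacity` parameters are assumptions for illustration.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then `rate_per_s` requests per second."""

    def __init__(self, rate_per_s, capacity, clock=time.monotonic):
        self.rate_per_s = rate_per_s
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated_at = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.updated_at
        # Refill tokens for the time that passed, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_per_s)
        self.updated_at = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        # No tokens left: the caller should reject or queue the request.
        return False
```

A server would call `allow()` once per incoming request and return an error such as HTTP 429 when it returns `False`.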

4. Monitoring

Monitoring and alerting on the important metrics helps to identify server failure risks quickly.

So they monitored the following metrics:

Latency: time it takes to process a unit of work

Traffic: the rate at which new work comes into the system, measured in requests per minute

Errors: the rate at which unexpected things occur

Saturation: system load relative to its total capacity
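The four metrics above can be computed from a window of request records. This is a rough sketch, not a monitoring setup. `RequestRecord`, `golden_signals`, and the `capacity_per_min` parameter are made-up names, and saturation is approximated here as traffic divided by capacity.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float
    ok: bool


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def golden_signals(records, window_s, capacity_per_min):
    """Summarize latency, traffic, errors, and saturation for one time window."""
    if not records:
        return {"p50_latency_ms": 0.0, "p99_latency_ms": 0.0,
                "traffic_per_min": 0.0, "error_rate": 0.0, "saturation": 0.0}
    latencies = sorted(r.latency_ms for r in records)
    traffic_per_min = len(records) / window_s * 60
    errors = sum(1 for r in records if not r.ok)
    return {
        "p50_latency_ms": percentile(latencies, 50),
        "p99_latency_ms": percentile(latencies, 99),
        "traffic_per_min": traffic_per_min,
        "error_rate": errors / len(records),
        # Assumption: load is measured as request rate against a known limit.
        "saturation": traffic_per_min / capacity_per_min,
    }
```

An alert would then fire when one of these values crosses a threshold, for example an error rate above a few percent.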

5. Structured Logging

They needed logs to debug and understand what happened in a web request.

Many servers were creating logs. So they stored the logs in a central place and kept them searchable.

They structured logs in a machine-readable format so they could be parsed and indexed quickly.

Besides, they included a correlation ID in each log entry to allow tracing.
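Here is a small sketch of structured logging with a correlation ID, using Python's standard `logging` module. The logger name `checkout`, the JSON field names, and the helper functions are assumptions for illustration.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Set by the caller through `extra=`; None if it was not passed.
            "correlation_id": getattr(record, "correlation_id", None),
        })


def build_logger(stream):
    logger = logging.getLogger("checkout")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False
    return logger


def new_correlation_id():
    # One ID per incoming web request, passed along to every service it touches.
    return str(uuid.uuid4())
```

A request handler would create one ID and attach it to every entry, for example `log.info("payment authorized", extra={"correlation_id": cid})`. Searching the central log store for that ID then shows the whole request.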

6. Idempotency Keys

Unreliable events are likely in a distributed system, especially under high traffic.

And a failed request should be safe to retry. For example, they didn’t want to double charge a customer’s card.

So they sent a unique idempotency key with each request. The server can then recognize a retry and return the earlier result instead of doing the work twice.
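The idea can be sketched with an in-memory store in Python. `PaymentService` and its fields are hypothetical. A real system would keep the keys in a durable, shared store instead of a dictionary.

```python
import uuid


def new_idempotency_key():
    # The client generates the key once and reuses it for every retry.
    return str(uuid.uuid4())


class PaymentService:
    def __init__(self):
        self._responses = {}  # idempotency key -> stored response
        self.charges = []     # every real charge made against a card

    def charge(self, idempotency_key, card, amount_cents):
        if idempotency_key in self._responses:
            # A retry of a request we already handled: replay the response.
            return self._responses[idempotency_key]
        self.charges.append((card, amount_cents))
        response = {"charge_id": len(self.charges), "amount_cents": amount_cents}
        self._responses[idempotency_key] = response
        return response
```

If the first response gets lost on the network, the client retries with the same key and the card is still charged only once.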

7. Consistent Reconciliation

They relied on financial partners. So they reconciled their own financial records against the partners' data to keep both consistent.

They tracked any data mismatch and automated the fix.
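Finding mismatches can be as simple as comparing two sets of records. This sketch assumes each side is a dictionary from a transaction ID to an amount in cents. The function name and the data shape are made up for illustration.

```python
def reconcile(internal, partner):
    """Return (txn_id, our_amount, their_amount) for every record that disagrees.

    A missing record on either side shows up as None.
    """
    mismatches = []
    for txn_id in sorted(internal.keys() | partner.keys()):
        ours = internal.get(txn_id)
        theirs = partner.get(txn_id)
        if ours != theirs:
            mismatches.append((txn_id, ours, theirs))
    return mismatches


# Example records; the amounts are invented.
internal_ledger = {"t1": 1000, "t2": 2500, "t3": 400}
partner_report = {"t1": 1000, "t2": 2000, "t4": 700}
issues = reconcile(internal_ledger, partner_report)
```

Each mismatch could then be sent to an automated fix, or to a person when no safe fix exists.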

8. Load Testing

They used load testing to find system bottlenecks and to put protection mechanisms in place.

They did load testing by simulating a large volume of traffic.
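A tiny load test can be sketched with a thread pool in Python. This is only an illustration: `run_load_test` and its parameters are made-up names, and `handler` stands in for a call to the system under test.

```python
import concurrent.futures
import time


def run_load_test(handler, total_requests, concurrency):
    """Call handler(i) for each request number, `concurrency` at a time."""

    def timed_call(i):
        start = time.perf_counter()
        try:
            handler(i)
            ok = True
        except Exception:
            ok = False
        return ok, time.perf_counter() - start

    with concurrent.futures.ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))

    latencies = [elapsed for _, elapsed in results]
    return {
        "requests": total_requests,
        "errors": sum(1 for ok, _ in results if not ok),
        "max_latency_s": max(latencies, default=0.0),
    }
```

Raising `concurrency` step by step and watching the error count and latency shows where the system starts to struggle.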

9. Incident Management and Retrospective

They set up proper incident management to improve service reliability.

And they involved 3 roles in incident management:

Incident Manager on Call (IMOC): coordinates the incident

Support Response Manager (SRM): responsible for public communication

Service Owner: responsible for restoring stability

Also, they held a retrospective after each incident. This helped them to:

Dig deep into what happened

Identify wrong assumptions about the system

Besides, they came up with action items based on the discussion. And implemented them to prevent the same failure from occurring again.

10. Data Isolation

They kept people’s data in different database shards. So if one database shard crashed, it wouldn’t affect the others.

But they shared the stateless workers between shards to get the best performance.

And replicated the database across many data centers for high availability.
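Here is a sketch of how a stateless worker could pick the shard for a shop. The hash-based routing, the `ShardRouter` class, and the shard names are assumptions for illustration. The source talks don't describe the routing scheme in this detail.

```python
import zlib


class ShardRouter:
    def __init__(self, shard_names):
        self.shard_names = list(shard_names)
        self.down = set()  # shards currently marked as unavailable

    def shard_for(self, shop_id):
        # A stable hash keeps each shop on the same shard every time.
        index = zlib.crc32(str(shop_id).encode()) % len(self.shard_names)
        return self.shard_names[index]

    def is_available(self, shop_id):
        # Only shops that live on a failed shard are affected.
        return self.shard_for(shop_id) not in self.down
```

Any worker can serve any shop because the worker holds no data itself. It only asks the router where the shop's data lives.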

Failures can still happen, but they reduced the risk of downtime. And limited the scope of impact.

And everybody lived Happily ever after.


References

https://shopify.engineering/building-resilient-payment-systems

https://www.usenix.org/conference/srecon16europe/program/presentation/stolarsky

https://www.infoq.com/presentations/shopify-architecture-flash-sale/

Photo by Roberto Cortese on Unsplash
