11 Reasons Why YouTube Was Able to Support 100 Million Video Views a Day With Only 9 Engineers

This post outlines YouTube's scalability in its early days. If you want to learn more, see the references at the bottom.

February 2005 - California, United States.

Three early PayPal employees wanted to build a platform to share videos.

They co-founded YouTube in their garage.

Yet they had limited financial resources, so they funded YouTube through credit card debt and borrowed infrastructure. The financial limitations forced them to create innovative scalability techniques.

In the next year, they hit 100 million video views a day. And they did this with only 9 engineers.

Here are the 11 YouTube scalability techniques:

1. Flywheel Effect

They took a scientific approach to scalability: collect and analyze system data.

Their workflow was a constant loop: identify and fix bottlenecks.

This approach avoided the need for high-end hardware and reduced hardware costs.
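The measure-then-fix loop can be illustrated with a small timing helper. This is only a sketch, not YouTube's tooling: the `timed` decorator, the `timings` store, and the two example functions are hypothetical names chosen for illustration.

```python
import time
from collections import defaultdict
from functools import wraps

# Hypothetical in-memory store of timings; a real system would
# ship these samples to a metrics service instead.
timings = defaultdict(list)

def timed(func):
    """Record the wall-clock duration of every call to func."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            timings[func.__name__].append(time.perf_counter() - start)
    return wrapper

def slowest(n=3):
    """Return the n function names with the highest total time, slowest first."""
    totals = {name: sum(samples) for name, samples in timings.items()}
    return sorted(totals, key=totals.get, reverse=True)[:n]

@timed
def render_page():
    time.sleep(0.01)  # stand-in for cheap work

@timed
def load_metadata():
    time.sleep(0.05)  # stand-in for the current bottleneck

render_page()
load_metadata()
print(slowest(1))
```

The function at the top of the list is the next candidate to fix. After the fix, the loop repeats with fresh measurements.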

2. Tech Stack

They kept their tech stack simple and used proven technologies.

MySQL stored metadata: video titles, tags, descriptions, and user data. They chose it because issues in MySQL were easy to fix.

The Lighttpd web server served video.

SUSE Linux was the operating system. They used Linux tools to inspect system behavior: strace, ssh, rsync, vmstat, and tcpdump.

Python ran on the application server because it offered many reusable libraries and they didn’t want to reinvent the wheel. In other words, Python allowed rapid and flexible development. Based on their measurements, Python was never a bottleneck.

Yet they used a Python-to-C compiler and C-language extensions to run CPU-intensive tasks.

3. Keep It Simple

They considered software architecture to be the root of scalability. They didn’t chase buzzwords to scale. Instead, they kept the architecture simple, which made code reviews easier and allowed them to rearchitect fast to meet changing needs. For example, they pivoted from a dating site to a video-sharing site.

They kept the network path simple because network appliances have scalability limitations.

They also used commodity hardware. It allowed them to reduce power consumption and maintenance fees, and keep costs low.

Besides, they kept the scale-aware code hidden from application development, so application code didn’t need to know how the system scaled.

4. Choose Your Battles

They outsourced problems that were not core to the product so they could focus on important things. They didn’t have the time or resources to build their own infrastructure for serving popular videos, so they put the popular videos on a third-party CDN. The benefits:

Low latency due to fewer network hops from the user

High performance because it served videos from memory

High availability because of automatic replication

They served less popular videos from a colocated data center, used software RAID to improve performance through parallel access across multiple disks, and tweaked their servers to prevent cache thrashing.

They kept their infrastructure in a colocated data center for 2 reasons: to tweak servers with ease to meet their needs, and to negotiate their own contracts.

Each video had 4 thumbnails, so they faced the problems of serving many small objects: lots of disk seeks and filesystem limits. They put the thumbnails in Bigtable, a distributed data store with many benefits:

Avoids small file problems by clustering files

Improved performance

Low latency with a multi-level cache

Easy to provision

Also, they faked data to avoid expensive transactions. For example, they displayed an approximate video view count and updated the real counter asynchronously. A popular technique today for trading exactness for speed is the Bloom filter, a probabilistic data structure.
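One way to picture the approximate view count is a counter that buffers increments in memory and writes them to the database in batches. The sketch below is an assumption about how such a counter could look, not YouTube's implementation. `BufferedViewCounter`, `flush_every`, and the in-memory `store` (a stand-in for the database) are hypothetical, and batching stands in for the asynchronous update.

```python
import threading
from collections import Counter

class BufferedViewCounter:
    """Buffer view increments and write them to the store in batches."""

    def __init__(self, flush_every=100):
        self.flush_every = flush_every
        self._pending = Counter()      # increments not yet written
        self._pending_total = 0
        self._lock = threading.Lock()
        self.store = Counter()         # stand-in for the database table

    def record_view(self, video_id):
        """Cheap path: bump an in-memory counter, flush only occasionally."""
        with self._lock:
            self._pending[video_id] += 1
            self._pending_total += 1
            if self._pending_total >= self.flush_every:
                self._flush_locked()

    def _flush_locked(self):
        # One batched write instead of one transaction per view.
        self.store.update(self._pending)
        self._pending.clear()
        self._pending_total = 0

    def flush(self):
        with self._lock:
            self._flush_locked()

    def displayed_count(self, video_id):
        """Possibly stale count shown to users."""
        return self.store[video_id]
```

The displayed count lags behind the true count by at most `flush_every - 1` views, which is the trade the text describes: slightly wrong numbers in exchange for far fewer writes.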

5. Pillars of Scalability

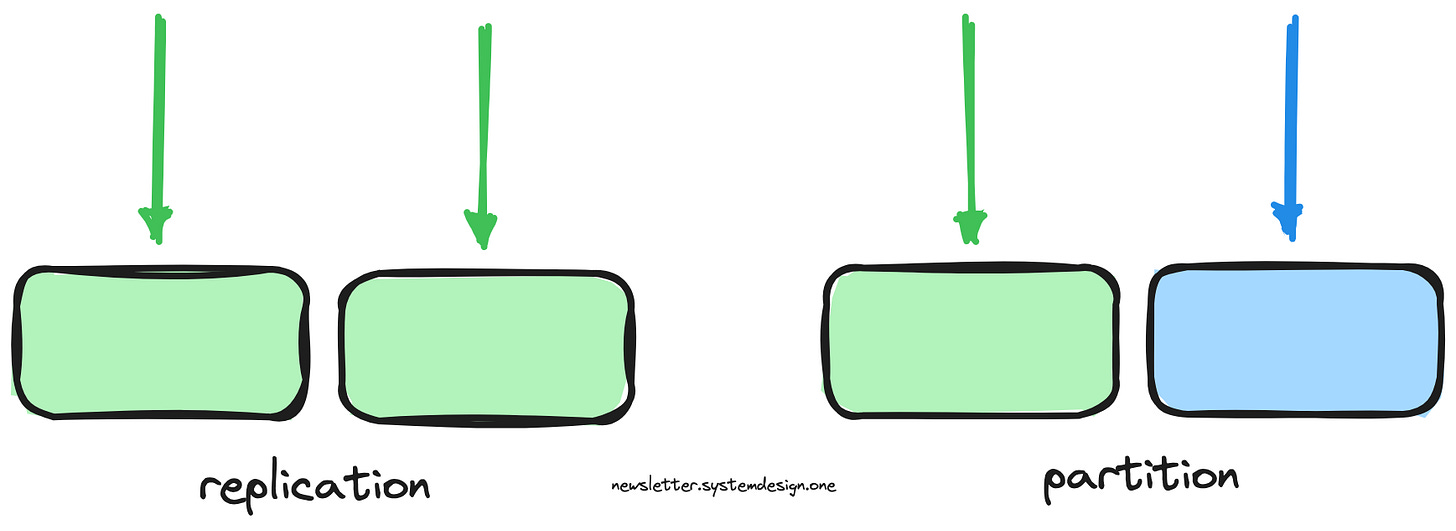

They relied on 3 pillars of scalability: stateless, replication, and partitioning.

They kept their web servers stateless and scaled them out via replication.

They replicated the database server for read scalability and high availability, and load balanced the traffic among replicas. But this approach caused problems: replication lag and limited write scalability.
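A rough sketch of read scaling through replicas: writes go to the primary, and reads rotate across replicas. The `ReplicatedDatabaseRouter` class and the server names are hypothetical, and treating only `SELECT` statements as reads is a simplifying assumption.

```python
import itertools

class ReplicatedDatabaseRouter:
    """Send reads to replicas in round-robin order and writes to the primary."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas)

    def route(self, sql):
        # Assumes a non-empty statement; only SELECT counts as a read here.
        verb = sql.lstrip().split(None, 1)[0].upper()
        if verb == "SELECT":
            return next(self._replicas)
        return self.primary

router = ReplicatedDatabaseRouter("primary", ["replica-1", "replica-2"])
print(router.route("SELECT title FROM videos WHERE id = 1"))
print(router.route("UPDATE videos SET title = 'x' WHERE id = 1"))
```

Every write still lands on a single primary, which is why replication alone doesn't scale writes. A read sent to a replica can also return stale data while that replica is catching up.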

So they partitioned the database for improved write scalability, cache locality, and performance. It also reduced their hardware costs by 30%.

Besides, they studied data access patterns to determine the partition level. For example, they studied popular queries, joins, and transactional consistency, and chose the user as the partition level.
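Partitioning by user can be sketched as a function that maps a user ID to a shard. The shard names and the hash-modulo scheme below are assumptions for illustration; the sources don't describe the exact mapping YouTube used.

```python
import hashlib

# Hypothetical shard names; each would hold all data for its users.
SHARDS = ["db-shard-0", "db-shard-1", "db-shard-2", "db-shard-3"]

def shard_for_user(user_id: str, shards=SHARDS) -> str:
    """Map a user ID to one shard using a stable hash."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]

print(shard_for_user("alice"))
```

Because all of a user's rows live on one shard, queries and joins scoped to that user stay on a single database. A plain modulo mapping moves many users when the shard count changes, so a real deployment would likely need a lookup table or consistent hashing.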

6. Solid Engineering Team

A knowledgeable team is an important asset to scalability.

They kept the team small, at 9 engineers, for improved communication. And the team had strong cross-disciplinary skills.

7. Don’t Repeat Yourself

They used caching to avoid repeating expensive operations. It allowed them to scale reads.

They also implemented caching at many levels, which reduced latency.
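Caching at several levels can be sketched as a lookup that tries the fastest cache first and falls back to slower ones. `TwoLevelCache` is a hypothetical name, and plain dictionaries stand in for a per-process cache and a shared cache; eviction and expiry are left out to keep the sketch short.

```python
class TwoLevelCache:
    """Check a local cache, then a shared cache, then the expensive loader."""

    def __init__(self, loader):
        self.local = {}        # stand-in for a per-process cache
        self.shared = {}       # stand-in for a shared cache server
        self.loader = loader   # the expensive operation, e.g. a database query
        self.loader_calls = 0

    def get(self, key):
        if key in self.local:
            return self.local[key]
        if key in self.shared:
            value = self.shared[key]
        else:
            self.loader_calls += 1
            value = self.loader(key)
            self.shared[key] = value
        self.local[key] = value  # promote to the fastest level
        return value

cache = TwoLevelCache(loader=lambda video_id: {"id": video_id, "title": "demo"})
cache.get("v1")
cache.get("v1")
print(cache.loader_calls)
```

The expensive loader runs once per key. Later reads are served from the nearest level that holds the value.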

8. Rank Your Stuff

They ranked video-watch traffic over everything else. So they kept a dedicated cluster of resources for video-watch traffic. It provided high availability.

9. Prevent the Thundering Herd

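The thundering herd problem occurs when many concurrent clients hit a server at the same moment, for example when a popular cache entry expires and every request for it falls through to the backend at once. It degrades performance.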

So they added jitter to prevent the thundering herd problem. For example, they added jitter to the cache expiry of popular videos.
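Jitter on cache expiry can be sketched as a small random offset added to each entry's time-to-live, so entries written together don't all expire together. The function name and the 10% spread below are assumptions, not values from the sources.

```python
import random

def ttl_with_jitter(base_ttl: float, jitter_fraction: float = 0.1, rng=random) -> float:
    """Return base_ttl shifted by a random offset of up to +/- jitter_fraction."""
    spread = base_ttl * jitter_fraction
    return base_ttl + rng.uniform(-spread, spread)

# Five popular videos cached at the same moment get different expiry times.
for video_id in ["v1", "v2", "v3", "v4", "v5"]:
    print(video_id, round(ttl_with_jitter(300), 1))
```

With a base of 300 seconds, each entry expires somewhere between 270 and 330 seconds, which spreads the refresh load over a minute instead of a single instant.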

10. Play the Long Game

They focused on the macro level: algorithms and scalability. They used quick hacks to buy time to build long-term solutions. For example, they stubbed a bad API with Python to prevent short-term problems.

They tolerated imperfection in their components. When they hit a bottleneck, they either rewrote the component or got rid of it.

They traded off efficiency for scalability. For example:

They chose Python over C

They kept clear boundaries between components to scale out, and tolerated the added latency

They optimized the software to be fast enough, but didn’t obsess over machine efficiency

They served video from a server location chosen by bandwidth availability, not by latency

11. Adaptive Evolution

They tweaked the system to meet their needs. Examples:

Critical components used RPC instead of HTTP REST, which improved performance

A custom BSON format served as the data serialization format, which offered high performance

Eventual consistency in certain parts of the application for scalability. For example, the read-your-writes consistency model for user comments

Studied Python to avoid common pitfalls, and relied on profiling

Customized open-source software

Optimized database queries

Made non-critical real-time tasks asynchronous
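The last item, making non-critical tasks asynchronous, can be sketched with a work queue and a background worker. This uses Python's standard `queue` and `threading` modules. The task name `notify_subscribers` and the `handle_upload` function are hypothetical.

```python
import queue
import threading

tasks = queue.Queue()
processed = []  # stand-in for the side effects of the slow work

def worker():
    """Drain the queue in the background until a None sentinel arrives."""
    while True:
        job = tasks.get()
        if job is None:
            tasks.task_done()
            break
        processed.append(job)  # stand-in for slow, non-critical work
        tasks.task_done()

def handle_upload(video_id):
    """Respond right away and defer the non-critical work to the queue."""
    tasks.put(("notify_subscribers", video_id))
    return "ok"

thread = threading.Thread(target=worker, daemon=True)
thread.start()

print(handle_upload("v1"))  # returns without waiting for the worker
tasks.join()                # only this demo waits; a request handler wouldn't
print(processed)
```

The request path only pays for a queue insert. The slow work happens later, off the critical path.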

They didn’t waste time writing code to restrict people. Instead, they adopted great engineering practices, such as coding conventions to improve their code structure.

Google acquired YouTube in 2006. It remains the market leader in video sharing with 5 billion video views a day.

According to Forbes, the founders of YouTube have a net worth of 100+ million USD.

References

Seattle Conference on Scalability: YouTube Scalability. (2012). YouTube. Available at: YouTube [Accessed 14 Sep. 2023].

www.youtube.com. (n.d.). Scalability at YouTube. [online] Available at: YouTube [Accessed 14 Sep. 2023].

didip (2008). Super Sizing Youtube with Python. [online] Available at: https://www.slideshare.net/didip/super-sizing-youtube-with-python.

Wikipedia Contributors (2019). YouTube. [online] Wikipedia. Available at: https://en.wikipedia.org/wiki/YouTube.

highscalability.com. (n.d.). YouTube Architecture - High Scalability. [online] Available at: http://highscalability.com/youtube-architecture.
