How Google Ads Was Able to Support 4.77 Billion Users With a SQL Database 🔥

#60: Break Into Google Spanner Architecture (5 Minutes)

Get my system design playbook for FREE on newsletter signup:

This post outlines Google Spanner architecture. You will find references at the bottom of this page if you want to go deeper.

Share this post & I'll send you some rewards for the referrals.

Note: This post is based on my research and may differ from real-world implementation.

Once upon a time, 2 students decided to sell their university project for a million dollars.

Yet they failed to make the sale.

So they decided to develop it further and named it Google.

The main source of their income was from advertisement slots (Ads).

And their growth rate was explosive.

Yet they stored Ads data in MySQL for simplicity and reliability.

As more users joined, they partitioned MySQL for scale.

Although partitioning temporarily solved their scalability issues, there were newer problems.

Here are some of them:

1. Scalability

Their storage needs skyrocketed with growth.

And re-partitioning MySQL takes time as data must be moved between servers.

Yet they had extremely minimal downtime requirements.

So it became hard.

2. Transactions

They need ACID compliance for Ads data.

But transactions became difficult after partitioning.

Think of a transaction as a series of writes and reads.

System Design Guided Practice - Sponsor

Preparing for system design interviews? Work through common questions with personalized feedback to help you improve and own your next interview. Start practicing with System Design Guided Practice by Hello Interview today.

Cloud Spanner Database

They need massive scalability of NoSQL.

And ACID properties of MySQL.

So they created Spanner - a distributed SQL database.

Here’s how:

1. Atomicity

Atomicity means a transaction is all or nothing. Put simply, a transaction must update data in different partitions at once.

Yet it’s difficult to ensure every partition will commit.

So they use the two-phase commit (2PC) protocol.

Here’s how it works:

Prepare phase: coordinator asks relevant partitions if they’re ready to commit

Commit phase: coordinator tells relevant partitions to commit if everyone agreed

The transaction gets aborted if any partition isn't ready. Thus achieving atomicity.

Ready for the best part?

2. Consistency

They provide strong consistency at a global level.

This means if data gets updated in Europe, the same data would be shown in Asia. Put simply, a read will always return the latest write.

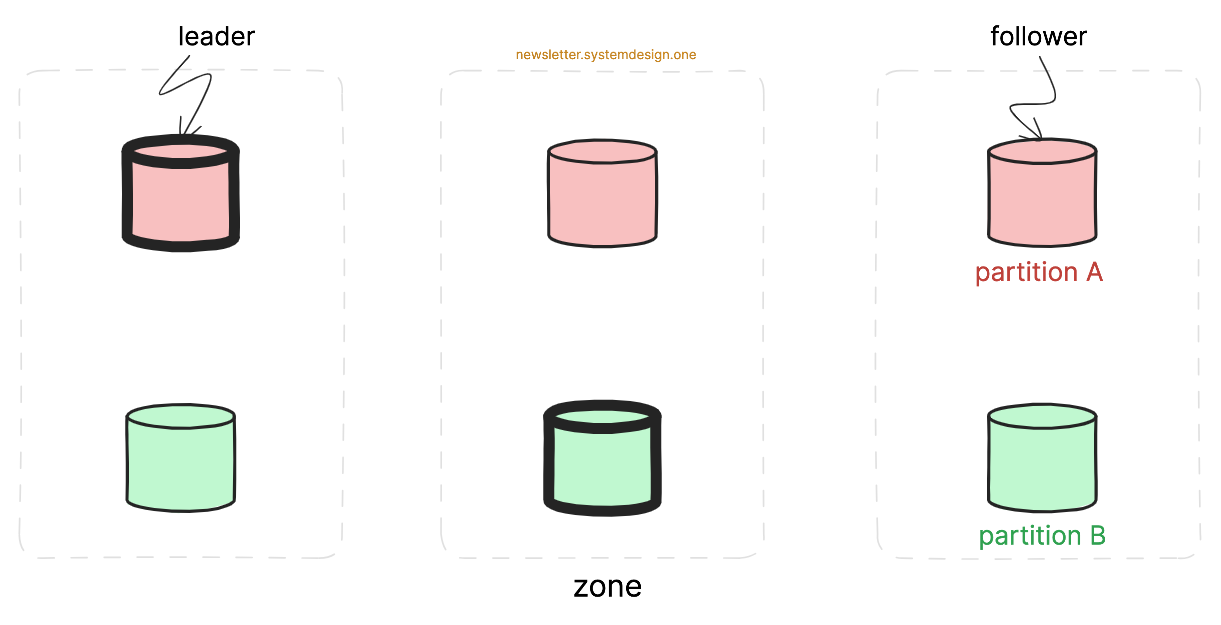

A database partition gets replicated across different zones for scalability. Think of a zone as a geographical location.

Yet it’s important to coordinate writes among replicas to avoid data conflicts.

So they use the Paxos algorithm to find a partition leader. A partition leader is responsible for managing writes, while followers handle reads. Also different partition leaders might get deployed in separate zones.

Imagine Paxos as a technique to ensure consensus across distributed systems.

A simple way to achieve strong consistency is by ordering writes with timestamps. It allows a consistent view of data across servers.

Yet it’s difficult to maintain the same time across every server in the world.

So they use TrueTime. Think of TrueTime as a combination of GPS receivers and atomic clocks. It finds the current time in each data center with high accuracy.

And each server synchronizes its quartz clock with TrueTime every 30 seconds.

Here’s how they perform reads:

The request gets routed to the nearest zone even if it has a follower

The follower asks the leader for the latest timestamp of the requested data

The follower compares the leader’s response with its timestamp

The follower responds to the client

Also the follower will wait for the leader to synchronize in case its data is outdated.

Ready for the next technique?

3. Isolation

Isolation means transactions don’t interfere with each other.

Yet it’s difficult to avoid data conflicts due to concurrent transactions.

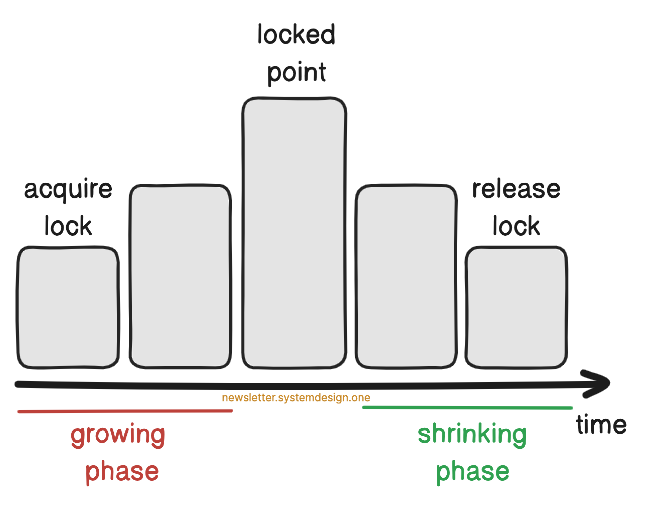

So they use two-phase locking (2PL) for data isolation during writes.

Here’s how it works:

Growing phase: transaction performs reads or writes once it acquires locks on data

Shrinking phase: transaction releases locks one at a time after it’s complete

Thus avoiding data conflicts on writes.

Besides they use snapshot isolation.

It’s a multi-version concurrency control (MVCC) technique for lock-free reads. Think of snapshot isolation as a way to look at the database from a point in time.

It returns a specific version of data during reads and doesn't affect ongoing writes. Thus providing data isolation.

Here’s how it works:

The previous data isn’t overwritten

Instead a new value gets written along with a TrueTime timestamp

Also older versions get automatically removed after a specific period to save storage.

Onward.