Everything You Need to Know About Consistent Hashing

#23: Read Now - Algorithm to Build a Planet-Scale Distributed Cache (4 minutes)

Get my system design playbook for FREE on newsletter signup:

Share this post & I'll send you some rewards for the referrals.

Imagine you own a website and it became popular.

So you install a cache to reduce the load on the origin server.

But soon the cache server will hit its limits and result in cache misses.

A simple solution is to replicate the cache server. Yet only a limited amount of data set can be cached.

So cache server must be partitioned to store more data.

But partitioning the cache to handle dynamic load is a difficult problem.

A naive approach is Static Hash Partitioning. Here’s how it works:

Hash the data key

Do a modulo operation of the generated hash code against the number of cache servers. This finds you the cache server ID

Store data key in the cache server

Cache Server ID = Hash(data key) mod n

where n is the number of cache serversBut existing mapping between data keys and cache servers will break if a cache server fails.

Also installing an extra cache server to handle more load will break the mapping.

A workaround is to rehash the data keys. But it’s an expensive operation.

So static hash partitioning won’t handle a dynamic load without service degradation.

A simple solution to this problem is consistent hashing.

The .NET Weekly (Featured)

Want to become a better software engineer? Each week, Milan sends one piece of practical advice about .NET and software architecture. It's a 5-minute read (or less) and comes every Saturday morning. Join 32,000+ engineers here:

Consistent Hashing

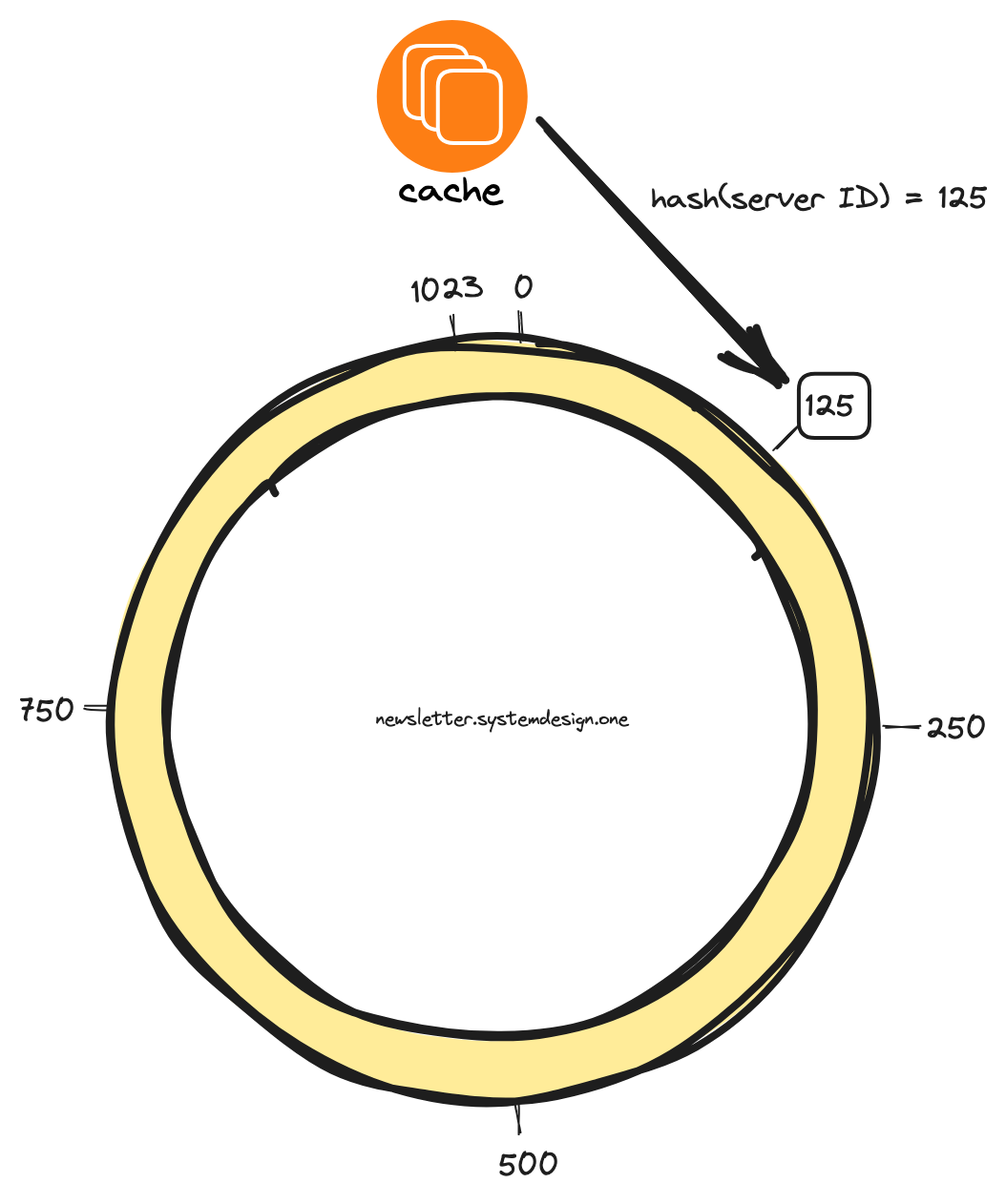

Consistent hashing places the cache servers on a virtual ring structure. It's called the hash ring.

The output space of the hash function gets mapped onto a circular space to create the hash ring. In other words, the biggest hash value gets wrapped around the smallest hash value.

This is how consistent hashing finds the position of a cache server:

Hash the IP address or domain name of the cache server

Base convert generated hash code

Do modulo operation on the hash code against the number of positions in the hash ring

Place the cache server in the hash ring

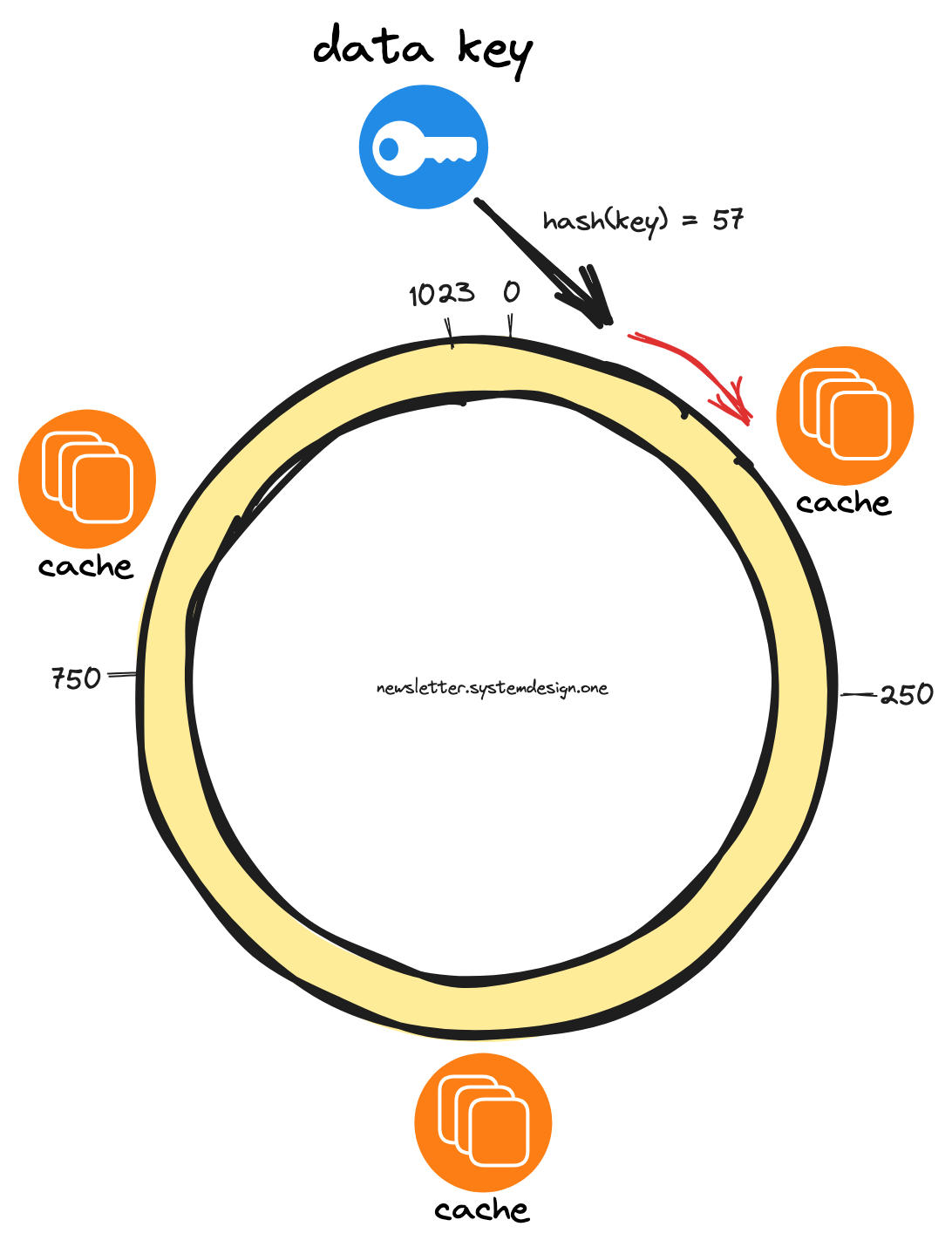

This is how consistent hashing finds the cache server to store a data key:

Hash the data key using the same hash function

Base convert generated hash code

Traverse the hash ring in the clockwise direction until a cache server

Store the data key in the cache server

Each cache server becomes responsible for the region between itself and its predecessor.

The data keys get moved to the immediate cache server in the clockwise direction if a cache server fails. While the remaining cache servers remain unaffected.

Besides only data keys that fall within the range of the new cache server get moved out when a cache server gets provisioned.

So consistent hashing reduces the data movement when the number of servers changes.

Also the data keys need not be uniformly distributed across cache servers in the hash ring.

So a single cache server can be assigned to many positions on the hash ring.

This can be done by hashing server ID through different hash functions. It’s called virtual nodes. And it prevents hot spots in the hash ring.

How to Implement Consistent Hashing?

A self-balancing binary search tree can be used to store the server positions in the hash ring. Because it offers logarithmic time complexity for search, insert, and delete operations.

Here is what happens when a data key gets inserted:

Hash the data key

Find the cache server by searching the binary search tree in logarithmic time

Store the data key in the cache server

Besides each cache server can keep a binary search tree to track the data keys stored by it.

It makes data movement easier when a cache server gets provisioned or decommissioned.

Consistent Hashing Use Cases

Other popular use cases of consistent hashing are:

Partitioning data in Amazon Dynamo and Apache Cassandra databases

Load balancing video stream traffic in Vimeo

Distributing video content across CDN by Netflix

Finding a specific Discord server by the Discord client

Consistent Hashing Advantages

The advantages of consistent hashing are:

Easy horizontal scalability

Minimal data movement when the number of servers change

Easy partitioning of data

Consistent Hashing Disadvantages

The disadvantages of consistent hashing are:

Hot spots in the hash ring could cause cascading failure

Risk of non-uniform data distribution

Server capacity is not taken into account

Consistent hashing is widely used in distributed systems. So it’s important to understand it.

Consider subscribing to get simplified case studies delivered straight to your inbox:

Thank you for supporting this newsletter. Consider sharing this post with your friends and get rewards. Y’all are the best.

References

https://github.com/papers-we-love/papers-we-love/blob/main/distributed_systems/consistent-hashing-and-random-trees.pdf

https://systemdesign.one/consistent-hashing-explained/

Really nice article. I was not familiar with this solution. In the implementation section, maybe you should add where to store the balanced tree. Should that be in Zookeeper? In a separate cache server reserved just for the application configuration?