How Hashnode Generates Feed at Scale

#33: Learn More - Awesome Personalized Feed Architecture (5 minutes)

This post outlines how the feed gets created at scale on Hashnode. If you want to learn more, scroll to the bottom and find the references.

June 2020 - Bangalore, India.

Two engineers created a blogging platform for software developers to share knowledge. And they called it Hashnode.

Yet they faced difficulties with user engagement and content discovery on their platform.

So they created a feed.

A feed is a personalized list of articles a user sees when they log into the platform. It’s created based on a user’s interests, preferences, and activities within the platform. In its simplest form, a feed is a collection of articles from the authors and tags that a user follows.

Feed Architecture

Here’s how Hashnode generates feed at scale:

1. Ranking Articles

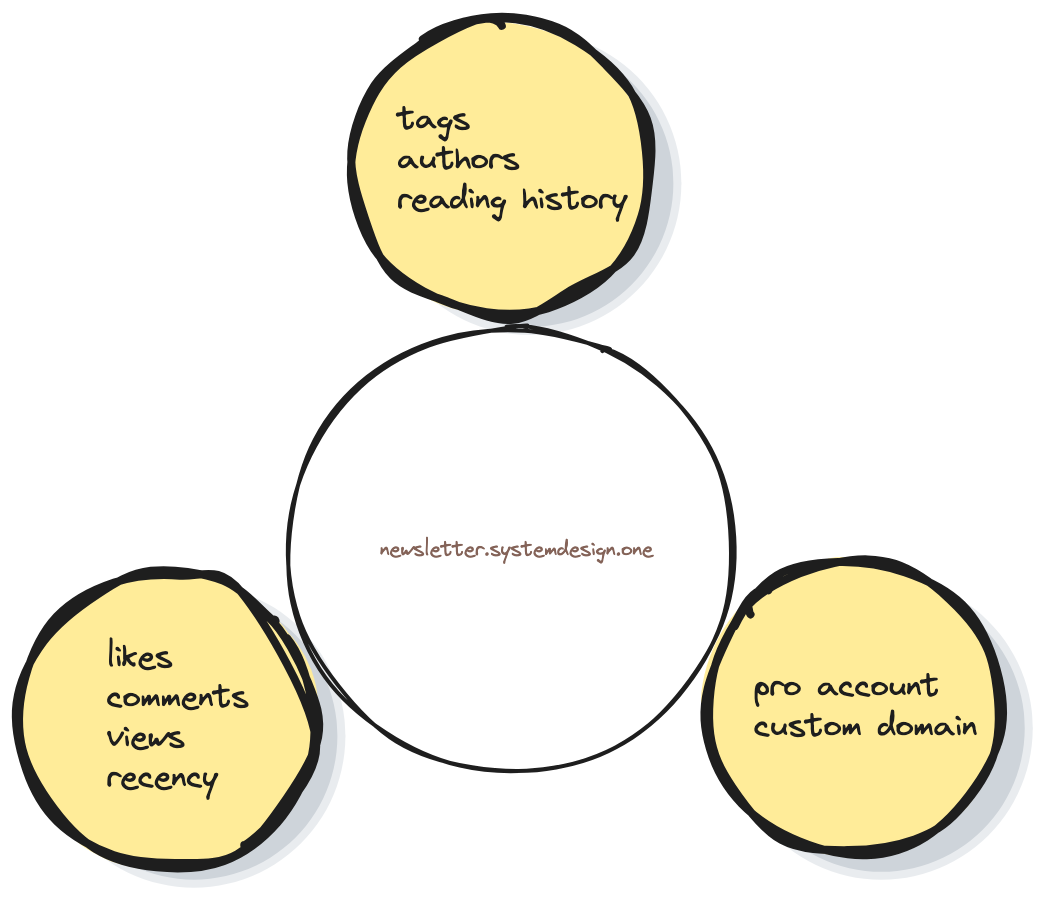

Everyone gets a unique feed because each user follows different authors and tags.

Yet they don’t use machine learning to compute personalized feeds.

Instead they use a ranking approach to keep it simple. Put another way, a score gets assigned to each article based on different factors.

They normalize the likes, views, and comments on each article so that a few very popular articles don't dominate every feed. This keeps the feed diverse.

Besides, they reduce the rank of an article if it was already shown on a user's feed. This keeps the feed fresh.
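The source doesn't give the actual scoring formula. Here's a minimal sketch of the idea, assuming a weighted sum of log-normalized counts and a fixed penalty for articles the user has already seen. The weights, the penalty, and the names `Article`, `normalize`, and `rank` are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Article:
    article_id: str
    likes: int
    views: int
    comments: int


# Hypothetical weights and penalty; the source does not state the real values.
WEIGHTS = {"likes": 0.5, "views": 0.2, "comments": 0.3}
SEEN_PENALTY = 0.5


def normalize(value: int, max_value: int) -> float:
    """Scale a raw count to [0, 1] on a log scale so huge counts are damped."""
    if max_value <= 0:
        return 0.0
    return math.log1p(value) / math.log1p(max_value)


def rank(articles: list[Article], seen_ids: set[str]) -> list[tuple[str, float]]:
    """Return (article_id, score) pairs, highest score first."""
    max_likes = max((a.likes for a in articles), default=0)
    max_views = max((a.views for a in articles), default=0)
    max_comments = max((a.comments for a in articles), default=0)

    scored = []
    for a in articles:
        score = (
            WEIGHTS["likes"] * normalize(a.likes, max_likes)
            + WEIGHTS["views"] * normalize(a.views, max_views)
            + WEIGHTS["comments"] * normalize(a.comments, max_comments)
        )
        if a.article_id in seen_ids:
            score *= SEEN_PENALTY  # demote articles already shown to this user
        scored.append((a.article_id, score))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

With this sketch, a popular article that was already shown can drop below a less popular one the user hasn't seen yet.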

2. Feed Generation

Their main page displays the feed. So feed generation should be fast.

Yet it’s expensive to create a personalized feed because a user might follow many authors. And articles from each author must be fetched.

Also, expensive database queries should be avoided at scale for performance. And fetching the same data many times without reuse wastes computing resources.

So they precompute the feed for active users and store it in the Redis cache. Put another way, the feed of a user gets computed before they visit it and gets served from memory.

An active user is someone who has logged into Hashnode in the last few days.

They compute the feed only for active users to reduce memory usage on Redis.
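Selecting the users whose feeds get precomputed could look like the sketch below. The 3-day window is an assumption, since the source only says "the last few days". The names `ACTIVE_WINDOW`, `is_active`, and `users_to_precompute` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical window; the source only says "the last few days".
ACTIVE_WINDOW = timedelta(days=3)


def is_active(last_login: datetime, now: datetime) -> bool:
    """A user is active if their last login falls inside the window."""
    return now - last_login <= ACTIVE_WINDOW


def users_to_precompute(last_logins: dict[str, datetime], now: datetime) -> list[str]:
    """Return the IDs of users whose feed is worth keeping in the cache."""
    return sorted(uid for uid, ts in last_logins.items() if is_active(ts, now))


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
logins = {
    "alice": now - timedelta(hours=5),
    "bob": now - timedelta(days=30),
}
active = users_to_precompute(logins, now)  # only "alice" is inside the window
```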

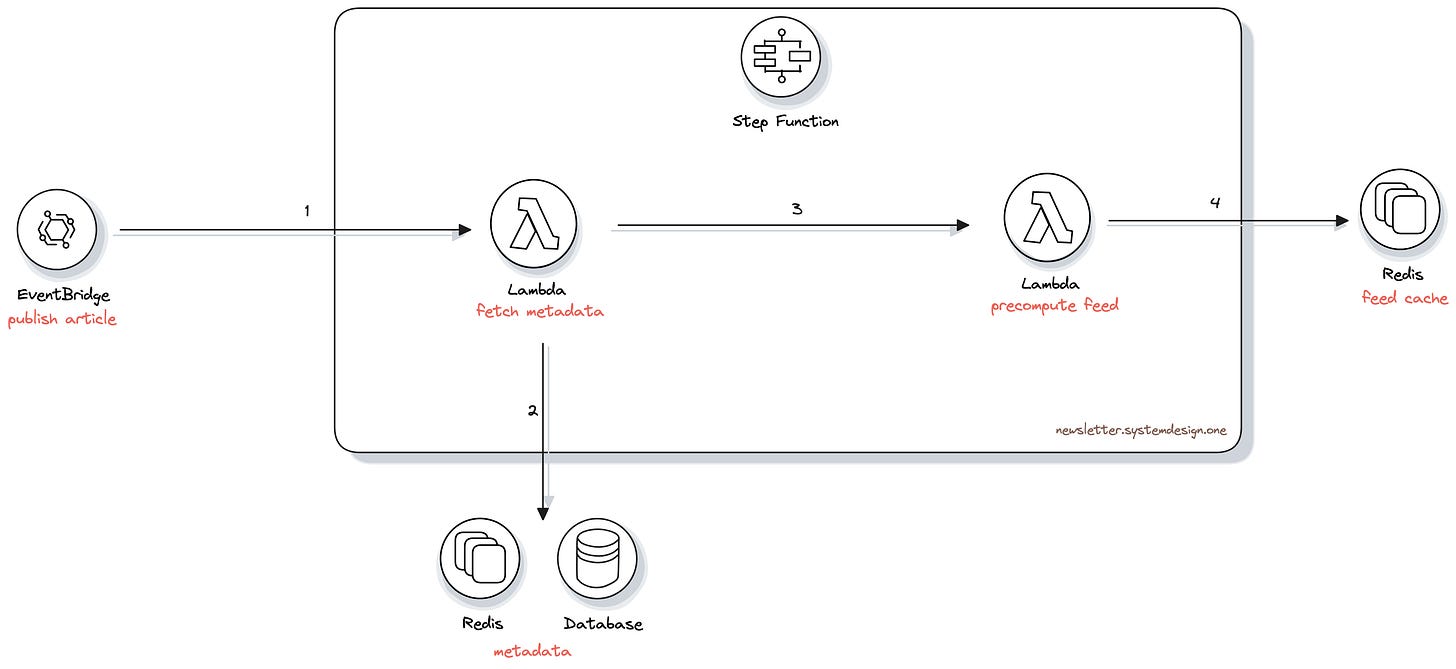

An event-driven architecture is used to keep the services decoupled and flexible. Put another way, an event gets emitted for each user action like publishing or liking an article.

They use a serverless architecture to precompute the feed because it’s easy to scale and avoids the need for server management.

Amazon EventBridge collects events like publishing or liking an article.

EventBridge is a serverless service that connects application components via events.
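An event for a published article might be shaped like the sketch below. The entry uses the field names of EventBridge's PutEvents API. The source name, the detail type, and the payload fields are assumptions, as the source doesn't describe Hashnode's real event schema.

```python
import json


def build_event_entry(detail_type: str, detail: dict) -> dict:
    """Build one entry in the shape the EventBridge PutEvents API expects."""
    return {
        "Source": "hashnode.articles",  # hypothetical source name
        "DetailType": detail_type,      # hypothetical, e.g. "article.published"
        "Detail": json.dumps(detail),   # Detail must be a JSON string
        "EventBusName": "default",
    }


entry = build_event_entry(
    "article.published",
    {"articleId": "a1", "authorId": "u7"},  # hypothetical payload fields
)

# With boto3 installed and AWS credentials configured, this could be sent as:
#   boto3.client("events").put_events(Entries=[entry])
```

A rule on the event bus could then route this event to the function that updates the followers' feeds.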

They store the articles on disk in MongoDB. It’s a NoSQL database.

And Upstash is their serverless Redis cache provider.

The metadata cache stores the author's data and their list of active followers.

A Lambda function queries the metadata cache to generate the feed. It queries the database if the cached data is stale.
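This "read the cache first, fall back to the database when stale" step is a cache-aside lookup. Here's a minimal in-memory sketch of it. The 5-minute freshness limit, the `MetadataCache` class, and the `load_from_db` callback are assumptions, because the source doesn't say how staleness is decided.

```python
import time
from typing import Callable

# Hypothetical freshness limit; the source does not say when data counts as stale.
MAX_AGE_SECONDS = 300.0


class MetadataCache:
    """In-memory stand-in for the metadata cache with a database fallback."""

    def __init__(
        self,
        load_from_db: Callable[[str], dict],
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._load_from_db = load_from_db
        self._clock = clock
        self._entries: dict[str, tuple[float, dict]] = {}

    def get(self, author_id: str) -> dict:
        now = self._clock()
        entry = self._entries.get(author_id)
        if entry is not None and now - entry[0] <= MAX_AGE_SECONDS:
            return entry[1]  # fresh cache hit, no database query
        data = self._load_from_db(author_id)  # miss or stale: query the database
        self._entries[author_id] = (now, data)
        return data
```

Repeated lookups for the same author then hit the database only once per freshness window.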

AWS Lambda is an event-driven, serverless compute service.

They use another Lambda function to compute the feed and store it in the Redis cache.

And each computation gets its own Lambda function for low latency.

They use the Distributed Map feature of AWS Step Functions to generate the feed in parallel.

AWS Step Functions is a serverless orchestration service. It keeps the Lambda functions free of extra logic by triggering and tracking them. Put another way, it’s used to coordinate many Lambda functions.
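A Distributed Map state could be defined roughly like the sketch below, written as a Python dict and serialized to the JSON that Step Functions accepts. This isn't Hashnode's actual state machine. The state names, the `$.followerIds` input path, the concurrency limit, the execution type, and the Lambda ARN are placeholders.

```python
import json

# Placeholder ARN; the real function name is not given in the source.
COMPUTE_FEED_LAMBDA_ARN = "arn:aws:lambda:REGION:ACCOUNT_ID:function:compute-feed"

state_machine = {
    "StartAt": "ComputeFeeds",
    "States": {
        "ComputeFeeds": {
            "Type": "Map",
            "ItemsPath": "$.followerIds",  # hypothetical input field
            "MaxConcurrency": 100,         # hypothetical limit
            "ItemProcessor": {
                # DISTRIBUTED mode runs each item as its own child execution
                "ProcessorConfig": {
                    "Mode": "DISTRIBUTED",
                    "ExecutionType": "EXPRESS",
                },
                "StartAt": "ComputeFeed",
                "States": {
                    "ComputeFeed": {
                        "Type": "Task",
                        "Resource": "arn:aws:states:::lambda:invoke",
                        "Parameters": {
                            "FunctionName": COMPUTE_FEED_LAMBDA_ARN,
                            "Payload.$": "$",  # pass the current item to the Lambda
                        },
                        "End": True,
                    }
                },
            },
            "End": True,
        }
    },
}

definition_json = json.dumps(state_machine, indent=2)
```

The orchestration lives in the definition. So the Lambda function only has to compute one user's feed.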

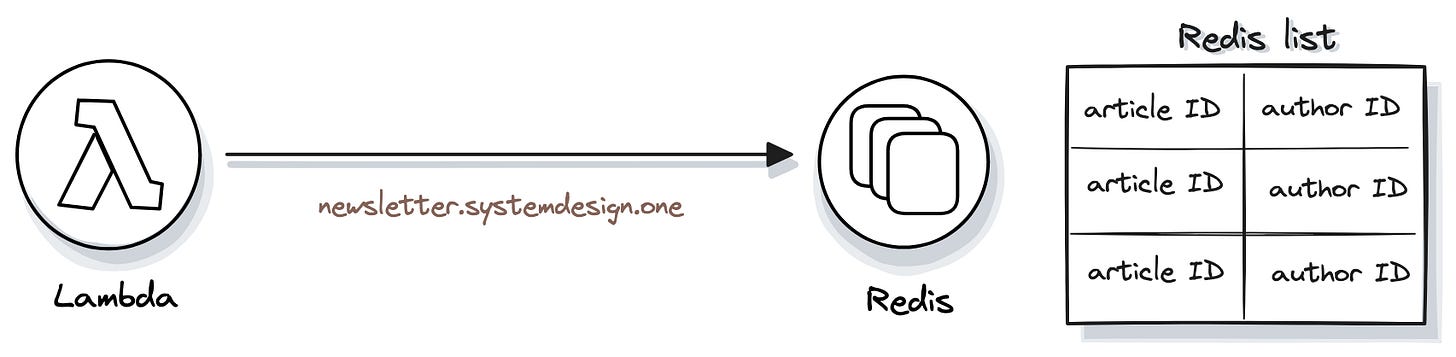

The details of their feed cache implementation are unknown. But a Redis list is an option to store the user's feed. Put another way, each user gets a separate Redis list.

A Lambda function computes the feed when an article gets published. It then inserts the article ID and author ID into each follower’s Redis list.

They could store a few hundred article IDs in the Redis list to avoid frequent re-computation.
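The write path described above can be sketched with an in-memory stand-in for the per-user Redis lists. A capped `deque` plays the role of `LPUSH` followed by `LTRIM`. The cap of 300 and the function names are assumptions, and the sketch stores only the article ID for brevity.

```python
from collections import defaultdict, deque

FEED_LIMIT = 300  # hypothetical cap; the source says "a few hundred"

# user_id -> newest-first article IDs (stand-in for one Redis list per user)
feeds: dict[str, deque] = defaultdict(lambda: deque(maxlen=FEED_LIMIT))


def fan_out(article_id: str, follower_ids: list[str]) -> None:
    """Push a new article onto every follower's feed: one write per follower."""
    for follower_id in follower_ids:
        # In Redis: LPUSH feed:<follower_id> <article_id>
        #     then: LTRIM feed:<follower_id> 0 <FEED_LIMIT - 1>
        feeds[follower_id].appendleft(article_id)  # oldest entry drops off when full


def read_feed(user_id: str, count: int = 20) -> list[str]:
    """Return the newest `count` IDs. In Redis: LRANGE feed:<user_id> 0 <count - 1>."""
    return list(feeds.get(user_id, ()))[:count]


fan_out("a1", ["u1", "u2"])
fan_out("a2", ["u1"])
page = read_feed("u1")  # newest first: a2, then a1
```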

Also, only article IDs get stored in the feed cache to prevent duplicating the article data. And a separate cache could store the article data.

The MGET command in Redis can be used to fetch many keys via a single operation to display the feed. It’s more efficient than separate GET operations because it needs only one network round trip. Put another way, they could fetch data about many articles with a single MGET request for speed.
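Turning a list of article IDs into article data with one `MGET` might look like this sketch. `FakeRedis` is a tiny in-memory stand-in so the example runs without a server. A real redis-py client exposes `mget` with the same return shape. The `article:<id>` key scheme and the JSON encoding are assumptions.

```python
import json


class FakeRedis:
    """In-memory stand-in exposing only mget, with redis-py's return shape."""

    def __init__(self, data: dict[str, str]) -> None:
        self._data = data

    def mget(self, keys: list[str]) -> list:
        return [self._data.get(k) for k in keys]  # missing keys come back as None


def load_articles(client, article_ids: list[str]) -> list[dict]:
    """Fetch the cached data for many articles in a single round trip."""
    keys = [f"article:{aid}" for aid in article_ids]  # hypothetical key scheme
    if not keys:
        return []
    values = client.mget(keys)
    # Skip IDs whose article data is not in the cache
    return [json.loads(v) for v in values if v is not None]


client = FakeRedis({
    "article:a1": json.dumps({"id": "a1", "title": "Hello"}),
    "article:a2": json.dumps({"id": "a2", "title": "World"}),
})
articles = load_articles(client, ["a1", "missing", "a2"])
```

The IDs that miss the cache would need a database lookup in a real system. The sketch just skips them.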

3. Performance

Hashnode is a read-heavy system because articles get read more often than written.

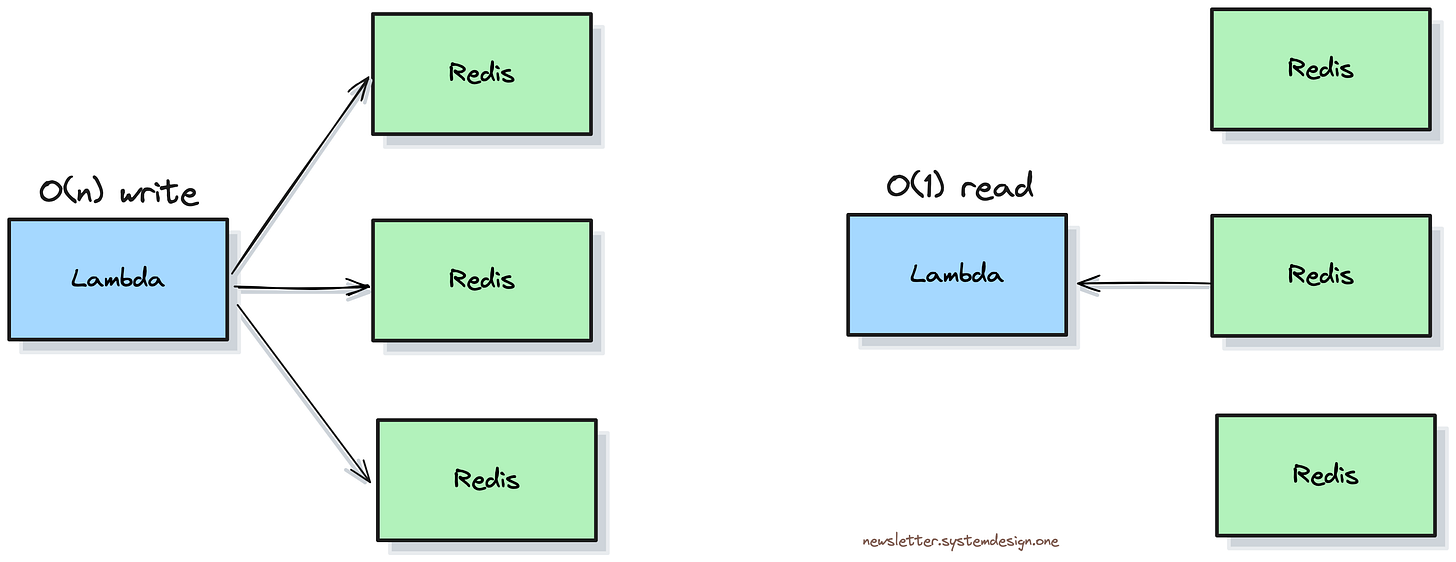

When an author publishes an article, they insert the article ID into each follower's feed. So it’s a linear time operation, O(n), where n is the number of followers.

In contrast, the feed of a user gets fetched with a single Redis request. So it’s a constant time operation, O(1), no matter how many authors the user follows.

So the feed generation can be slow for famous authors with many followers.

A possible solution is to merge the article ID only when the follower accesses their feed. Put another way, the feed of a famous author's followers doesn’t get immediately populated.
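This hybrid approach can be sketched as follows. Regular authors fan out on write, while articles from famous authors get merged in when the feed is read. The follower threshold and the function names are hypothetical, and both inputs are assumed to be sorted newest first.

```python
import heapq
import itertools

# Hypothetical threshold above which an author counts as "famous".
FAMOUS_FOLLOWER_THRESHOLD = 10_000


def should_fan_out(follower_count: int) -> bool:
    """Only push to followers' feeds at publish time for regular authors."""
    return follower_count < FAMOUS_FOLLOWER_THRESHOLD


def merged_feed(
    precomputed: list[tuple[int, str]],
    famous_recent: list[tuple[int, str]],
    limit: int = 20,
) -> list[str]:
    """Merge two newest-first lists of (timestamp, article_id) at read time."""
    merged = heapq.merge(
        precomputed, famous_recent, key=lambda item: item[0], reverse=True
    )
    return [article_id for _, article_id in itertools.islice(merged, limit)]


precomputed = [(50, "a5"), (30, "a3"), (10, "a1")]  # from the user's cached feed
famous_recent = [(40, "f4"), (20, "f2")]            # recent posts by famous authors
feed = merged_feed(precomputed, famous_recent, limit=4)
```

The trade-off is a bit more work on each read in exchange for skipping millions of writes when a famous author publishes.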

Hundreds of articles get published on Hashnode every day. And the feed lets them get the newest articles to interested users.

Now they serve 2.3 million software developers across the world.
