This Is How Stripe Does Rate Limiting to Build Scalable APIs

#9: Read Now - Awesome Rate Limiter (4 minutes)


This post outlines how Stripe does rate limiting. The references at the bottom cover the topic in more depth.


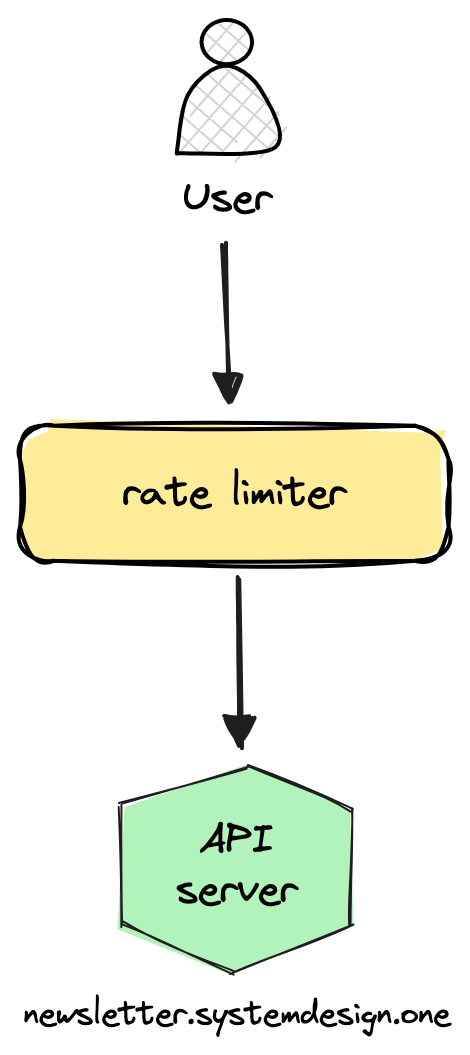

Rate Limiter

A rate limiter is important for building a scalable API because it prevents bad actors from abusing the API.

A rate limiter counts the requests it receives and rejects a request once the count exceeds a threshold. Requests are rate-limited at the user or IP address level.

Rate limiting is a good choice when a change in the pace of requests doesn't affect the user experience.

Other potential reasons to rate limit are:

Prevent low-priority traffic from affecting high-priority traffic

Prevent service degradation

Rejecting low-priority requests under heavy load is called load shedding.


Rate Limiter Workflow

The rate-limiting rules are predefined. Here is the rate limiter workflow:

Check the request against the rate limiter rules

Reject the request if it exceeds the threshold

Otherwise, let the request pass through
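The workflow above can be sketched as a small fixed-window counter in Python. This only illustrates the check, reject, or pass flow; it is not Stripe's code (Stripe uses a token bucket), and the `RULES` table, its routes, and the `FixedWindowLimiter` name are hypothetical. The clock is injectable so the behavior is easy to test.

```python
import time

# Hypothetical predefined rules: route -> (max requests, window length in seconds).
RULES = {
    "/v1/charges": (100, 1.0),
    "default": (25, 1.0),
}


class FixedWindowLimiter:
    """Counts requests per (client, route) in fixed time windows."""

    def __init__(self, rules, clock=time.monotonic):
        self.rules = rules
        self.clock = clock
        # (client, route) -> (window index, requests counted in that window)
        self.windows = {}

    def allow(self, client_id, route):
        # 1. Check the rule that applies to this route.
        limit, window = self.rules.get(route, self.rules["default"])
        current = int(self.clock() // window)
        key = (client_id, route)
        index, count = self.windows.get(key, (current, 0))
        if index != current:
            index, count = current, 0  # a new window has started: reset the counter
        # 2. Reject the request if it would exceed the threshold.
        if count >= limit:
            return False
        # 3. Otherwise count it and let it pass through.
        self.windows[key] = (index, count + 1)
        return True


limiter = FixedWindowLimiter(RULES)
print(limiter.allow("user_123", "/v1/charges"))
```

A fixed window is the simplest counter, but a client can send a full quota at the end of one window and another at the start of the next, briefly doubling the rate. Sliding windows and token buckets smooth this out.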

Rate Limiter Implementation

Stripe uses the token bucket algorithm to do rate limiting. Here is a quick overview of this algorithm:

Imagine a bucket filled with tokens. Every request must take a token from the bucket to pass through.

When the bucket is empty, no request can get a token, so further requests get rejected.

Tokens get refilled at a steady rate, up to the bucket's capacity.
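Here is a minimal single-process token bucket in Python. The parameter names `capacity` and `refill_rate` (tokens per second) are illustrative, not Stripe's. Instead of a background job that refills the bucket, it adds the tokens earned since the last check whenever a request arrives, which gives the same steady refill.

```python
import time


class TokenBucket:
    """Holds at most `capacity` tokens, refilled at `refill_rate` tokens per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.last_refill = clock()

    def _refill(self):
        now = self.clock()
        elapsed = now - self.last_refill
        # Add the tokens earned since the last refill, never exceeding the capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self, cost=1):
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost  # the request takes a token and passes through
            return True
        return False  # not enough tokens: reject


bucket = TokenBucket(capacity=10, refill_rate=5)
print(bucket.allow())
```

For per-user limits, keep one bucket per user ID. Since Stripe keeps rate limiter state in Redis, every API server would see the same bucket rather than its own in-process copy.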

Other popular rate-limiting algorithms are sliding window and leaky bucket.
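For comparison, here is a sketch of one sliding window variant, the sliding window log (the class name and parameters are illustrative). It remembers when each accepted request arrived and allows a new one only if fewer than `limit` requests fall within the last `window` seconds. There is no window boundary where bursts can double the rate, at the cost of storing one timestamp per accepted request.

```python
import time
from collections import deque


class SlidingWindowLog:
    """Allows at most `limit` requests in any rolling `window`-second interval."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.timestamps = deque()  # accepted request times, oldest first

    def allow(self):
        now = self.clock()
        # Drop timestamps that have slid out of the window.
        while self.timestamps and self.timestamps[0] <= now - self.window:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True
```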

Stripe built the rate limiter on Redis because it is in-memory and provides low latency.

Things they considered when implementing the rate limiter:

Test the rate limiter logic carefully and let requests bypass it on failures (fail open)

Return a clear response to the user: status code 429 (Too Many Requests) or 503 (Service Unavailable)

Add a panic mode (kill switch) that switches the rate limiter off if it fails

Set up alerts and monitoring

Tune the rate limiter to match traffic patterns
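Several of these points (failing open, a clear 429 response, and a panic switch) fit into one wrapper around the request handler. This is a hypothetical sketch: the `RateLimitMiddleware` class, the `Response` type, and the `allow(client_id)` limiter interface are assumptions, not Stripe's API. `Retry-After` is a standard HTTP header that tells clients when to try again.

```python
import logging
from dataclasses import dataclass, field


@dataclass
class Response:
    status: int
    headers: dict = field(default_factory=dict)
    body: str = ""


class RateLimitMiddleware:
    """Wraps a handler with a rate check. `limiter` needs an allow(client_id) -> bool method."""

    def __init__(self, limiter, handler, retry_after_seconds=1):
        self.limiter = limiter
        self.handler = handler
        self.retry_after_seconds = retry_after_seconds
        self.enabled = True  # panic mode: set to False to bypass rate limiting entirely

    def __call__(self, client_id, request):
        if self.enabled:
            try:
                allowed = self.limiter.allow(client_id)
            except Exception:
                # Fail open: a limiter bug or a cache outage must not block traffic.
                logging.exception("rate limiter failed; letting request through")
                allowed = True
            if not allowed:
                headers = {"Retry-After": str(self.retry_after_seconds)}
                return Response(429, headers, "Too Many Requests")
        return self.handler(request)
```

Failing open trades protection for availability: if the limiter breaks, the API keeps serving requests unthrottled, which is why the alerts and monitoring in the list matter.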

Rate limiting a distributed system is difficult because requests from a single user might not hit the same server. I don't know how Stripe solved this problem, but here is a potential solution.

Route the traffic from an IP address to the same data center using DNS, and run an isolated rate limiter in each data center.

Yet a new TCP connection might still hit a different server within that data center.

So put a proxy (twemproxy) in front of a shared cache in each data center. This lets many servers share state: put another way, all the servers in a data center use a single cache that holds the rate limiter counters.

Then use consistent hashing across the cache nodes to minimize key redistribution when the cluster is resized.
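A minimal consistent hash ring, assuming each rate limiter key (such as a user ID) is stored on one of several cache nodes. The node names, SHA-256, and 100 virtual nodes per server are illustrative choices. When a node is added, only keys that now land on the new node's positions move; every other key keeps its node, so most counters survive a resize.

```python
import bisect
import hashlib


class HashRing:
    """Consistent hash ring that maps rate limiter keys to cache nodes."""

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes  # virtual nodes per server even out the key distribution
        self._hashes = []  # sorted positions on the ring
        self._owner = {}  # position -> node name
        for node in nodes:
            self.add(node)

    @staticmethod
    def _hash(key):
        return int(hashlib.sha256(key.encode()).hexdigest(), 16)

    def add(self, node):
        for i in range(self.vnodes):
            h = self._hash(f"{node}#{i}")
            self._owner[h] = node
            bisect.insort(self._hashes, h)

    def remove(self, node):
        for i in range(self.vnodes):
            h = self._hash(f"{node}#{i}")
            del self._owner[h]
            self._hashes.remove(h)

    def node_for(self, key):
        # Walk clockwise to the first node position at or after the key's hash,
        # wrapping around to the start of the ring if needed.
        h = self._hash(key)
        idx = bisect.bisect_left(self._hashes, h) % len(self._hashes)
        return self._owner[self._hashes[idx]]


ring = HashRing(["cache-a", "cache-b", "cache-c"])
print(ring.node_for("ratelimit:user_123"))
```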

Rate Limiting Types

Stripe categorizes rate limiting into 4 types:

1. Request Rate Limiter

Each user gets n requests per second. This is the most popular type, and it acts as the first line of defense for an API.

2. Concurrent Requests Rate Limiter

This limits the number of requests a user can have in progress at the same time. It protects resource-intensive APIs and prevents resource contention.
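A single-process sketch of a concurrent requests limiter (the class and method names are hypothetical). It counts each user's in-flight requests, rejects new ones above `max_in_flight`, and frees the slot when a request finishes. A real deployment would keep these counters in a shared store such as Redis so that every server sees the same totals.

```python
import threading
from contextlib import contextmanager


class ConcurrencyLimiter:
    """Caps how many requests each user may have in progress at once."""

    def __init__(self, max_in_flight):
        self.max_in_flight = max_in_flight
        self.in_flight = {}  # user -> number of requests currently in progress
        self.lock = threading.Lock()

    def try_acquire(self, user):
        with self.lock:
            current = self.in_flight.get(user, 0)
            if current >= self.max_in_flight:
                return False
            self.in_flight[user] = current + 1
            return True

    def release(self, user):
        with self.lock:
            self.in_flight[user] -= 1
            if self.in_flight[user] == 0:
                del self.in_flight[user]

    @contextmanager
    def slot(self, user):
        """Yields True if the request may run; releases the slot afterwards if one was taken."""
        acquired = self.try_acquire(user)
        try:
            yield acquired
        finally:
            if acquired:
                self.release(user)
```

Unlike a request rate limiter, this does not care how fast requests arrive, only how many are running at once, which is what matters for slow, resource-heavy endpoints.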

3. Fleet Usage Load Shedder

Critical APIs reserve 20% of the computing capacity. Requests to non-critical APIs get rejected once they would use more than the remaining 80%, so the critical APIs always keep their 20%.
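The 20% reservation can be sketched as an admission check. This is a simplified model under assumed names (`FleetUsageShedder`, with `capacity` as the number of request slots across the fleet), not how Stripe actually measures fleet usage. Non-critical requests are capped at 80% of capacity, so only critical requests can use the last 20%, and shed requests get a 503.

```python
class FleetUsageShedder:
    """Keeps a slice of fleet capacity free for critical requests."""

    def __init__(self, capacity, critical_reserve_pct=20):
        self.capacity = capacity
        # Non-critical requests may use at most (100 - reserve)% of the capacity.
        self.non_critical_limit = capacity * (100 - critical_reserve_pct) // 100
        self.in_use = 0
        self.non_critical_in_use = 0

    def admit(self, critical):
        """Returns an HTTP status: 200 to proceed, 503 to shed the request."""
        if self.in_use >= self.capacity:
            return 503  # the whole fleet is busy
        if not critical and self.non_critical_in_use >= self.non_critical_limit:
            return 503  # non-critical traffic may not eat into the reserve
        self.in_use += 1
        if not critical:
            self.non_critical_in_use += 1
        return 200

    def finish(self, critical):
        """Call when an admitted request completes."""
        self.in_use -= 1
        if not critical:
            self.non_critical_in_use -= 1
```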

4. Worker Utilization Load Shedder

Non-critical traffic gets shed when the servers are overloaded and is re-enabled after a delay. This type is the last line of defense for an API.
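A sketch of a worker utilization load shedder, assuming traffic is split into priority tiers. Stripe's post describes tiers along the lines of critical methods, POSTs, GETs, and test mode traffic; the tier names, the 90% threshold, and the 5-second recovery delay below are assumptions. While utilization is high, each update sheds one more low-priority tier; once load drops, tiers come back one at a time, `recovery_delay` seconds apart. Critical traffic is never shed.

```python
import time

# Priority tiers, most important first (illustrative names).
TIERS = ["critical", "post", "get", "test_mode"]


class WorkerUtilizationShedder:
    def __init__(self, high_water=0.9, recovery_delay=5.0, clock=time.monotonic):
        self.high_water = high_water  # utilization at which shedding starts
        self.recovery_delay = recovery_delay  # seconds between restoring tiers
        self.clock = clock
        self.shed_levels = 0  # how many of the lowest tiers are currently shed
        self.last_change = clock()

    def update(self, busy_workers, total_workers):
        """Call periodically with the current worker utilization."""
        now = self.clock()
        utilization = busy_workers / total_workers
        if utilization >= self.high_water and self.shed_levels < len(TIERS) - 1:
            # Overloaded: shed one more low-priority tier (never the critical tier).
            self.shed_levels += 1
            self.last_change = now
        elif (utilization < self.high_water and self.shed_levels > 0
              and now - self.last_change >= self.recovery_delay):
            # Load has dropped: bring back one tier, then wait before the next.
            self.shed_levels -= 1
            self.last_change = now

    def admit(self, tier):
        # Admit a tier unless it is among the lowest `shed_levels` tiers.
        return TIERS.index(tier) < len(TIERS) - self.shed_levels
```

Restoring tiers slowly avoids flapping: if all shed traffic came back at once, it could overload the workers again immediately.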



References

https://stripe.com/blog/rate-limiters

https://www.cloudflare.com/en-gb/learning/bots/what-is-rate-limiting/

https://blog.cloudflare.com/counting-things-a-lot-of-different-things/

If you implement the simple request rate limiter, one important part is how you communicate it to clients. I had two cases in the last year where we breached a limit:

1. A free third-party API. We had a limit of 500 requests per day (which was not documented), and we didn't reach it for a long time. Then we suddenly started to get 403 errors, which immediately made us think our token had been revoked or had expired. Only when it started working again at 12:00 did we realize we had breached the limit; for some reason, they returned 403 Forbidden instead of the standard 429.

2. A paid API that we use heavily. Recently we reached their limit (100 calls/minute) and started to get 429 responses. The good part is that the response header told us when our limit would reset. This let us implement internal queueing of requests without noisy errors: when we got a 429, we stored the pending requests and resumed at the allowed time, until we hit the limit again. Since this happens mainly during rare peaks, this solution works perfectly for us.

Neo, correct me if I’m wrong, but from what I understand, Stripe’s strategy seems to be layered rather than type-based. It feels like all four types you mentioned are actually layers. I didn’t see this explicitly stated in the documentation, so just wanted to point it out for clarity. Appreciate the blog—great work!